It was 11:47 PM on a Tuesday, and Sarah, a freelance content strategist, was four hours deep into research for a client’s healthcare campaign. She’d been bouncing between ChatGPT, Claude, and Gemini, feeding each AI the same context: “I’m working on a campaign for diabetic patients, my client prefers a conversational tone, and we need to avoid medical jargon.”

Then her laptop crashed.

When she reopened everything twenty minutes later, every AI had forgotten. The context was gone. The preferences, vanished. She had to start from scratch—again.

Sound familiar? If you’ve ever felt like you’re training your AI assistant from zero every single session, you’re not alone. And you’re experiencing one of the most frustrating limitations of modern AI: the memory problem.

But here’s what most people don’t realize: secure AI memory for personalized AI chats isn’t just about convenience. It’s about transforming how AI actually understands and serves you, while keeping your data safe from the privacy nightmares making headlines.

The Hidden Productivity Problem Nobody’s Talking About

Let’s talk about what’s really happening when your AI “forgets” you.

Traditional AI chatbots operate in what developers call a “stateless” mode. Each conversation exists in its own bubble. When you close that chat window, poof—everything you told the AI evaporates. It’s like having a brilliant assistant who gets amnesia every time you leave the room.

The cost? A recent enterprise study found that knowledge workers waste an average of 2.3 hours per week re-explaining context to AI tools. That’s roughly 120 hours per year. For a company with 100 employees using AI daily, at an average hourly rate of $45, that adds up to about $540,000 in lost productivity annually.
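The arithmetic behind this estimate can be checked directly. Here is a minimal Python sketch. It uses the study’s 2.3 hours per week and the $45 hourly rate quoted above, and it assumes 52 working weeks per year.

```python
# Back-of-the-envelope cost of re-explaining context to AI tools.
# 2.3 h/week and $45/h come from the text; 52 weeks/year is an assumption.
HOURS_PER_WEEK = 2.3
WEEKS_PER_YEAR = 52
HOURLY_RATE_USD = 45
EMPLOYEES = 100

def annual_hours_lost(hours_per_week: float, weeks: int = WEEKS_PER_YEAR) -> float:
    """Hours one employee spends re-explaining context per year."""
    return hours_per_week * weeks

def annual_cost(employees: int, hours_per_year: float, rate: float) -> float:
    """Company-wide cost of those hours."""
    return employees * hours_per_year * rate

hours = annual_hours_lost(HOURS_PER_WEEK)
cost = annual_cost(EMPLOYEES, round(hours), HOURLY_RATE_USD)  # round 119.6 to 120
print(f"{hours:.1f} hours per employee per year")          # 119.6 hours per employee per year
print(f"${cost:,.0f} per year for {EMPLOYEES} employees")  # $540,000 per year for 100 employees
```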

But the problem runs deeper than wasted time.

Why Most AI Chats Feel Like Groundhog Day

Think about your last interaction with an AI chatbot. Maybe you asked for help writing a blog post. The AI probably asked: “What’s your target audience?” “What tone do you prefer?” “What’s the word count?”

Now imagine having that exact same conversation tomorrow. And the day after. And again next week.

This is the reality for millions of professionals using AI daily. The technology is brilliant, but it has no institutional memory. It doesn’t remember that you’re a vegetarian, that you code in Python, that you prefer data-driven examples over abstract theory, or that you’re building a SaaS product for small businesses.

Every. Single. Time. You start from scratch.

What Secure AI Memory for Personalized AI Chats Actually Means

Here’s where things get interesting—and complicated.

Secure AI memory for personalized AI chats is the ability of an AI system to remember your preferences, context, and past interactions across sessions while protecting that information with enterprise-grade security measures.

But “memory” in AI isn’t like human memory. When implemented properly, it’s actually far more powerful—and far more vulnerable if not properly secured.

The Three Types of AI Memory You Need to Understand

Short-term memory handles the immediate conversation. It’s what allows an AI to remember what you said three messages ago within the same chat session. This memory exists only within the model’s context window, a fixed budget of tokens that holds the recent conversation. Once a long chat exceeds that budget, the oldest material is typically dropped or summarized.
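To make the context window concrete, here is a minimal Python sketch of how a chat client might keep only the most recent messages that fit a token budget. It makes two simplifying assumptions. First, word count stands in for a real tokenizer. Second, `trim_to_budget` is a hypothetical helper, not any vendor’s API.

```python
from dataclasses import dataclass

@dataclass
class Message:
    role: str      # "user" or "assistant"
    content: str

def approx_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())

def trim_to_budget(history: list[Message], budget: int) -> list[Message]:
    """Keep the newest messages whose combined size fits within `budget`.

    Older messages are dropped first, which is why details from early in a
    long chat can fall out of short-term memory.
    """
    kept: list[Message] = []
    used = 0
    for msg in reversed(history):          # walk from newest to oldest
        cost = approx_tokens(msg.content)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()                          # restore chronological order
    return kept

history = [
    Message("user", "My client prefers a conversational tone"),
    Message("assistant", "Noted, conversational tone it is"),
    Message("user", "Draft an intro for diabetic patients"),
]
window = trim_to_budget(history, budget=12)
print([m.content for m in window])
```

With a 12-token budget, the first message no longer fits and is dropped. That was the message holding the tone preference.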

Session memory bridges multiple exchanges within a bounded timeframe or project. It might remember details from earlier today or this week, but it’s designed to expire. Think of it as your AI’s working memory.
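Session memory is often modeled as a store whose entries expire. Here is a minimal Python sketch that assumes a fixed time-to-live (TTL) per entry. The `SessionMemory` class and its methods are hypothetical illustrations. The clock is injectable so expiry can be demonstrated without waiting.

```python
import time
from typing import Callable, Optional

class SessionMemory:
    """Key-value memory whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock                                  # injectable for testing
        self._items: dict[str, tuple[str, float]] = {}      # key -> (value, expires_at)

    def remember(self, key: str, value: str) -> None:
        self._items[key] = (value, self.clock() + self.ttl)

    def recall(self, key: str) -> Optional[str]:
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._items[key]                # lazily evict expired entries
            return None
        return value

# Usage with a fake clock so expiry can be shown without waiting.
now = [0.0]
memory = SessionMemory(ttl_seconds=3600, clock=lambda: now[0])
memory.remember("project", "diabetes campaign")
print(memory.recall("project"))   # diabetes campaign
now[0] = 7200                     # two hours later
print(memory.recall("project"))   # None
```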

Long-term memory is the game-changer. This is where an AI permanently stores key information about you: your professional role, communication style, project details, preferences, and accumulated knowledge from hundreds of past interactions. Done right, this transforms an AI from a tool into a true assistant that evolves with you.
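Unlike session memory, long-term memory has to survive restarts, so it lives in durable storage. Here is a minimal sketch using Python’s built-in `sqlite3` module. The schema and the `LongTermMemory` class are assumptions for illustration. If you point `path` at a file instead of `":memory:"`, the stored facts persist across sessions.

```python
import sqlite3

class LongTermMemory:
    """Durable per-user facts stored in SQLite."""

    def __init__(self, path: str = ":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS facts ("
            " user_id TEXT, key TEXT, value TEXT,"
            " PRIMARY KEY (user_id, key))"
        )

    def remember(self, user_id: str, key: str, value: str) -> None:
        # Upsert: a newer fact replaces the old one for the same key.
        with self.db:  # commits the transaction on success
            self.db.execute(
                "INSERT OR REPLACE INTO facts (user_id, key, value) VALUES (?, ?, ?)",
                (user_id, key, value),
            )

    def profile(self, user_id: str) -> dict[str, str]:
        rows = self.db.execute(
            "SELECT key, value FROM facts WHERE user_id = ? ORDER BY key", (user_id,)
        )
        return dict(rows.fetchall())

    def forget(self, user_id: str, key: str) -> None:
        with self.db:
            self.db.execute(
                "DELETE FROM facts WHERE user_id = ? AND key = ?", (user_id, key)
            )

mem = LongTermMemory()
mem.remember("sarah", "role", "freelance content strategist")
mem.remember("sarah", "tone", "conversational")
print(mem.profile("sarah"))
```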

The problem? Most AI platforms don’t offer real long-term memory. And the few that do often have serious privacy issues.

The Privacy Nightmare Keeping Security Experts Awake

In October 2024, a Stanford study exposed something alarming: six major AI companies were feeding user conversations back into their training models by default. Your private chats, including sensitive medical questions, business strategies, and personal information, could potentially be seen by other users or exposed in data breaches.

One documented case involved shared ChatGPT links appearing in Google search results, which made private conversations discoverable by anyone running the right search. In another incident, healthcare workers accidentally shared patient information with AI chatbots that weren’t HIPAA compliant, resulting in serious violations.

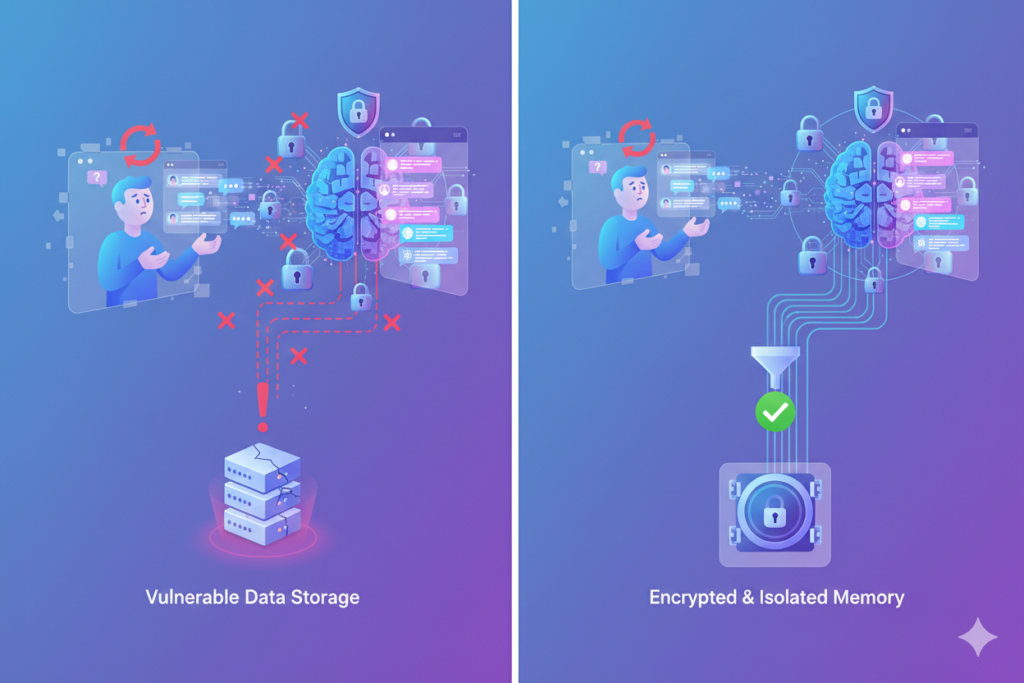

The core issue with secure AI memory for personalized AI chats boils down to three critical vulnerabilities:

Data Storage Risks

When AI platforms store your conversation history and preferences on remote servers, that data becomes a target. A 2025 study by Concentric AI found that generative AI tools exposed an average of three million sensitive records per organization in the first half of the year alone.

Your AI knowing you prefer low-sugar recipes seems harmless. But when that information is stored indefinitely, it can be used to infer health conditions. Those inferences could then flow to insurance companies or advertisers. Worse, they could surface in data breaches that expose medical vulnerabilities to cybercriminals.

Training Data Contamination

Some AI companies use your conversations to train future models. That client proposal you asked the AI to improve? It might now be part of the system’s training data, and a future model could potentially regurgitate elements of your proprietary strategy to competitors.

This isn’t theoretical. Companies have already banned employees from using certain AI tools specifically because of this risk.

The Shadow AI Problem

Perhaps most concerning is “shadow AI”: employees using unapproved AI tools without IT oversight. Reports suggest that 68% of employees who use ChatGPT at work do so without supervisor approval, and that roughly 80% of AI tools in enterprises operate without IT security oversight.

This creates massive exposure. When secure AI memory for personalized AI chats isn’t properly implemented, one employee’s careless prompt could expose an entire company’s intellectual property.

How Developers Are Solving the Memory Problem (And Where They’re Falling Short)

The AI industry recognizes the memory problem. Several solutions have emerged, each with tradeoffs:

Personal.AI lets users “stack memories” by uploading documents and past conversations. The AI learns from these stacks to provide more personalized responses. However, users report concerns about data portability and about what happens to their memory stacks if they want to switch platforms.

Mem0 offers an API-based memory layer for developers building AI applications. It’s powerful and technically sophisticated, but requires technical expertise to implement and doesn’t solve the problem for everyday users.

Perplexity’s Memory Feature automatically extracts key preferences from conversations and stores them for future context. It’s seamless, but users have limited visibility into what’s being remembered and limited insight into how to control it.

The common denominator? These solutions either require you to commit fully to a single platform (creating vendor lock-in), demand technical expertise most users don’t have, or don’t give you enough control over your own data.

What Actually Works: A Multi-Model Approach to Secure AI Memory

Here’s what most guides on secure AI memory for personalized AI chats won’t tell you: the most powerful solution isn’t choosing a single AI with memory—it’s having a unified platform where multiple AIs can access shared, secure memory while competing to serve you better.

This is where the conversation gets practical.

Imagine this workflow: you’re working on a complex project, such as launching a new B2B SaaS product. You need market research, competitor analysis, content strategy, technical documentation, and financial projections.

Instead of training five different AI tools on your project’s context separately, you have one secure memory layer that any AI model can access (with your permission). You create a project folder containing:

- Your company’s mission and values

- Target customer profiles

- Preferred communication tone

- Technical stack details

- Past decisions and their reasoning

- Industry-specific terminology
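One way to picture such a project folder is as a small structured record that gets rendered into the same system prompt for every model. Here is a minimal Python sketch. The `ProjectContext` fields mirror the list above. The prompt layout and the example values are made up for illustration; this is not how any particular platform formats its prompts.

```python
from dataclasses import dataclass, field

@dataclass
class ProjectContext:
    mission: str
    customer_profiles: list[str]
    tone: str
    tech_stack: list[str]
    decisions: list[str] = field(default_factory=list)      # past decisions + reasoning
    glossary: dict[str, str] = field(default_factory=dict)  # industry terminology

    def to_system_prompt(self) -> str:
        """Render the project memory as plain text any chat model can read."""
        lines = [
            f"Mission: {self.mission}",
            "Target customers: " + "; ".join(self.customer_profiles),
            f"Preferred tone: {self.tone}",
            "Tech stack: " + ", ".join(self.tech_stack),
        ]
        lines += [f"Decision: {d}" for d in self.decisions]
        lines += [f"Term '{t}': {meaning}" for t, meaning in self.glossary.items()]
        return "\n".join(lines)

# Hypothetical example values.
ctx = ProjectContext(
    mission="Simplify scheduling for small clinics",
    customer_profiles=["clinic office managers"],
    tone="friendly, plain English",
    tech_stack=["Python", "PostgreSQL"],
    decisions=["Chose PostgreSQL for row-level security"],
    glossary={"no-show": "a missed appointment"},
)
print(ctx.to_system_prompt())
```

Because the context is written down once and rendered the same way each time, every model receives an identical starting point.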

Now, when you ask Claude to draft technical documentation, it already knows your stack. When you ask ChatGPT to create marketing copy, it understands your audience. When you use Gemini for data analysis, it recognizes your business model.

All three AIs work from the same secure memory foundation, but each brings its unique strengths to different aspects of your project.

Real-World Implementation: How Teams Are Actually Using This

The Solo Founder: Marcus is building an AI-powered scheduling app. He keeps his secure AI memory in AiZolo’s project system, where he’s created a dedicated workspace for his startup. He’s stored his product roadmap, code architecture decisions, and customer interview insights in custom prompts that persist across all AI models.

When he needs to write investor emails, Claude already knows his fundraising goals and company story. When debugging code, GPT-4 has context on his entire codebase structure. When analyzing user feedback, Gemini understands his product vision and priorities.

Result: Marcus saves 15+ hours weekly by never re-explaining his project. More importantly, the quality of AI responses has improved dramatically because every model has full context.

The Marketing Agency: A four-person agency manages twelve clients simultaneously. They created separate project folders for each client in AiZolo, each containing:

- Brand guidelines and voice

- Past campaign performance data

- Approved messaging frameworks

- Target audience insights

- Content calendars

When any team member needs AI help with client work, they simply open that client’s project. The AI immediately has full context—regardless of which team member last worked on the account or which AI model they’re using.

Their clients have noticed: “It feels like you have a 20-person team because your work is so consistent and contextually on-point.”

The Developer Team: A small development team maintains secure AI memory for personalized AI chats by creating project folders for different codebases. Each folder contains:

- Code style guides

- Architecture decision records

- Common bug patterns and solutions

- Performance benchmarks

- Security requirements

Junior developers can now get AI help that’s contextually aware of senior developers’ past decisions. The AI doesn’t just generate code—it generates code that fits the team’s established patterns and practices.

The AiZolo Approach: Security Meets Flexibility

This is where we need to have an honest conversation about how AiZolo handles secure AI memory for personalized AI chats—because the approach is different, and deliberately so.

Most AI platforms force a choice: complete memory integration with privacy concerns, or complete privacy with no memory. AiZolo takes a third path designed for professionals who need both.

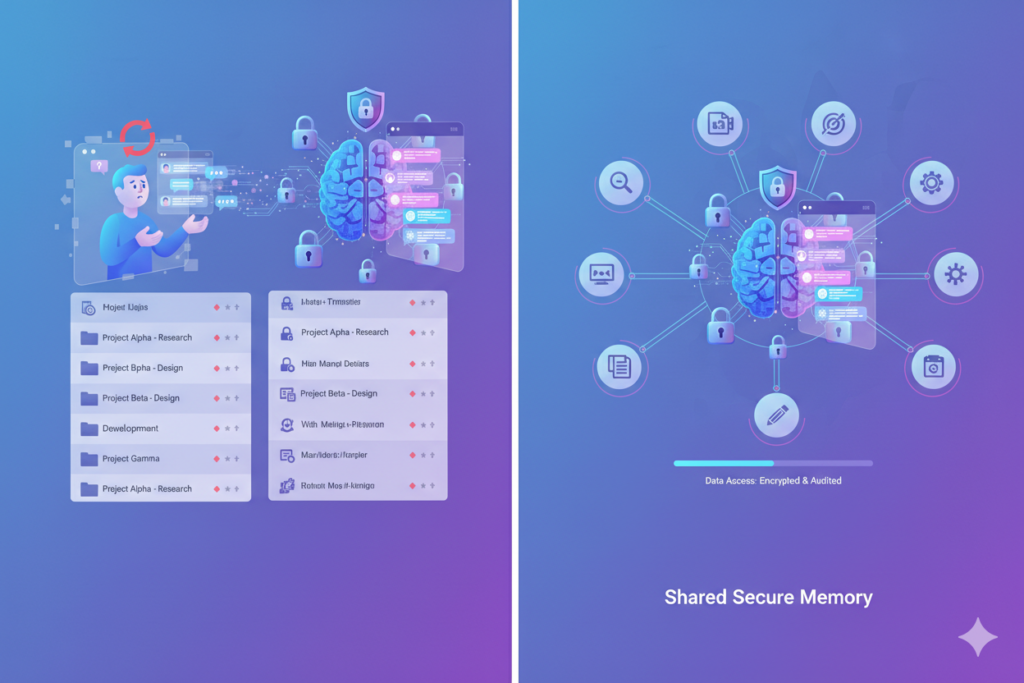

Project-Based Memory Architecture

Instead of storing everything in one massive memory bank, AiZolo uses project-based organization. You create separate projects for different contexts:

- Client work

- Personal projects

- Research initiatives

- Learning and development

Each project has custom system prompts where you define the context, preferences, and knowledge that specific project needs. This memory persists across all AI models within that project, but remains isolated from other projects.

The advantage? Compartmentalization. Your healthcare client’s sensitive information never mixes with your personal writing projects. Your employer’s proprietary data stays separated from your freelance work.
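Here is a minimal Python sketch of that compartmentalization. It assumes each project keeps its own context and that every lookup must name the project explicitly. `ProjectStore` is a hypothetical illustration, not AiZolo’s implementation.

```python
class ProjectStore:
    """Keeps each project's context in its own isolated namespace."""

    def __init__(self) -> None:
        self._projects: dict[str, dict[str, str]] = {}

    def create(self, project: str) -> None:
        if project in self._projects:
            raise ValueError(f"project {project!r} already exists")
        self._projects[project] = {}

    def set_context(self, project: str, key: str, value: str) -> None:
        self._projects[project][key] = value   # KeyError if the project is unknown

    def context_for(self, project: str) -> dict[str, str]:
        # Return a copy so callers cannot mutate a project's stored memory.
        return dict(self._projects[project])

store = ProjectStore()
store.create("healthcare-client")
store.create("personal-writing")
store.set_context("healthcare-client", "audience", "diabetic patients")
store.set_context("personal-writing", "genre", "travel essays")
print(store.context_for("personal-writing"))  # {'genre': 'travel essays'}
```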

Encrypted API Key Support

For users with their own API keys, AiZolo offers an even more secure approach. Your conversations pass through encrypted channels directly to OpenAI, Anthropic, or Google—bypassing AiZolo’s servers entirely for the actual conversation content. AiZolo provides the interface and organization, but your most sensitive data never touches their infrastructure.

This creates what security experts call “zero-knowledge architecture” for API key users.

Cross-Model Memory Access

Here’s where it gets powerful: within each project, any AI model can reference the custom context you’ve set. This means:

- You define your context once

- All AI models have access to that same foundational memory

- You can compare how different models use that context

- The best answer wins, regardless of which AI generated it

You’re not locked into a single AI’s interpretation of your needs. If Claude understands your technical requirements better but ChatGPT generates better marketing copy, you get the best of both—both working from the same secure memory foundation.
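The workflow described above sends one shared context to several models and keeps the best answer. It can be sketched in a few lines of Python. The model functions here are stand-ins that make no network calls; real calls would go through each provider’s SDK. The `score` argument is a hypothetical placeholder for whatever judgment you apply, whether human or automated.

```python
from typing import Callable

# A "model" is anything that takes (system_prompt, user_prompt) and returns text.
Model = Callable[[str, str], str]

def fan_out(models: dict[str, Model], system_prompt: str, user_prompt: str) -> dict[str, str]:
    """Send the same shared context and question to every model."""
    return {name: call(system_prompt, user_prompt) for name, call in models.items()}

def best_answer(answers: dict[str, str], score: Callable[[str], float]) -> tuple[str, str]:
    """Return (model_name, answer) for the highest-scoring answer."""
    name = max(answers, key=lambda n: score(answers[n]))
    return name, answers[name]

# Stand-in models for illustration only; they do not call any real API.
def model_a(system: str, user: str) -> str:
    return f"[A] {user} (context: {len(system)} chars)"

def model_b(system: str, user: str) -> str:
    return f"[B] {user.upper()}"

shared_context = "Tone: conversational. Audience: small-business owners."
answers = fan_out({"model_a": model_a, "model_b": model_b}, shared_context, "Write a tagline")
winner, text = best_answer(answers, score=len)   # toy scorer: longest answer wins
print(winner, "->", text)
```

Keeping the models behind one common function signature is what lets the same memory be reused across providers.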

Beyond Storage: What Makes Memory Actually “Secure”

Let’s get technical for a moment, because “secure” gets thrown around too loosely in AI marketing.

True secure AI memory for personalized AI chats requires multiple layers of protection:

Encryption at rest and in transit means your stored preferences and conversation history are encrypted when stored on servers and when moving across networks. This is baseline—if an AI platform doesn’t offer this, walk away.

Access control isolation ensures your memory is accessible only to your authenticated account. Sounds obvious, but some chatbot platforms have had vulnerabilities where session hijacking could expose user memories.
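At its simplest, access-control isolation means every memory read checks the authenticated caller against the record’s owner. Here is a minimal Python sketch. It assumes authentication (session token, OAuth, and so on) has already happened upstream. `MemoryVault` is a hypothetical name.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MemoryRecord:
    owner_id: str
    text: str

class MemoryVault:
    def __init__(self) -> None:
        self._records: dict[str, MemoryRecord] = {}

    def put(self, record_id: str, record: MemoryRecord) -> None:
        self._records[record_id] = record

    def get(self, record_id: str, authenticated_user: str) -> str:
        record = self._records.get(record_id)
        # Deny by default. A missing record and someone else's record raise the
        # same error, so callers cannot probe which record IDs exist.
        if record is None or record.owner_id != authenticated_user:
            raise PermissionError("memory not available to this account")
        return record.text

vault = MemoryVault()
vault.put("m1", MemoryRecord(owner_id="alice", text="prefers morning meetings"))
print(vault.get("m1", authenticated_user="alice"))
```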

Data retention policies define how long information is stored and what happens when you delete it. Can you truly delete your memories? Are they purged from backups? From training datasets? These questions matter.
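A retention policy ultimately becomes code that deletes records once they pass their allowed age. Here is a minimal Python sketch. It assumes each stored memory carries a creation timestamp, and the 90-day limit is chosen purely for illustration. The sketch deletes only from the live store. Purging backups and training datasets, the harder questions above, is outside its scope.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=90)   # illustrative policy, not a recommendation

def purge_expired(memories: dict[str, datetime], now: datetime,
                  retention: timedelta = RETENTION) -> list[str]:
    """Delete memories older than `retention`; return the deleted keys."""
    expired = [key for key, created in memories.items() if now - created > retention]
    for key in expired:
        del memories[key]
    return expired

now = datetime(2025, 6, 1, tzinfo=timezone.utc)
memories = {
    "tone": now - timedelta(days=10),
    "old-client": now - timedelta(days=200),
}
print(purge_expired(memories, now))  # ['old-client']
print(sorted(memories))              # ['tone']
```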

Anonymization capabilities allow sensitive data to be stored in formats that can’t be traced back to you personally. For instance, storing “user prefers morning meetings” rather than “John Smith at ABC Corp prefers morning meetings.”
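The example above can be taken a step further with pseudonymization, where known identifiers are swapped for stable placeholders before anything is stored. Here is a minimal Python sketch using a keyed hash (HMAC-SHA256) from the standard library. The secret key, the identifier list, and the `pseudonymize` helper are all assumptions. This is pseudonymization rather than full anonymization: anyone who holds the key and a list of candidate names can re-link the placeholders.

```python
import hashlib
import hmac
import re

SECRET_KEY = b"replace-with-a-secret-from-a-key-manager"  # assumption: kept server-side

def placeholder(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Stable token for an identifier (same input, same token; not reversible without the key)."""
    digest = hmac.new(key, identifier.lower().encode(), hashlib.sha256).hexdigest()
    return f"<id:{digest[:8]}>"

def pseudonymize(text: str, identifiers: list[str]) -> str:
    """Replace each known identifier (case-insensitive) with its placeholder."""
    for ident in identifiers:
        token = placeholder(ident)
        pattern = re.compile(re.escape(ident), re.IGNORECASE)
        text = pattern.sub(lambda _match: token, text)
    return text

raw = "John Smith at ABC Corp prefers morning meetings"
print(pseudonymize(raw, ["John Smith", "ABC Corp"]))
```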

Audit trails track what information is accessed and when. For enterprise users, this is critical for compliance and detecting unauthorized access.
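Here is a minimal Python sketch of an audit trail, assuming an in-memory append-only list. Each entry also stores a SHA-256 hash of the previous entry. This hash chaining is a common tamper-evidence technique added here for illustration; the paragraph above does not require it. With the chain in place, editing or deleting an earlier record causes verification to fail.

```python
import hashlib
import json
from datetime import datetime, timezone

class AuditLog:
    """Append-only, hash-chained log of memory access events."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, actor: str, action: str, memory_key: str) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,          # e.g. "read", "update", "delete"
            "memory_key": memory_key,
            "prev": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)

    @staticmethod
    def _digest(entry: dict) -> str:
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Recompute every hash; False means the log was altered."""
        prev = "0" * 64
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True

log = AuditLog()
log.record("alice", "read", "project:healthcare/tone")
log.record("bob", "read", "project:healthcare/audience")
print(log.verify())                  # True
log.entries[0]["actor"] = "mallory"  # tamper with history
print(log.verify())                  # False
```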

The reality is that no system is perfectly secure. But platforms that combine these protections with user control—letting you decide what to remember, what to forget, and what to keep completely offline—offer practical security for real-world use.

The Five Questions You Should Ask Before Trusting AI Memory

Before you entrust any AI platform with your secure AI memory for personalized AI chats, ask these questions:

1. Can I export my memory and take it elsewhere? Data portability matters. If you can’t easily migrate your accumulated context to another platform, you’re creating vendor lock-in. Ask how memory export works and in what format.
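In practice, portability means the memory can be serialized to an open format and loaded elsewhere. Here is a minimal Python sketch that assumes memories are simple key-value pairs grouped by project. The JSON layout and the `format_version` field are hypothetical; they are not any platform’s actual export format.

```python
import json

def export_memory(projects: dict[str, dict[str, str]]) -> str:
    """Serialize all project memories to a portable JSON document."""
    return json.dumps({"format_version": 1, "projects": projects},
                      indent=2, sort_keys=True)

def import_memory(document: str) -> dict[str, dict[str, str]]:
    """Load memories exported by `export_memory`, rejecting unknown versions."""
    data = json.loads(document)
    if data.get("format_version") != 1:
        raise ValueError("unsupported export format")
    return data["projects"]

projects = {"healthcare-client": {"tone": "conversational", "jargon": "avoid"}}
exported = export_memory(projects)
assert import_memory(exported) == projects   # lossless round trip
```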

2. What happens to my data if I delete my account? Some platforms promise deletion but keep data in backups indefinitely. Others feed deleted content into training models before removal. Get specific answers.

3. Can I see exactly what the AI remembers about me? Transparent memory management means you can view, edit, and delete specific memories. If the system makes inferences about you, can you see and correct them?

4. Is my conversation history used for training? This is the deal-breaker question. If the answer is “yes, by default” or unclear, proceed with extreme caution.

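5. What compliance standards does the platform meet? For professional use, look for a SOC 2 report, GDPR compliance, and industry-specific requirements like HIPAA for healthcare. A SOC 2 report reflects an independent audit of security controls. GDPR and HIPAA, by contrast, are legal frameworks rather than certifications, so ask how the platform demonstrates compliance; for HIPAA, ask whether it will sign a Business Associate Agreement.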

The Future Is Personalized (And Hopefully Private)

We’re at an inflection point with AI memory technology. The systems that will dominate the next five years won’t necessarily be the ones with the longest context windows or the largest training datasets. They’ll be the ones that solve the personalization-privacy paradox.

Early signals suggest the market is moving toward:

User-controlled memory graphs where you can visualize and manage what your AI knows about you in intuitive interfaces, not buried settings pages.

Federated learning approaches that allow AI to learn from your usage patterns without centralizing your raw data on remote servers.
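One widely used federated learning algorithm is federated averaging (FedAvg), and its core idea fits in a few lines. Each device trains locally and shares only its model parameters. A server then combines those parameters as an average weighted by how much data each device holds. Here is a minimal pure-Python sketch with made-up numbers. It omits the secure aggregation and differential privacy that real systems typically add on top.

```python
def federated_average(client_weights: list[list[float]], client_sizes: list[int]) -> list[float]:
    """FedAvg aggregation: average parameter vectors, weighted by local dataset size.

    Only parameters reach the server; the raw training examples stay on each client.
    """
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need one size per client and at least one client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]

# Two hypothetical devices: one trained on 100 examples, one on 300.
global_model = federated_average([[1.0, 0.0], [3.0, 4.0]], [100, 300])
print(global_model)  # [2.5, 3.0]
```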

Cryptographic memory storage using technologies like homomorphic encryption, allowing AI to compute on your encrypted memories without ever decrypting them.
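To show what computing on encrypted data means, here is a toy version of the Paillier cryptosystem. Paillier is an additively homomorphic scheme: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. This sketch is deliberately insecure. It uses tiny hard-coded primes, whereas real deployments use far larger keys and a vetted library. It also illustrates only partially homomorphic encryption (addition), not the fully homomorphic schemes that arbitrary AI computation would require.

```python
import math
import secrets

# Toy parameters: real Paillier keys use primes of 1024+ bits each.
P, Q = 293, 433
N = P * Q
N2 = N * N
G = N + 1                               # standard simplified generator
LAM = math.lcm(P - 1, Q - 1)            # Carmichael function of N (Python 3.9+)
MU = pow(LAM, -1, N)                    # valid because G = N + 1 (Python 3.8+)

def encrypt(m: int) -> int:
    """Encrypt 0 <= m < N with fresh randomness r coprime to N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N2) * pow(r, N, N2)) % N2

def decrypt(c: int) -> int:
    x = pow(c, LAM, N2)
    return ((x - 1) // N * MU) % N      # L(x) = (x - 1) / N, then multiply by mu

def add_encrypted(c1: int, c2: int) -> int:
    """Homomorphic addition: the product of ciphertexts decrypts to m1 + m2 (mod N)."""
    return (c1 * c2) % N2

a, b = encrypt(20), encrypt(22)
print(decrypt(add_encrypted(a, b)))    # 42
```

The party adding the ciphertexts never sees 20, 22, or 42; only the key holder can decrypt the result.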

Ephemeral memory options that automatically expire context after set timeframes, balancing personalization with privacy by design.

The question for professionals, developers, students, and creators isn’t whether to use AI with memory—it’s how to do it securely and effectively while maintaining control.

Making Secure AI Memory Work for You: Practical Implementation

Let’s end with actionable steps you can implement today to leverage secure AI memory for personalized AI chats without compromising your privacy:

Start with compartmentalization. Create distinct projects for different contexts. Professional work in one space, personal exploration in another, sensitive research in a third. This limits exposure if any single context is compromised.

Build your memory layer gradually. Don’t dump your entire life story into an AI on day one. Start with basic preferences and expand as you understand how the system uses that information.

Regularly audit what’s remembered. Set a monthly reminder to review what your AI knows about you. Delete outdated information. Correct inaccurate inferences. Think of it like clearing browser cookies—digital hygiene.

Use custom API keys when handling sensitive data. If you’re working with confidential client information or proprietary business data, the investment in API keys and encrypted channels pays for itself in risk reduction.

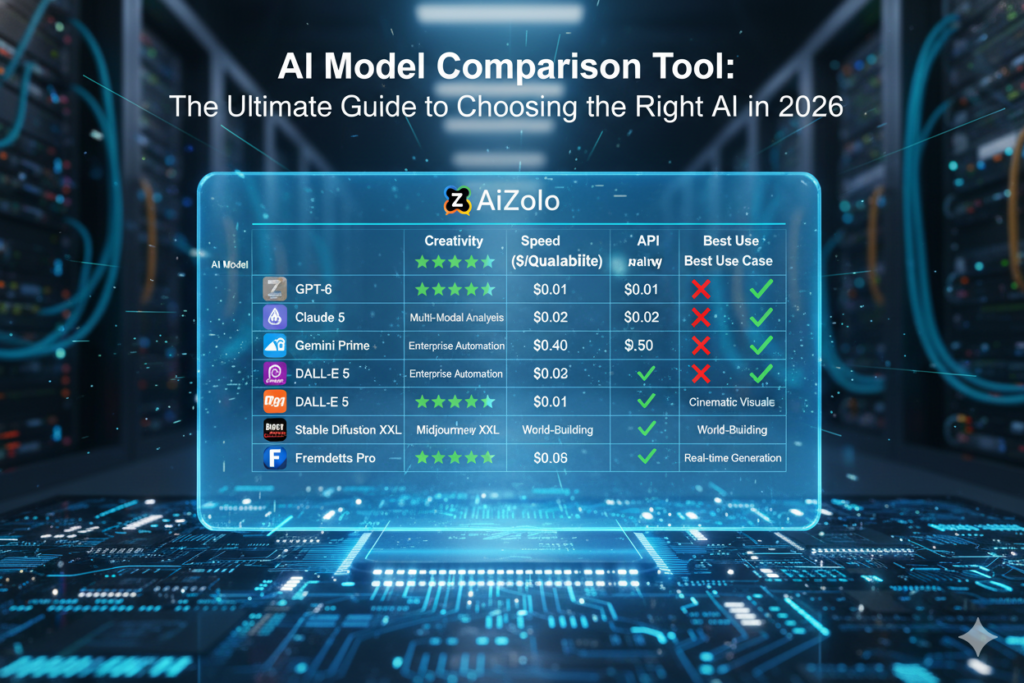

Test different models with the same memory. This is particularly powerful in multi-model platforms. See how Claude interprets your context versus ChatGPT versus Gemini. You’ll quickly learn which model serves which purpose best with your specific information.

Document your context explicitly. Don’t rely on AI to infer what you mean. Create clear, written context documents for each project: goals, constraints, preferences, terminology. Explicit beats implicit every time.

Set up “memory checkpoints.” Periodically ask your AI, “What do you remember about this project?” Review the summary for accuracy. This catches both gaps and incorrect assumptions before they multiply.

Your Next Step

The conversation about secure AI memory for personalized AI chats is just beginning. As these tools become more integrated into our daily workflows, the stakes—both in terms of productivity gains and privacy risks—continue rising.

The professionals who will thrive in this AI-augmented world aren’t necessarily those with access to the most powerful AI models. They’re the ones who understand how to build secure, persistent memory systems that amplify their effectiveness without compromising their data.

Whether you’re a developer building the next breakthrough SaaS product, a marketer managing multiple client brands, a student conducting research across semester-long projects, or a founder wearing every hat in your startup—the question isn’t if you need AI with memory. It’s how you implement it securely and effectively.

Explore more insights on how to work smarter with AI on the AiZolo blog, where we break down practical AI strategies for real-world workflows.

Ready to experience truly personalized AI without the privacy nightmare? Start building smarter with AiZolo and see how project-based memory transforms your AI interactions across multiple models—all from one secure workspace.

The future of AI isn’t just about better models. It’s about better memory. Secure memory. Your memory.

And it starts with understanding that you don’t have to choose between personalization and privacy—not anymore.

Suggested Internal Links

- Link “multi-model comparison” to: Testing AI Output Across Multiple Models: A Multi-Model AI Comparison Guide

- Link “all-in-one AI workspace” to: The Shocking All-in-One AI Subscription Revolution

- Link “project-based organization” to: AI Tools for Creators All in One Platform

- Link “API key integration” to: AI Subscription Bundles: The Smart Solution

Suggested External Links

- Stanford Study on AI Chatbot Privacy Concerns – Authoritative research on privacy risks

- Mem0 – Memory Layer for AI Apps – Technical implementation resource for developers

- IAPP: Chatbot Privacy Discussion – Industry perspective on regulatory landscape

- TechTarget: AI Chatbot Privacy Concerns – Enterprise security best practices