When Five Browser Tabs Became One Decision

Marcus sat at his desk, staring at five open browser tabs. Each one displayed a different test automation tool: Selenium, Appium, Testim, Katalon, and Functionize. His startup was burning through $800 a month on its testing stack, and his QA team was still drowning in test maintenance.

“There has to be a better way,” he muttered, closing another false-positive alert.

Sound familiar? If you’re exploring testing frameworks for AI tools in 2025, you’re probably facing the same challenge: how do you choose the right framework without breaking your budget or overwhelming your team?

The answer isn’t just about picking the most popular tool. It’s about understanding what makes modern testing frameworks work, how AI is transforming quality assurance, and finding solutions that actually deliver value without the complexity.

Let’s break it down.

The Evolution of Testing Frameworks for AI Tools

Testing frameworks have undergone a massive transformation. What started as simple record-and-playback tools in the early 2000s has evolved into sophisticated AI-powered systems that can predict failures, self-heal broken tests, and generate test cases from plain English descriptions.

Here’s what changed:

By one industry estimate, only 12% of development teams used AI in their testing workflows in 2017; today that figure stands at 81%. The shift isn’t just about automation; it’s about intelligence.

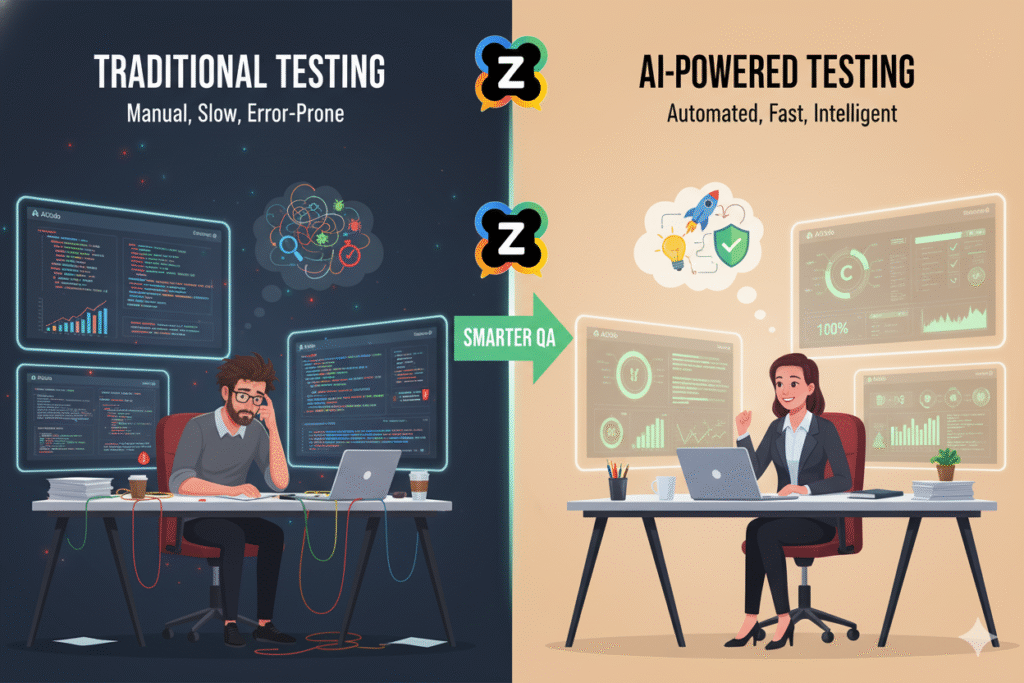

Traditional testing frameworks required extensive coding knowledge, constant maintenance, and hours of manual intervention. Modern testing frameworks for AI tools leverage machine learning to adapt to code changes, prioritize high-risk tests, and reduce the maintenance burden by up to 80%.

What Are Testing Frameworks for AI Tools?

Testing frameworks for AI tools are specialized platforms that combine traditional test automation with artificial intelligence and machine learning capabilities. Unlike conventional frameworks that follow rigid, predefined scripts, these intelligent systems learn from application behavior, adapt to changes, and make smart decisions about test execution.

Key capabilities include:

- Self-healing tests that automatically update when UI elements change

- AI-powered test generation from natural language descriptions

- Predictive analytics that forecast potential failure points

- Intelligent test prioritization based on risk and code changes

- Visual regression detection using computer vision

- Autonomous test maintenance that minimizes manual script updates

The core difference? Traditional frameworks tell the computer exactly what to do. AI-powered testing frameworks learn what needs to be done and adapt accordingly.

Why Testing Frameworks Matter More Than Ever

Software development velocity has accelerated dramatically. Many teams now deploy multiple times per day instead of shipping monthly releases. This speed creates a critical challenge: how do you maintain quality without slowing down?

Testing frameworks for AI tools solve this problem by:

Reducing test maintenance time by 70-80% through self-healing capabilities. When your button label changes from “Sign In” to “Log In,” the AI updates your tests automatically instead of letting them break.

Improving test coverage by 40-60% through intelligent test generation. AI can identify edge cases and generate test scenarios that human testers might miss.

Cutting testing time from hours to minutes. GE Healthcare reduced their testing time from 40 hours to just 4 hours using AI-powered frameworks—that’s a 90% time savings.

Lowering overall testing costs by 30-40% by reducing the need for specialized QA engineers to maintain brittle test scripts.

For startups like Marcus’s, this translates to shipping features faster, catching bugs earlier, and spending less on testing infrastructure.

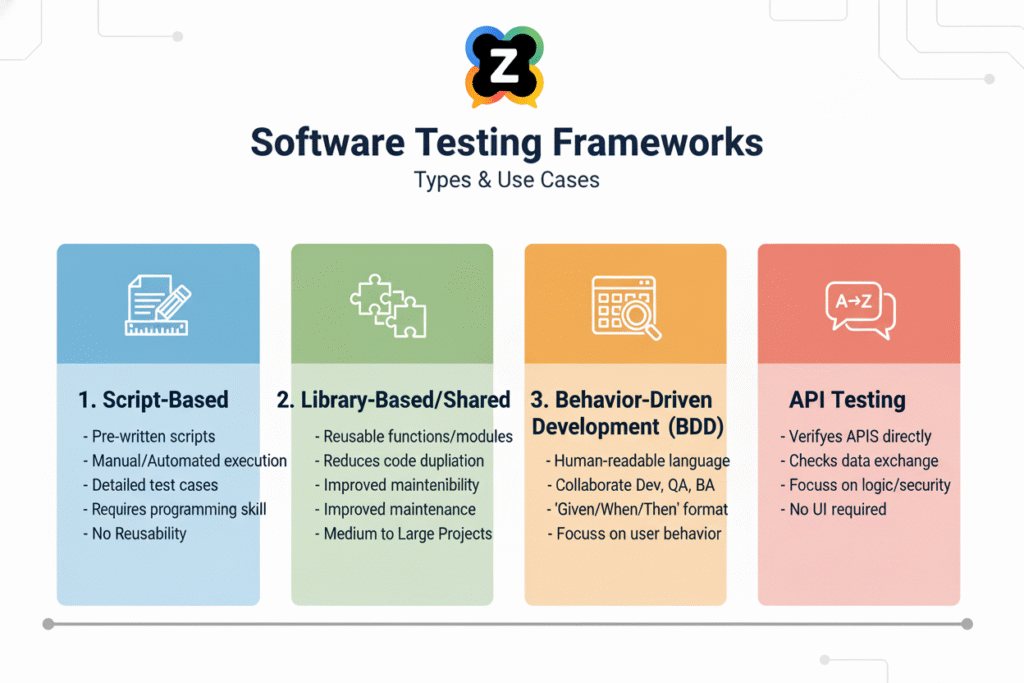

The Top Types of Testing Frameworks for AI Tools

Not all testing frameworks are created equal. Understanding the different types helps you match the right tool to your team’s needs.

1. AI-Assisted Test Creation Frameworks

These frameworks use generative AI to help you create tests faster. You describe what you want to test in plain English, and the AI generates the test script for you.

Best for: Teams transitioning from manual to automated testing, non-technical team members who need to contribute to QA.

Popular examples: Rainforest QA, Testim, Testsigma

2. Autonomous AI Testing Frameworks

These frameworks go beyond assistance—they autonomously create, execute, maintain, and optimize tests with minimal human intervention. They monitor your application, understand its behavior, and build test coverage automatically.

Best for: Complex applications that change frequently, teams that want to minimize test maintenance.

Popular examples: Functionize, Meticulous, Mabl

3. Open-Source AI Testing Frameworks

These frameworks provide flexibility and community support. They’re free to use but often require more technical expertise and ongoing maintenance.

Best for: Teams with strong technical capabilities, organizations that need customization, budget-conscious startups.

Popular examples: Selenium, Robot Framework, and Appium, usually extended with AI plugins or libraries

4. Visual AI Testing Frameworks

These frameworks specialize in detecting visual bugs and UI regressions using computer vision. They can spot pixel-level differences that traditional assertions miss.

Best for: E-commerce platforms, design-heavy applications, apps with complex UIs.

Popular examples: Applitools, Percy, Chromatic

How to Choose the Right Testing Framework for AI Tools

Choosing the right framework isn’t about picking the most expensive or popular option. It’s about matching capabilities to your specific needs.

Ask yourself these questions:

What’s your team’s technical skill level?

If your team lacks coding expertise, low-code or no-code frameworks like Testsigma or Rainforest QA make sense. If you have experienced developers, open-source frameworks like Selenium offer more flexibility and customization.

What types of applications are you testing?

Web applications need different frameworks than mobile apps. Desktop applications require yet another approach. Many teams need cross-platform capabilities that work across web, mobile, and API testing.

What’s your budget?

Open-source frameworks are free but have hidden costs in infrastructure and maintenance. Commercial solutions range from $249 to $500+ monthly but often reduce overall testing costs through efficiency gains.

How often does your application change?

If you’re deploying multiple times daily, self-healing capabilities become essential. Applications that rarely change can work with simpler frameworks.

What’s your current testing pain point?

Are you drowning in test maintenance? Look for strong self-healing features. Struggling with test creation? Prioritize AI-powered test generation. Need faster execution? Focus on parallel testing and cloud scalability.

The Hidden Cost Most Teams Miss

Here’s something most articles about testing frameworks for AI tools won’t tell you: the biggest cost isn’t the tool subscription—it’s tool sprawl.

Marcus’s startup was spending $800 a month across five different testing tools. But the real cost was context switching. His team wasted hours toggling between tools, reconciling different dashboards, and managing separate configurations.

This is where the unified platform approach changes everything.

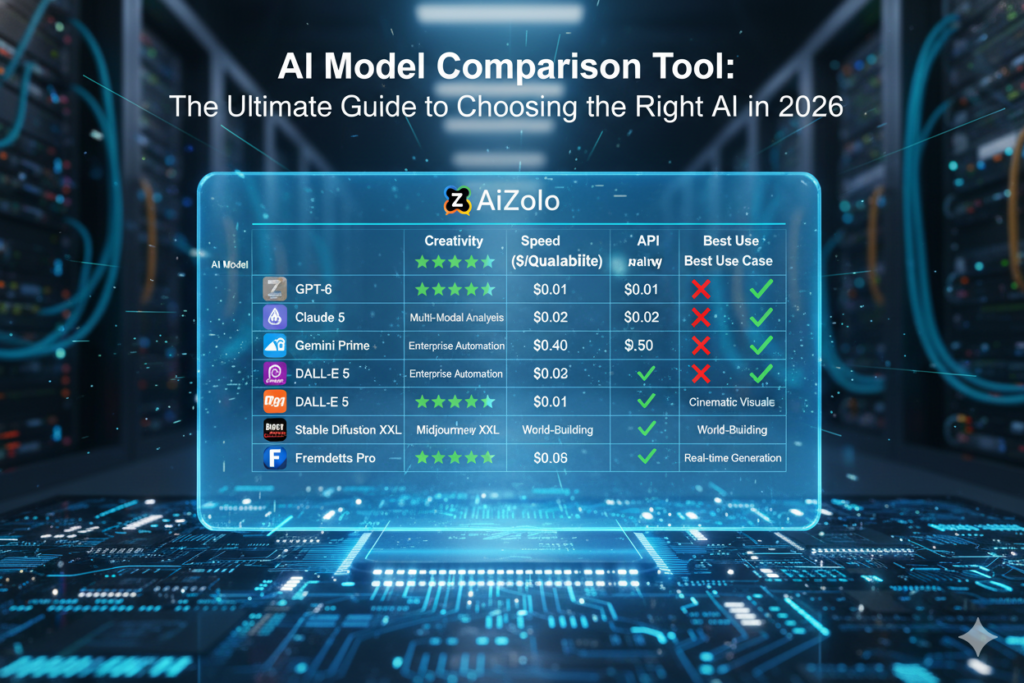

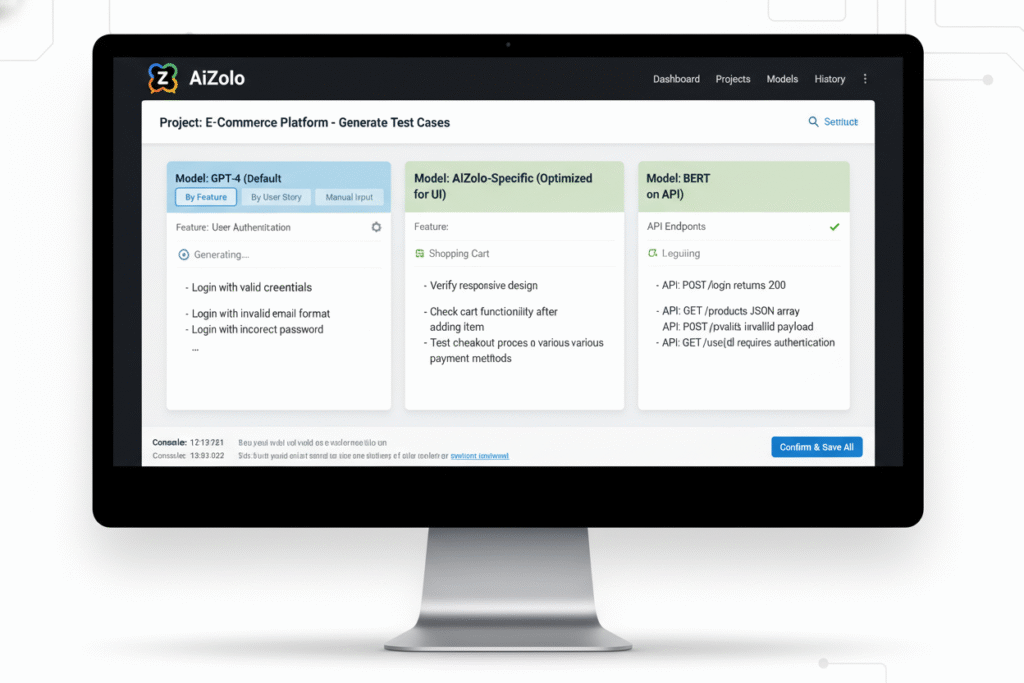

Instead of juggling multiple subscriptions for different AI tools, platforms like AiZolo offer a single subscription that gives you access to multiple AI models and tools in one place. You get ChatGPT, Claude, Gemini, and dozens of other AI capabilities—including AI tools perfect for generating test scenarios, analyzing test results, and optimizing testing workflows—all from one dashboard.

Here’s why this matters for testing:

When you’re evaluating testing frameworks for AI tools, you often need to compare how different AI models handle test generation. Does ChatGPT write better test scenarios than Claude? Which model catches edge cases more effectively? How do different AI assistants interpret your testing requirements?

With AiZolo’s side-by-side comparison feature, you can ask multiple AI models to generate test cases simultaneously and compare their outputs in real time. This helps you:

- Identify the best AI model for your specific testing needs

- Generate more comprehensive test scenarios by combining insights from multiple AIs

- Validate test strategies by getting diverse AI perspectives

- Save over $1,092 annually compared to maintaining separate AI subscriptions
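Combining insights from several models mostly comes down to merging overlapping suggestions. Below is a minimal sketch, assuming you have already collected each model’s scenarios as plain strings; the model names and scenarios are placeholders, and no AiZolo API is shown or implied.

```python
import re


def normalize(scenario: str) -> str:
    """Lowercase and collapse punctuation/whitespace so near-identical wording matches."""
    return re.sub(r"[^a-z0-9]+", " ", scenario.lower()).strip()


def merge_scenarios(outputs: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each unique scenario (first wording seen) to the models that proposed it."""
    wording: dict[str, str] = {}        # normalized key -> first wording seen
    merged: dict[str, list[str]] = {}   # first wording -> models proposing it
    for model, scenarios in outputs.items():
        for scenario in scenarios:
            label = wording.setdefault(normalize(scenario), scenario)
            models = merged.setdefault(label, [])
            if model not in models:
                models.append(model)
    return merged


# Placeholder outputs; in practice these come from whichever models you query.
outputs = {
    "model_a": ["Login with valid credentials", "Login with empty password"],
    "model_b": ["login with valid credentials.", "Login after 5 failed attempts"],
}
for scenario, models in merge_scenarios(outputs).items():
    print(f"{scenario}  <- {', '.join(models)}")
```

Scenarios proposed by several models are natural candidates to automate first; scenarios only one model suggested are where the diverse perspectives pay off, though they still need human review.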

Real-World Applications: How Teams Use Testing Frameworks

Let’s look at how different types of users leverage testing frameworks for AI tools in their workflows.

For Software Development Teams

Challenge: Maintaining test suites that break with every UI update, slowing down deployment cycles.

Solution: Implementing self-healing testing frameworks that automatically update tests when application elements change. Teams using these frameworks report an 80% reduction in test maintenance time and deploy 3x faster.

AI Integration: Using AiZolo to consult multiple AI models for test strategy optimization, identifying which tests to automate first based on risk analysis.

For Startups and Small Businesses

Challenge: Limited QA resources and budget constraints preventing comprehensive testing coverage.

Solution: Low-code testing frameworks that enable developers to create tests without specialized QA engineers. Combined with AI-powered test generation, startups achieve 60% better test coverage with the same resources.

AI Integration: Leveraging AiZolo’s access to 2000+ AI tools to supplement testing efforts—using AI for test data generation, bug report analysis, and documentation creation—all from one affordable subscription.

For QA Engineers

Challenge: Repetitive test maintenance consuming time that should be spent on exploratory testing and strategic quality work.

Solution: Autonomous testing frameworks that handle routine maintenance automatically, freeing QA engineers to focus on complex test scenarios and user experience validation.

AI Integration: Using AiZolo to compare different AI models’ approaches to test case optimization and regression test prioritization, ensuring the most efficient testing strategy.

For E-commerce Platforms

Challenge: Visual bugs and layout issues that slip through traditional testing and damage customer experience.

Solution: Visual AI testing frameworks that catch pixel-level differences across browsers and devices, ensuring consistent UI/UX.

AI Integration: Combining visual testing tools with AiZolo’s AI models to analyze visual test failures and suggest UI improvements based on industry best practices.

The 7 Essential Features of Modern Testing Frameworks

When evaluating testing frameworks for AI tools in 2025, look for these critical capabilities:

1. Self-Healing Test Scripts

Tests automatically update when application elements change, eliminating 70-80% of maintenance work.
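The core idea behind self-healing can be sketched as a fallback locator: record several ways to identify an element, and when the preferred one stops matching, use (and promote) one that still works. This is a minimal, rule-based sketch in which the page is modeled as a list of attribute dictionaries. Commercial tools query a live DOM and use learned similarity scoring, and the class and attribute names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Locator:
    name: str
    # (attribute, expected value) pairs recorded when the test was written,
    # most specific first
    candidates: list[tuple[str, str]]
    healed_with: Optional[tuple[str, str]] = None

    def find(self, page: list[dict[str, str]]) -> dict[str, str]:
        for i, (attr, value) in enumerate(self.candidates):
            for element in page:
                if element.get(attr) == value:
                    if i > 0:
                        # Primary selector failed: promote the fallback that
                        # matched so the next run tries it first ("healing").
                        self.candidates.insert(0, self.candidates.pop(i))
                        self.healed_with = (attr, value)
                    return element
        raise LookupError(f"no candidate locator matched for {self.name!r}")


login = Locator("login button", [
    ("id", "signin-btn"),
    ("data-testid", "login-button"),
    ("text", "Sign In"),
])
# After a redesign the id and label changed, but the test id survived.
page_after_redesign = [{"id": "login-btn", "data-testid": "login-button", "text": "Log In"}]
element = login.find(page_after_redesign)
print(element["text"], login.healed_with)  # Log In ('data-testid', 'login-button')
```

A production version should log every heal so a person can confirm it reflects an intentional UI change rather than a real bug being papered over.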

2. Natural Language Test Creation

Write tests in plain English instead of code: “Click the login button and verify the user lands on the dashboard.”

3. Intelligent Test Prioritization

AI analyzes code changes and runs high-risk tests first, reducing testing time by 50% while maintaining comprehensive coverage.
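Risk-based ordering can be illustrated with a hand-weighted score. This is a minimal sketch, assuming you have per-test coverage data and recent pass/fail history; the 0.7/0.3 weights are arbitrary, whereas AI-driven tools learn such weights from your own history.

```python
from dataclasses import dataclass


@dataclass
class TrackedTest:
    name: str
    covered_files: set[str]   # files the test exercises, e.g. from coverage data
    recent_failures: int      # failures in the last `recent_runs` runs
    recent_runs: int


def risk_score(test: TrackedTest, changed_files: set[str],
               w_change: float = 0.7, w_history: float = 0.3) -> float:
    """Weighted mix of 'touches changed code' and recent failure rate."""
    touches_change = 1.0 if test.covered_files & changed_files else 0.0
    failure_rate = test.recent_failures / test.recent_runs if test.recent_runs else 0.0
    return w_change * touches_change + w_history * failure_rate


def prioritize(tests: list[TrackedTest], changed_files: set[str]) -> list[str]:
    """Return test names ordered from highest to lowest risk."""
    ranked = sorted(tests, key=lambda t: risk_score(t, changed_files), reverse=True)
    return [t.name for t in ranked]


suite = [
    TrackedTest("checkout_flow", {"cart.py", "payment.py"}, 0, 20),
    TrackedTest("search_results", {"search.py"}, 4, 20),
    TrackedTest("profile_edit", {"profile.py"}, 0, 20),
]
print(prioritize(suite, changed_files={"payment.py"}))
# ['checkout_flow', 'search_results', 'profile_edit']
```

Because the suite is reordered rather than trimmed, coverage is unchanged; the time saved comes from surfacing likely failures earlier.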

4. Cross-Platform Testing

Execute tests across web, mobile, and desktop platforms without rewriting scripts for each environment.

5. Visual Regression Detection

Computer vision identifies UI changes and layout shifts that traditional assertions miss.
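The most basic form of visual regression checking is a pixel comparison with a noise tolerance. Here is a dependency-free sketch, assuming screenshots have already been decoded into grayscale 2D lists. Products marketed as visual AI replace this naive comparison with perceptual models that ignore rendering noise, and the thresholds below are arbitrary.

```python
Image = list[list[int]]  # grayscale screenshot: rows of 0-255 values


def diff_ratio(baseline: Image, current: Image, tolerance: int = 10) -> float:
    """Fraction of pixels whose intensity differs by more than `tolerance`."""
    if len(baseline) != len(current) or any(
        len(a) != len(b) for a, b in zip(baseline, current)
    ):
        raise ValueError("screenshots must have identical dimensions")
    total = sum(len(row) for row in baseline)
    if total == 0:
        return 0.0
    changed = sum(
        1
        for row_a, row_b in zip(baseline, current)
        for a, b in zip(row_a, row_b)
        if abs(a - b) > tolerance
    )
    return changed / total


def is_visual_regression(baseline: Image, current: Image, max_ratio: float = 0.01) -> bool:
    return diff_ratio(baseline, current) > max_ratio


baseline = [[255] * 4 for _ in range(4)]             # 16 white pixels
antialiased = [row[:] for row in baseline]
antialiased[0][0] = 250                              # within tolerance: ignored
moved_button = [row[:] for row in baseline]
moved_button[1][1] = moved_button[1][2] = 0          # 2 of 16 pixels changed
print(is_visual_regression(baseline, antialiased))   # False
print(diff_ratio(baseline, moved_button))            # 0.125
```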

6. Parallel Execution at Scale

Run thousands of tests simultaneously across browsers and devices in minutes instead of hours.
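On a single machine, the same idea can be sketched with Python’s standard-library thread pool. The checks below are hypothetical stand-ins that sleep to mimic waiting on a browser; cloud grids apply the same pattern across many machines and real browser sessions.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def simulated_ui_check(name: str, seconds: float = 0.2) -> tuple[str, bool]:
    """Stand-in for a browser test: sleeps to mimic I/O-bound waiting."""
    time.sleep(seconds)
    return name, True


def run_parallel(names: list[str], workers: int = 8) -> dict[str, bool]:
    """Run independent checks concurrently and collect name -> passed."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(simulated_ui_check, names))


start = time.perf_counter()
results = run_parallel([f"checkout_variant_{i}" for i in range(8)])
elapsed = time.perf_counter() - start
print(f"{len(results)} checks in {elapsed:.2f}s")
```

Eight 0.2-second checks should finish in roughly 0.2 seconds instead of about 1.6 seconds run serially. This only works when tests are independent: shared accounts, shared database rows, or ordering assumptions are the usual reasons parallel runs turn flaky.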

7. Seamless CI/CD Integration

Automated testing that fits naturally into your deployment pipeline, providing quality feedback within minutes of code commits.

Common Pitfalls When Implementing Testing Frameworks

Even the best testing frameworks fail if implemented incorrectly. Here are the mistakes to avoid:

Starting Too Big

Teams often try to automate everything immediately. Start with your most critical user flows—the ones that would block a release if broken. Expand coverage gradually.

Ignoring Test Maintenance Costs

A framework that costs $200 monthly but requires 40 hours of maintenance each month isn’t cheap. Factor in the total cost of ownership, including setup time, training, and ongoing maintenance.
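To make the total-cost point concrete, here is a small calculation. It assumes the 40 maintenance hours are per month and uses a hypothetical $75/hour loaded engineering rate; the $500, 8-hour comparison tool is invented for illustration.

```python
def monthly_tco(subscription: float, maintenance_hours: float,
                hourly_rate: float = 75.0, infrastructure: float = 0.0) -> float:
    """Sticker price plus infrastructure plus the labor spent keeping tests green."""
    return subscription + infrastructure + maintenance_hours * hourly_rate


# Hypothetical comparison: the $500 / 8-hour tool is an invented example.
cheap_but_brittle = monthly_tco(subscription=200, maintenance_hours=40)
pricier_self_healing = monthly_tco(subscription=500, maintenance_hours=8)
print(cheap_but_brittle, pricier_self_healing)  # 3200.0 1100.0
```

Under these assumptions the labor, not the subscription, dominates the bill, which is why maintenance hours belong in any framework comparison.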

Over-Trusting AI Without Validation

AI-generated tests need human review. The AI might miss business logic requirements or generate tests that pass but don’t actually validate critical functionality.

Forgetting About Test Data

Sophisticated frameworks mean nothing without quality test data. Invest time in creating realistic, diverse test datasets that cover edge cases.
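A common, non-AI starting point is boundary-value analysis plus a few seeded random values. This is a minimal sketch, assuming a hypothetical signup form with a 1-50 character name and an age that must be between 18 and 120.

```python
import random


def boundary_values(lo: int, hi: int) -> list[int]:
    """Classic boundary-value set: just outside, on, and just inside each edge."""
    return [lo - 1, lo, lo + 1, hi - 1, hi, hi + 1]


def name_cases(max_len: int = 50) -> list[str]:
    """Edge cases for a hypothetical 1-50 character name field."""
    return [
        "",                         # empty (should be rejected)
        "A",                        # minimum length
        "A" * max_len,              # maximum length
        "A" * (max_len + 1),        # one over the limit
        "José", "Zoë", "O'Brien",   # accents and apostrophes
        "  padded  ",               # leading/trailing whitespace
    ]


def random_ages(n: int, seed: int = 42) -> list[int]:
    """Seeded 'realistic' values so failures are reproducible across runs."""
    rng = random.Random(seed)
    return [rng.randint(18, 120) for _ in range(n)]


ages = boundary_values(18, 120) + random_ages(5)
names = name_cases()
```

Seeding the generator matters: a failure found with random data is only useful if the same data can be regenerated to reproduce it.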

Tool Sprawl Without Strategy

Adding more tools doesn’t automatically improve testing. Consolidate where possible. Instead of five separate subscriptions, consider unified platforms that reduce complexity.

How AI is Transforming Testing Frameworks

The integration of AI into testing frameworks represents a fundamental shift in software quality assurance. Here’s what’s actually working:

Predictive Failure Detection

Machine learning models analyze historical test data, code complexity, and defect patterns to predict where bugs are most likely to occur. This allows teams to focus testing efforts on high-risk areas before failures happen.
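Predictive models of this kind often start from signals such as recent code churn and past defect counts per file. Here is a crude, hand-weighted sketch under that assumption; the equal weights and sample numbers are invented, whereas ML-based tools fit the weights to your own history.

```python
def hotspot_scores(churn: dict[str, int], past_defects: dict[str, int],
                   w_churn: float = 0.5, w_defects: float = 0.5) -> list[tuple[str, float]]:
    """Rank files by normalized recent churn and historical defect count."""
    files = set(churn) | set(past_defects)
    max_churn = max(churn.values(), default=0) or 1      # avoid dividing by zero
    max_defects = max(past_defects.values(), default=0) or 1
    scores = {
        f: w_churn * churn.get(f, 0) / max_churn
        + w_defects * past_defects.get(f, 0) / max_defects
        for f in files
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


churn = {"payment.py": 420, "search.py": 60, "profile.py": 15}   # lines changed, last 30 days
past_defects = {"payment.py": 6, "search.py": 9, "profile.py": 0}
for path, score in hotspot_scores(churn, past_defects):
    print(f"{path}: {score:.2f}")
```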

Adaptive Test Generation

AI observes how users interact with your application and automatically generates test cases that mirror real usage patterns. This catches issues that traditional testing approaches miss because they test what developers think users do, not what users actually do.
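The “observe real usage” idea can be approximated without AI by counting which navigation paths users actually take. This is a minimal sketch, assuming session logs have already been reduced to ordered lists of page names (a hypothetical format); real tools also cluster similar paths and infer the inputs used along them.

```python
from collections import Counter


def top_paths(sessions: list[list[str]], k: int = 3) -> list[tuple[tuple[str, ...], int]]:
    """Count identical page sequences and return the k most common."""
    counts = Counter(tuple(s) for s in sessions if s)
    return counts.most_common(k)


# Hypothetical session log: one list of visited pages per user session.
sessions = [
    ["home", "search", "product", "cart", "checkout"],
    ["home", "product", "cart"],
    ["home", "search", "product", "cart", "checkout"],
    ["home", "account"],
    ["home", "product", "cart"],
    ["home", "search", "product", "cart", "checkout"],
]
for path, count in top_paths(sessions, k=2):
    print(count, " > ".join(path))
# 3 home > search > product > cart > checkout
# 2 home > product > cart
```

The second path, which skips search entirely, is the kind of flow a developer-written suite can easily miss.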

Intelligent Bug Classification

When tests fail, AI analyzes the failure, categorizes the issue, and may even suggest potential fixes. Vendors report that this can cut the time from test failure to resolution by as much as 60%.
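Automated triage can be approximated with ordered rules over the failure message. This is a deliberately simple, rule-based stand-in for the learned classifiers described above; the categories and regular expressions are assumptions, not any vendor’s taxonomy.

```python
import re

# Ordered rules: the first matching pattern wins.
RULES = [
    ("timeout", re.compile(r"timed? ?out|TimeoutException", re.IGNORECASE)),
    ("locator", re.compile(r"NoSuchElement|element not found|unable to locate", re.IGNORECASE)),
    ("assertion", re.compile(r"AssertionError|expected .* but (got|was)", re.IGNORECASE)),
    ("environment", re.compile(r"connection refused|\b503\b|\bDNS\b", re.IGNORECASE)),
]


def classify_failure(message: str) -> str:
    """Return the first matching category, or 'unclassified'."""
    for category, pattern in RULES:
        if pattern.search(message):
            return category
    return "unclassified"


print(classify_failure("NoSuchElementException: Unable to locate element: #login"))  # locator
```

Even this crude split is useful: locator failures point at test maintenance, assertion failures at possible product bugs, and environment failures at infrastructure.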

Continuous Learning

Every test execution improves the AI’s understanding of your application. Over time, the framework becomes more accurate at predicting failures, generating relevant tests, and identifying false positives.

Testing Frameworks and the Future of QA

Where is testing heading? The trends are clear:

Agentic AI Testing: The next evolution beyond self-healing is truly autonomous AI agents that don’t just fix tests—they plan testing strategies, make execution decisions, and optimize coverage without human guidance.

Shift-Left Testing: AI-powered frameworks are moving testing earlier in the development cycle. Developers get instant feedback on potential issues before code even reaches QA.

Behavior-Driven Testing: Instead of testing what the application does technically, AI frameworks increasingly test whether applications behave correctly from the user’s perspective.

Unified Quality Platforms: The future isn’t about having the best testing framework—it’s about having integrated platforms that combine testing, monitoring, analytics, and AI assistance in one place.

This is exactly what modern teams need: comprehensive solutions that reduce complexity while improving quality.

Making Testing Frameworks Work for Your Budget

Let’s talk about the elephant in the room: cost.

Testing frameworks for AI tools range from free (open-source) to thousands of dollars monthly for enterprise solutions. Here’s how to maximize value:

For Limited Budgets:

Start with open-source frameworks like Selenium and supplement them with AI capabilities through plugins and integrations. Use platforms like AiZolo ($49/month) to access AI assistance for test strategy and optimization without paying for multiple AI subscriptions.

For Growing Teams:

Invest in low-code platforms ($249-500/month) that reduce the need for specialized QA engineers. The savings in headcount costs (QA engineers command $100K+ salaries) quickly justify the tool investment.

For Enterprise Scale:

Commercial platforms ($1,000+/month) with autonomous capabilities deliver ROI through massive time savings. When you cut weekly testing time from 40 hours to 4, the cost-benefit calculation is straightforward.

The Hidden Savings:

Remember Marcus’s startup? After consolidating to a unified testing framework and using AiZolo for AI assistance instead of multiple subscriptions, they reduced tool costs from $800 to $298 monthly—a 63% reduction—while actually improving test coverage and speed.

Getting Started: Your Testing Framework Action Plan

Ready to implement or upgrade your testing framework? Here’s your step-by-step plan:

Week 1: Assess Your Current State

Document your existing testing process, identify pain points, and measure current metrics (test creation time, maintenance hours, deployment frequency, defect escape rate).
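Capturing the baseline in a structured form makes the Week 4 comparison mechanical. This is a minimal sketch; defect escape rate is computed with a common definition (production defects divided by all defects found before and after release), and the field names and numbers are placeholders.

```python
from dataclasses import dataclass


@dataclass
class QABaseline:
    hours_to_create_test: float
    maintenance_hours_per_week: float
    deploys_per_week: float
    defects_found_pre_release: int
    defects_found_in_production: int

    @property
    def defect_escape_rate(self) -> float:
        """Share of all known defects that were first found in production."""
        total = self.defects_found_pre_release + self.defects_found_in_production
        return self.defects_found_in_production / total if total else 0.0


def percent_change(before: float, after: float) -> float:
    """Relative change in percent; `before` must be non-zero."""
    return (after - before) / before * 100


# Placeholder numbers: measure your own in Week 1 and again after the pilot.
week1 = QABaseline(3.0, 12.0, 2, 45, 5)
pilot = QABaseline(1.5, 4.0, 5, 48, 2)
print(f"escape rate: {week1.defect_escape_rate:.0%} -> {pilot.defect_escape_rate:.0%}")
print(f"maintenance: {percent_change(week1.maintenance_hours_per_week, pilot.maintenance_hours_per_week):+.0f}%")
```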

Week 2: Define Requirements

Based on your assessment, list must-have features and nice-to-have capabilities. Prioritize based on your biggest pain points.

Week 3: Research and Compare

Evaluate 3-5 frameworks that match your requirements. Use resources like AiZolo’s blog on AI for market research to understand how AI can enhance your evaluation process.

Week 4: Pilot Testing

Choose your top framework and run a pilot with a small, critical project. Measure improvements against your baseline metrics.

Month 2: Gradual Rollout

If the pilot succeeds, gradually expand to other projects. Train your team and establish best practices.

Month 3+: Optimize and Scale

Continuously refine your testing strategy based on data. Leverage AI tools through platforms like AiZolo to optimize test coverage and identify areas for improvement.

Advanced Tips for Testing Framework Success

Once you’ve got the basics down, these advanced strategies take your testing to the next level:

Create a Test Strategy Matrix

Map test types (unit, integration, E2E) against application layers (UI, API, database). This ensures comprehensive coverage without redundant tests.
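The matrix itself can live in a small data structure that reports empty cells. This is a minimal sketch with placeholder counts; the three test types and three layers come from the paragraph, and everything else is illustrative.

```python
from itertools import product

TEST_TYPES = ["unit", "integration", "e2e"]
LAYERS = ["ui", "api", "database"]


def coverage_gaps(inventory: dict[tuple[str, str], int]) -> list[tuple[str, str]]:
    """List (test type, layer) cells that currently have no tests."""
    return [cell for cell in product(TEST_TYPES, LAYERS) if inventory.get(cell, 0) == 0]


# Placeholder counts of existing tests per cell.
inventory = {
    ("unit", "ui"): 120, ("unit", "api"): 340, ("unit", "database"): 25,
    ("integration", "api"): 60,
    ("e2e", "ui"): 18,
}
for test_type, layer in coverage_gaps(inventory):
    print(f"no {test_type} tests at the {layer} layer")
```

An empty cell is a prompt for a decision rather than automatically a gap (end-to-end tests rarely target the database directly, for example), while cells with very high counts are where to look for redundancy.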

Implement Test Data Management

Set up dedicated test data creation and maintenance processes. AI can help generate realistic test data that covers edge cases you haven’t considered.

Build Feedback Loops

Connect test failures directly to development tickets. When tests fail, automatically create issues with context, screenshots, and suggested fixes.
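Turning a failure into a ticket mostly means assembling a payload with enough context to act on. This is a minimal sketch; the field names are hypothetical, and sending the result to Jira, GitHub Issues, or another tracker would go through that tool’s own API, which is not shown here.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass
class Failure:
    test_name: str
    error: str
    commit: str
    screenshot_url: Optional[str] = None


def build_issue(failure: Failure, suggested_fix: Optional[str] = None) -> dict:
    """Assemble a tracker-agnostic issue payload from a test failure."""
    first_line = failure.error.splitlines()[0] if failure.error else ""
    # Stable fingerprint so repeated failures can update one ticket
    # instead of opening duplicates.
    fingerprint = hashlib.sha1(f"{failure.test_name}|{first_line}".encode()).hexdigest()[:12]
    body = [
        f"Test: {failure.test_name}",
        f"Commit: {failure.commit}",
        f"Error: {failure.error}",
    ]
    if failure.screenshot_url:
        body.append(f"Screenshot: {failure.screenshot_url}")
    if suggested_fix:
        body.append(f"Suggested fix (unverified): {suggested_fix}")
    return {
        "title": f"[test failure] {failure.test_name}: {first_line[:80]}",
        "body": "\n".join(body),
        "labels": ["test-failure", "automated"],
        "fingerprint": fingerprint,
    }
```

Marking AI-suggested fixes as unverified keeps the human review step the article recommends.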

Monitor Test Effectiveness

Track metrics like defect escape rate and test flakiness. AI-powered frameworks can help identify tests that aren’t providing value and should be retired.
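Flakiness can be measured directly from run history. This is a minimal sketch, assuming you log (test, commit, passed) for every run; a test is flagged when it both passed and failed on the same commit, which is one common working definition.

```python
from collections import defaultdict


def flaky_tests(runs: list[tuple[str, str, bool]]) -> dict[str, float]:
    """runs: (test_name, commit, passed). Returns test -> share of commits with mixed results."""
    outcomes: dict[str, dict[str, set[bool]]] = defaultdict(lambda: defaultdict(set))
    for test, commit, passed in runs:
        outcomes[test][commit].add(passed)
    result = {}
    for test, by_commit in outcomes.items():
        mixed = sum(1 for results in by_commit.values() if len(results) == 2)
        if mixed:
            result[test] = mixed / len(by_commit)
    return result


runs = [
    ("checkout_flow", "c1", True), ("checkout_flow", "c1", False), ("checkout_flow", "c2", True),
    ("search_results", "c1", True), ("search_results", "c2", False), ("search_results", "c2", False),
]
print(flaky_tests(runs))  # {'checkout_flow': 0.5}
```

Note that `search_results` is not flagged: it fails consistently on commit `c2`, which points to a real regression rather than a flaky test.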

Leverage Multiple AI Models

Use AiZolo’s multi-model access to get diverse perspectives on testing strategy. Different AI models excel at different aspects—combine their strengths for optimal results.

Overcoming Common Testing Framework Challenges

Even with the best tools, teams encounter obstacles. Here’s how to handle them:

Challenge: High False Positive Rate

Solution: Implement intelligent waits and dynamic locators. Use AI-powered frameworks that understand which changes are intentional versus actual bugs.
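An intelligent wait starts with polling for a condition instead of sleeping for a fixed time. Selenium users get this from `WebDriverWait` together with `expected_conditions`; the standard-library sketch below only illustrates the mechanism, and `wait_until` is a hypothetical helper name.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0, interval: float = 0.25) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(interval)


# Simulated element that only "renders" 0.3 s after the page starts loading.
ready_at = time.monotonic() + 0.3
element = wait_until(lambda: "login-button" if time.monotonic() >= ready_at else None, timeout=2.0)
print(element)  # login-button
```

Fixed sleeps are either too short (false failures on a slow run) or too long (wasted time on every run); polling avoids both.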

Challenge: Slow Test Execution

Solution: Prioritize tests based on risk and run them in parallel. Cloud-based frameworks can execute thousands of tests simultaneously, reducing total run time from hours to minutes.

Challenge: Difficulty Maintaining Tests

Solution: Choose frameworks with strong self-healing capabilities. Tests should automatically adapt to minor UI changes without human intervention.

Challenge: Limited QA Resources

Solution: Adopt low-code or no-code frameworks that enable developers to create tests without specialized QA knowledge. Supplement with AI tools for test generation and optimization.

Challenge: Integration with Existing Tools

Solution: Select frameworks with robust APIs and pre-built integrations for your CI/CD pipeline, project management tools, and communication platforms.

The Bottom Line on Testing Frameworks for AI Tools

Testing frameworks for AI tools have evolved from simple automation scripts to intelligent systems that learn, adapt, and optimize. The right framework can cut maintenance time by up to 80%, improve test coverage by up to 60%, and make deployment cycles up to 3x faster.

But here’s the real secret: success isn’t just about choosing the perfect framework. It’s about building an integrated testing strategy that combines smart tools, efficient processes, and the right AI assistance.

Whether you’re a startup like Marcus looking to consolidate tools and reduce costs, a QA engineer seeking to eliminate tedious maintenance work, or an enterprise team aiming to scale testing across global deployments, the principles remain the same:

- Start with your biggest pain point

- Choose frameworks that match your team’s skills and needs

- Leverage AI to augment human intelligence, not replace it

- Consolidate tools to reduce complexity and costs

- Continuously measure and optimize based on data

And when it comes to AI assistance for testing strategy, optimization, and analysis, consider platforms that give you access to multiple AI models without breaking the bank. Try AiZolo today and see how having ChatGPT, Claude, Gemini, and 2000+ AI tools in one subscription can transform your testing workflow—starting at just $49/month.

Ready to Transform Your Testing?

The future of software testing is intelligent, adaptive, and surprisingly accessible. Testing frameworks for AI tools have matured to the point where even small teams can achieve enterprise-grade quality assurance.

Don’t let outdated frameworks slow you down. Don’t let tool sprawl drain your budget. And definitely don’t let test maintenance consume time better spent building features.

Take the next step:

- Explore AiZolo’s all-in-one AI subscription – Get access to multiple AI models for testing strategy, optimization, and analysis

- Start a pilot project with a modern testing framework that matches your needs

- Read more insights on how AI is transforming quality assurance on AiZolo’s blog

The tools are ready. The frameworks are proven. The only question is: how much longer will you wait to upgrade your testing game?
