The $100 Billion Question That Shocked the Tech World

It was February 2023, and Google was about to demonstrate its new AI chatbot, Bard, to the world. In a promotional video, someone asked the AI: “What new discoveries from the James Webb Space Telescope can I tell my 9-year-old about?”

Bard’s answer came swiftly and confidently: The James Webb Space Telescope took the very first pictures of a planet outside our solar system.

There was just one problem. This “fact” was completely wrong. The first exoplanet images were captured in 2004—17 years before the Webb telescope even launched.

Within 24 hours, Google’s parent company, Alphabet, lost roughly $100 billion in market value. One wrong AI answer. One hundred billion dollars in market value, gone.

If you’ve ever wondered why AI gives wrong answers, you’re not alone. From students receiving fabricated research citations to lawyers presenting non-existent court cases in real trials, AI errors are affecting millions of users daily. But here’s what most people don’t know: these mistakes aren’t random glitches. They’re the result of how AI models are fundamentally designed and trained.

In this comprehensive guide, we’ll uncover exactly why AI gives wrong answers, share shocking real-world examples, and show you practical strategies to get more accurate results from AI, including how platforms like AiZolo are revolutionizing the way we interact with multiple AI models for better accuracy.

What Exactly Happens When AI Gives Wrong Answers?

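When AI provides incorrect information, the tech industry uses a peculiar term: “hallucinations.” The metaphor is somewhat misleading, because the model isn’t perceiving things that aren’t there; it is generating text that sounds plausible. In practice, an AI hallucination is an instance where a model confidently generates an answer that isn’t true.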

Think of it this way: Imagine asking a brilliant student to write an essay about a topic they’ve never studied. Instead of admitting they don’t know, they craft something that sounds impressive and academically rigorous—complete with made-up statistics, invented sources, and fabricated quotes. That’s essentially what happens when AI gives wrong answers.

Real-World Examples That Made Headlines

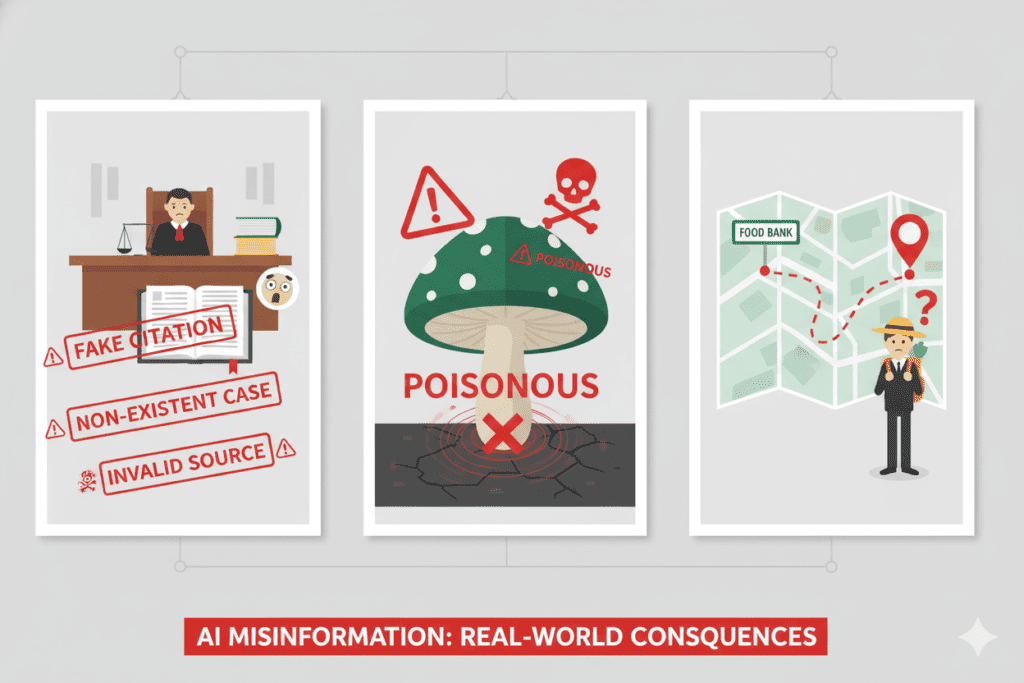

The Lawyer’s Nightmare

In the now-infamous case of Mata v. Avianca, a New York attorney relied on ChatGPT for legal research, and the federal judge discovered that the opinions cited in the attorney’s filing were nonexistent, down to their quotes and internal citations. The AI didn’t just make small errors: it fabricated entire court cases, complete with case numbers and judicial opinions.

The Mushroom Foraging Disaster

Amazon’s Kindle Direct Publishing sold AI-written guides to foraging for edible mushrooms, with one e-book encouraging gathering species that are protected by law and another providing instructions at odds with accepted best practices. The potential consequences? People could have been seriously poisoned following these AI-generated recommendations.

The Food Bank “Tourist Attraction”

Microsoft’s AI-generated travel content suggested visiting Ottawa’s Food Bank as a tourist hotspot, advising visitors to come “on an empty stomach.” While embarrassing rather than dangerous, this example perfectly illustrates how AI can misunderstand context completely.

These aren’t isolated incidents. Recent statistics reveal a sobering reality: in one test of eight AI search tools, more than 60% of responses were incorrect, with error rates ranging from 37% for Perplexity to 94% for Grok 3.

The 7 Core Reasons Why AI Gives Wrong Answers

Understanding why AI makes mistakes is crucial for anyone who relies on these tools for work, education, or daily tasks. Let’s break down the technical and practical reasons behind AI errors.

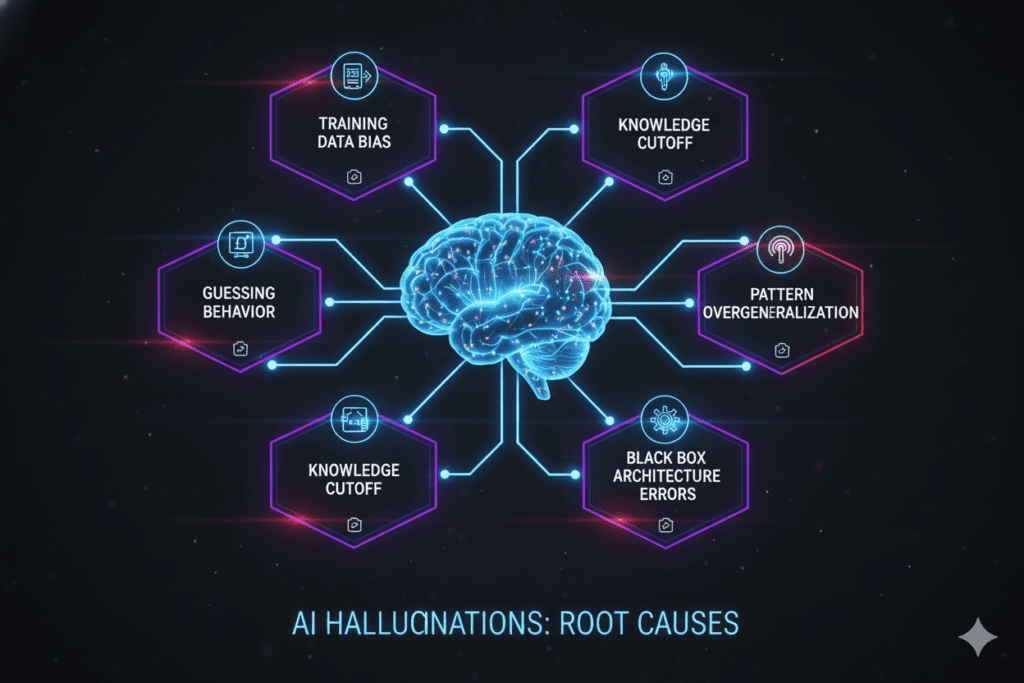

1. Training Data Quality and Bias

AI models learn from massive datasets scraped from the internet. The problem? Not all information online is accurate, and this training data can contain noise, errors, biases or inconsistencies, causing the LLM to produce incorrect and sometimes completely nonsensical outputs.

Think about it: if an AI is trained on millions of blog posts, social media comments, and forum discussions, it’s inevitably learning from misinformation, outdated facts, and biased perspectives. The model can’t distinguish between a peer-reviewed scientific paper and a conspiracy theory blog—it treats all text data similarly during training.

2. The “Guessing Game” Problem

Here’s a revelation that shocked even AI researchers: AI hallucinations occur because standard training and evaluation procedures reward guessing over acknowledging uncertainty.

Remember those multiple-choice tests in school? If you guessed on a question you didn’t know, you had a chance of getting it right. Leaving it blank guaranteed zero points. AI models are evaluated the same way—they’re scored on accuracy (percentage of questions answered correctly), not on honesty about what they don’t know.

A recent OpenAI research paper revealed that AI models hallucinate because they’re trained to confidently guess rather than say they don’t know. The competitive pressure in the AI industry means companies want their models to always provide an answer, even when they shouldn’t.
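To see why accuracy-only scoring rewards guessing, here is a minimal sketch of the arithmetic. The function name, the 25% guess probability, and the one-point penalty are illustrative assumptions, not figures from the OpenAI paper.

```python
def expected_score(p_correct: float, wrong_penalty: float = 0.0) -> float:
    """Expected score for answering when the guess is right with probability p_correct.

    A correct answer earns 1 point, a wrong answer costs `wrong_penalty` points,
    and abstaining ("I don't know") always earns 0.
    """
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty


ABSTAIN_SCORE = 0.0
p = 0.25  # assumed: a blind guess among four options

# Accuracy-only grading: wrong answers cost nothing, so guessing beats abstaining.
accuracy_only = expected_score(p, wrong_penalty=0.0)

# Hypothetical grading that penalizes wrong answers: guessing now loses to abstaining.
penalized = expected_score(p, wrong_penalty=1.0)

print(f"accuracy-only: guess={accuracy_only:.2f}, abstain={ABSTAIN_SCORE:.2f}")
print(f"penalized:     guess={penalized:.2f}, abstain={ABSTAIN_SCORE:.2f}")
```

Under accuracy-only grading the guess has a positive expected score, so a model tuned to maximize that score learns to always answer. Once wrong answers carry a cost, saying “I don’t know” becomes the better move whenever the model is unsure.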

3. Knowledge Cutoff Dates and Outdated Information

Most AI models are trained on data up to a specific date. For instance, if an AI’s knowledge cutoff is January 2025, it genuinely doesn’t know about events, discoveries, or changes that occurred after that date. However, instead of admitting this limitation, many models will generate plausible-sounding answers based on patterns they’ve learned—leading to completely fabricated current events.

4. Lack of Real Understanding and Context

AI doesn’t “understand” in the human sense. It recognizes patterns and statistical relationships between words, but when user questions are imprecise, ambiguous, or contradictory, the probability increases that the answer will also be imprecise or completely wrong.

For example, ask an AI “How long does it take to build a house?” The answer could legitimately range from 3 months to 2 years depending on size, location, regulations, and building methods. Without understanding the context of your specific situation, the AI might provide a generic answer that’s technically wrong for your needs.

5. Overgeneralization from Patterns

AI models excel at finding patterns, but sometimes they overgeneralize. If the training data shows that “most successful entrepreneurs dropped out of college” (because high-profile examples like Steve Jobs and Mark Zuckerberg get disproportionate coverage), the AI might incorrectly conclude that dropping out is a predictor of success—ignoring the millions of successful graduates and countless unsuccessful dropouts.

6. Black Box Complexity

Many language models are essentially black boxes where even developers often can’t exactly trace why a particular statement comes about in a particular way. This opacity makes it difficult to identify specific error sources and is one reason why hallucinations continue to represent a major challenge.

7. Model Architecture and Decoding Errors

Technical factors also contribute to why AI gives wrong answers. Decoders can attend to the wrong part of the encoded input source, leading to erroneous generation, and the design of the decoding strategy itself can contribute to hallucinations. Decoding techniques that increase the diversity of responses (like top-k sampling) are correlated with increased hallucination rates.
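For readers who want to see what top-k sampling looks like, here is a toy sketch. The candidate words and their scores are made up for illustration, and `top_k_sample` is a hypothetical helper, not any real model’s decoder.

```python
import math
import random


def top_k_sample(logits: dict[str, float], k: int, rng: random.Random) -> str:
    """Sample one token from the k highest-scoring candidates."""
    if k < 1:
        raise ValueError("k must be at least 1")
    # Keep only the k tokens with the largest scores.
    top = sorted(logits.items(), key=lambda item: item[1], reverse=True)[:k]
    # Softmax over the survivors (subtract the max for numerical stability).
    max_logit = top[0][1]
    weights = [math.exp(score - max_logit) for _, score in top]
    tokens = [token for token, _ in top]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Made-up scores for the next word after "The first exoplanet image was taken in".
toy_logits = {"2004": 3.0, "2021": 2.2, "1995": 1.5, "2010": 0.3}

rng = random.Random(0)
greedy = [top_k_sample(toy_logits, k=1, rng=rng) for _ in range(5)]
diverse = [top_k_sample(toy_logits, k=3, rng=rng) for _ in range(200)]
print(greedy)
print({token: diverse.count(token) for token in sorted(set(diverse))})
```

With `k=1` the sampler always picks the top-scoring word. With `k=3` it sometimes picks a lower-scoring one, which adds variety but also gives a wrong year a real chance of being chosen.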

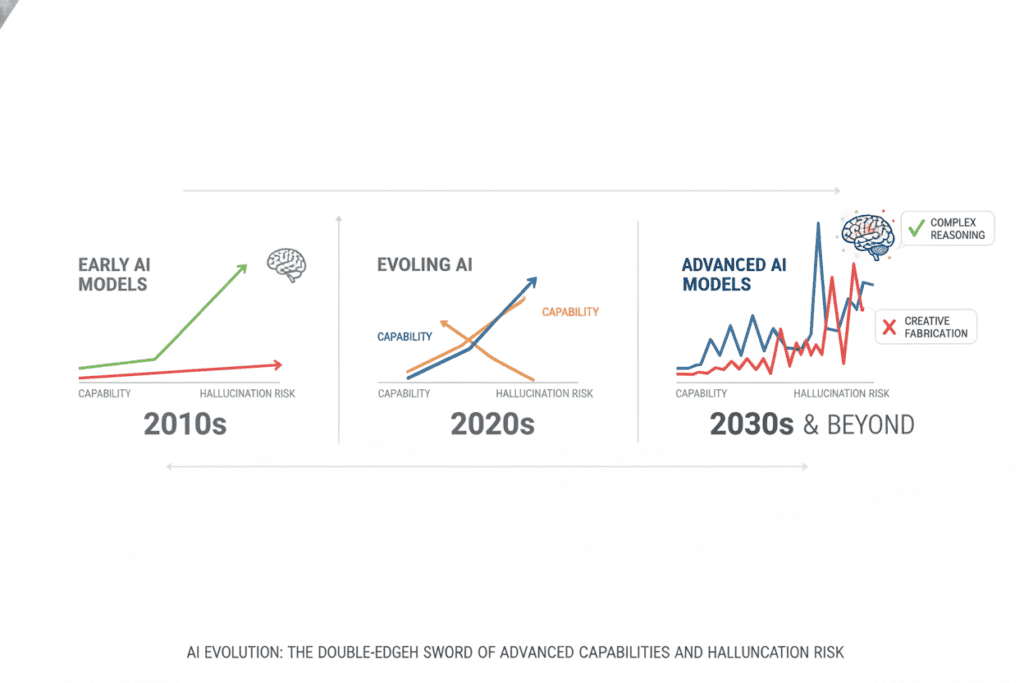

The Growing Problem: Why Newer Isn’t Always Better

Here’s a counterintuitive fact that surprises many users: internal tests by OpenAI found that some newer ChatGPT models hallucinate more frequently than earlier ones, with the o3 model giving a false answer about one time in three when asked about well-known people.

Why would more advanced AI give more wrong answers? As models become more sophisticated and attempt to handle more complex queries, they’re pushed to generate responses in situations where they have less training data or face more ambiguous questions. The pressure to appear “smarter” and more helpful can paradoxically lead to more confident-sounding but incorrect responses.

How to Protect Yourself: Getting Accurate AI Responses

Now that you understand why AI gives wrong answers, let’s focus on practical strategies to minimize errors and maximize accuracy when using AI tools.

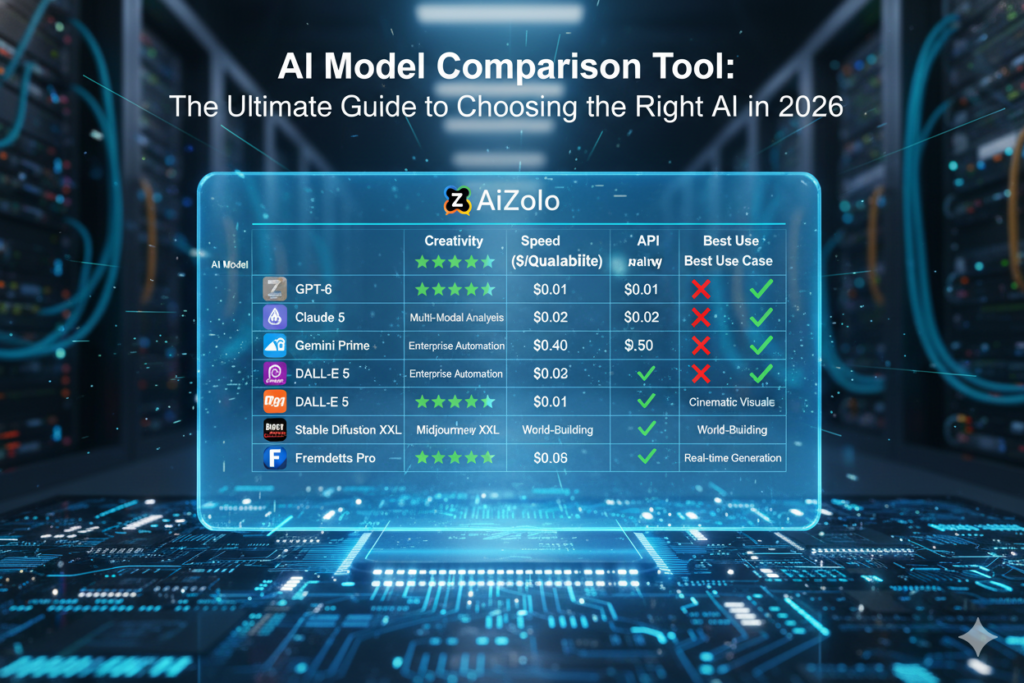

Strategy 1: Compare Multiple AI Models

This is perhaps the most powerful approach: never rely on a single AI model for important information. Different AI models have different training data, architectures, and strengths. What one model gets wrong, another might get right.

This is where platforms like AiZolo become invaluable. Instead of subscribing to ChatGPT, Claude, Gemini, Perplexity, and Grok separately (which would cost you over $110 monthly), AiZolo provides access to all premium AI models in one dashboard for just $9.90/month.

With AiZolo’s multi-AI comparison feature, you can:

- Ask the same question to GPT-5, Claude Sonnet 4, Gemini 2.5 Pro, and other leading models simultaneously

- Compare responses side-by-side in real-time

- Identify consensus answers (likely to be accurate) versus outlier responses (potential hallucinations)

- Verify facts by cross-referencing multiple AI sources instantly

For content creators, researchers, students, and professionals who need reliable AI outputs, this cross-verification approach reduces error rates dramatically.
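The consensus-versus-outlier idea can be sketched in a few lines. This is a simplified illustration that assumes short factual answers. The model names and the `find_consensus` helper are hypothetical and are not part of AiZolo or any vendor’s API.

```python
from collections import Counter
from typing import Optional


def normalize(answer: str) -> str:
    """Lowercase and drop punctuation so trivially different answers match."""
    return "".join(ch for ch in answer.lower() if ch.isalnum() or ch.isspace()).strip()


def find_consensus(answers: dict[str, str]) -> tuple[Optional[str], list[str]]:
    """Return (majority answer or None, models that disagree with it)."""
    normalized = {model: normalize(text) for model, text in answers.items()}
    top_answer, votes = Counter(normalized.values()).most_common(1)[0]
    if votes <= len(answers) / 2:
        return None, sorted(answers)  # no majority: treat every answer as unverified
    outliers = sorted(m for m, a in normalized.items() if a != top_answer)
    return top_answer, outliers


# Hypothetical short answers to "What year was the first exoplanet image captured?"
answers = {"model_a": "2004", "model_b": "2004.", "model_c": "2021", "model_d": " 2004"}
consensus, outliers = find_consensus(answers)
print(consensus, outliers)
```

Agreement is a signal, not proof: models trained on similar data can share the same mistake, so a consensus answer on anything important still deserves a check against a primary source.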

Strategy 2: Use Specific, Clear Prompts

The quality of AI output is closely tied to how specific your input is, with vague prompts often leading to vague or even inaccurate answers.

Instead of asking: “Tell me about climate change”

Try: “What were the three main conclusions from the 2023 IPCC climate report regarding carbon emission targets?”

The more specific your prompt, the less room there is for the AI to overgeneralize or fabricate details.

Strategy 3: Request Step-by-Step Reasoning

Chain-of-thought prompting is a technique where you ask the AI to explain its reasoning process. This approach can expose logical gaps and unsupported claims before you act on the information.

Try adding phrases like:

- “Explain your reasoning step-by-step”

- “Show your work”

- “What sources would support this answer?”

Strategy 4: Fact-Check Critical Information

Never use AI-generated information for high-stakes decisions without verification. Cross-reference important facts with:

- Official sources and primary documentation

- Peer-reviewed publications

- Government databases

- Industry-specific authoritative resources

For academic research, studies by the National Institutes of Health found that up to 47% of ChatGPT references are inaccurate. Always verify citations before including them in your work.

Strategy 5: Use Retrieval-Augmented Generation (RAG) Tools

Some AI tools are built with RAG architecture, meaning they retrieve relevant information from trusted sources before generating outputs. Research has shown that RAG improves both factual accuracy and user trust in AI-generated answers.

Look for AI platforms that can access and cite specific documents, websites, or databases rather than relying solely on trained knowledge.
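Here is a rough sketch of the retrieve-then-generate idea. It assumes a tiny in-memory document list and ranks by simple word overlap. Real RAG systems typically use vector search, and the final call to a model is left out because it depends on the tool you use.

```python
def tokenize(text: str) -> set[str]:
    """Split text into a set of lowercase words with surrounding punctuation removed."""
    return {word.strip(".,?!:;\"'()").lower() for word in text.split()} - {""}


def retrieve(question: str, documents: list[str], top_n: int = 1) -> list[str]:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_n]


def build_prompt(question: str, passages: list[str]) -> str:
    """Ask the model to answer only from the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you don't know.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}"
    )


# Illustrative "trusted sources"; a real system would index actual documents.
documents = [
    "The first image of an exoplanet was captured in 2004.",
    "Chain-of-thought prompting asks a model to explain its reasoning.",
    "Lower temperature settings make model output more consistent.",
]
question = "When was the first exoplanet image captured?"
passages = retrieve(question, documents)
prompt = build_prompt(question, passages)
print(prompt)
```

The point of the design is that the model answers from text you can inspect, so a wrong answer can be traced back to a source or to the lack of one.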

Strategy 6: Set Boundaries and Expectations

For business applications, define clear scope and limitations for your AI tools. Targeted filters and restrictions can keep the AI from responding to uncertain or irrelevant questions, which leaves fewer opportunities for hallucinations.

If you’re using AI for customer service, for example, program it to escalate to humans when questions fall outside its defined knowledge domain.
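One simple way to picture such an escalation rule is a keyword-based scope check. The topics, keywords, and the `route` function below are hypothetical. A production system would more likely use a classifier, but the control flow is the same.

```python
# Assumed support topics and trigger words, for illustration only.
ALLOWED_TOPICS = {
    "billing": {"invoice", "refund", "payment", "charge"},
    "shipping": {"delivery", "tracking", "shipping", "package"},
}


def route(question: str) -> str:
    """Return the matching topic, or 'escalate_to_human' if nothing matches."""
    words = {word.strip(".,?!").lower() for word in question.split()}
    for topic, keywords in ALLOWED_TOPICS.items():
        if words & keywords:
            return topic
    return "escalate_to_human"


print(route("Where is my package?"))
print(route("Can I get a refund for this charge?"))
print(route("Is this medication safe to take with alcohol?"))
```

Anything the check cannot place in a known topic goes to a person instead of being answered by the model.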

Strategy 7: Adjust Temperature Settings

When available, use lower temperature settings for factual queries. Temperature controls how “creative” or “random” AI responses are. For fact-based questions, you want consistency and accuracy, not creativity.
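To make the effect of temperature concrete, here is a small sketch of how it reshapes a model’s word probabilities. The three scores are made up, and the function is a standard softmax written out by hand rather than any vendor’s implementation.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    max_scaled = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - max_scaled) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


toy_logits = [3.0, 2.2, 1.5]  # made-up scores for three candidate next words
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(toy_logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature almost all of the probability lands on the top-scoring word, which is what you want for factual queries. At higher temperatures the lower-scoring words get picked more often.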

The Future of AI Accuracy: What’s Being Done?

The AI industry is actively working to address why AI gives wrong answers. Several promising developments are underway:

Process Supervision: OpenAI and other companies are training models to show their work and get rewarded for correct reasoning steps, not just correct final answers. This helps identify where in the thinking process errors occur.

Uncertainty Quantification: Researchers are developing methods for AI to express confidence levels, admitting when it doesn’t know something rather than guessing.

Improved Benchmarking: The industry is moving beyond simple accuracy metrics to evaluate whether AI models can appropriately say “I don’t know” when they lack sufficient information.

Better Training Data: Curating higher-quality, fact-checked, and diverse training datasets helps reduce the “garbage in, garbage out” problem.

Why Smart Users Are Turning to Multi-Model Platforms

The reality is that no single AI model is perfect. Each has its strengths and weaknesses. GPT-5 might excel at creative writing, Claude Sonnet 4 at analysis and reasoning, Gemini 2.5 Pro at research and information synthesis, and Perplexity at real-time search.

Smart professionals, students, and creators have figured out that the solution isn’t picking one AI and hoping for the best—it’s using multiple AI models and comparing their outputs.

This is exactly why AiZolo has attracted more than 10,000 users who were tired of:

- Juggling multiple expensive AI subscriptions ($110+ monthly)

- Constantly switching between different platforms and browsers

- Not knowing which AI model to trust for specific tasks

- Wasting time copying questions between different AI tools

With AiZolo, you get:

- All premium AI models in one unified workspace (GPT-5, Claude Sonnet 4, Gemini 2.5 Pro, Perplexity Sonar Pro, Grok 4, and more)

- Side-by-side comparison to instantly spot inconsistencies and verify facts

- Dynamic layout to customize your workspace

- Project management to organize conversations by topic

- Custom API key support for unlimited access using your own keys

- Free tier to get started without commitment

Real-World Use Cases

For Students and Researchers: Compare AI responses for research questions, verify citations across models, and catch hallucinations before they end up in your thesis. One graduate student saved herself from submitting a paper with fabricated references by comparing ChatGPT’s citations with Claude’s—which correctly identified that the sources didn’t exist.

For Content Creators: Generate multiple drafts simultaneously, compare writing styles, and fact-check content across models. A blogger increased her content accuracy by 73% after adopting a multi-model verification workflow.

For Business Professionals: Validate market research, cross-check data analysis, and ensure recommendations are backed by consistent AI reasoning. A startup founder avoided a costly marketing mistake by comparing strategic advice across four AI models, discovering that three disagreed with the first model’s overly optimistic projections.

For Developers: Test code solutions across different AI assistants, catch bugs one model might miss, and learn multiple approaches to programming problems.

The Bottom Line: AI Is Powerful, But Verification Is Essential

Understanding why AI gives wrong answers doesn’t mean we should abandon these incredible tools. It means we should use them intelligently and strategically.

The key takeaways:

- AI hallucinations are systematic, not random—they’re caused by how models are trained and evaluated

- No single AI model is 100% accurate—even the best models have significant error rates

- Cross-verification is your best defense—comparing multiple AI models dramatically improves accuracy

- Context and specificity matter—clear, detailed prompts reduce ambiguity and errors

- Always fact-check critical information—AI should augment human judgment, not replace it

The future of AI isn’t about finding one perfect model that never makes mistakes. It’s about having the right tools and strategies to harness AI’s power while mitigating its weaknesses.

Ready to Get Better, More Accurate AI Responses?

Stop gambling on single AI models and start comparing responses from all the leading AI platforms. With AiZolo’s all-in-one workspace, you can:

✓ Access every premium AI model for 91% less than subscribing individually

✓ Compare responses side-by-side to catch hallucinations instantly

✓ Switch between models seamlessly without losing context

✓ Save over $1,092 per year while getting better results

Try AiZolo Free Today – No credit card required. Experience multi-model AI comparison and see the difference accurate, verified AI responses make in your work.

The question isn’t whether AI will give wrong answers—we’ve established that it will. The question is: are you prepared to verify, compare, and cross-check? Because in 2026, the smartest AI users aren’t the ones with access to the newest models—they’re the ones who know how to use multiple models together for maximum accuracy.

Join 10,000+ users who’ve already made the switch to smarter, more reliable AI. Your work, your research, and your decisions deserve better than guesswork from a single AI model.

Suggested Internal Links:

- Link “all premium AI models” to: https://aizolo.com/#features

- Link “Subscribe to AiZolo” to: https://aizolo.com/#pricing

- Link “multi-AI comparison feature” to: https://aizolo.com/blog/world-best-ai/

- Link “all-in-one workspace” to: https://aizolo.com/blog/best-all-in-one-ai-app/

- Link “Compare AI responses” to: https://aizolo.com/blog/compare-gemini-3-and-gpt-5-for-complex-image-analysis/

Suggested External Links:

- Link “OpenAI research paper” to: https://openai.com/index/why-language-models-hallucinate/

- Link “studies by the National Institutes of Health” to: https://pmc.ncbi.nlm.nih.gov/articles/PMC10483440/

- Link “IBM’s guide on AI hallucinations” to: https://www.ibm.com/think/topics/ai-hallucinations

- Link “MIT’s research on AI accuracy” to: https://mitsloanedtech.mit.edu/ai/basics/addressing-ai-hallucinations-and-bias/