The artificial intelligence landscape has evolved dramatically over the past few years, transforming from a niche technological curiosity into an essential business tool that drives innovation across every industry. As organizations increasingly recognize AI’s potential to revolutionize operations, enhance customer experiences, and create competitive advantages, the challenge has shifted from whether to adopt AI to determining which AI solutions best fit specific needs. This is where AI comparison tools have become indispensable resources for businesses, developers, and technology enthusiasts seeking to navigate the complex ecosystem of artificial intelligence platforms, models, and services.

The proliferation of AI technologies has created an unprecedented variety of options, each with unique strengths, limitations, and ideal use cases. From large language models that power conversational interfaces to computer vision systems that enable autonomous vehicles, the diversity of AI solutions available today can be overwhelming. Decision-makers face the daunting task of evaluating numerous factors including performance metrics, pricing structures, integration capabilities, scalability potential, and ethical considerations. Without systematic approaches to comparison and evaluation, organizations risk making suboptimal choices that could impact their technological trajectory for years to come.

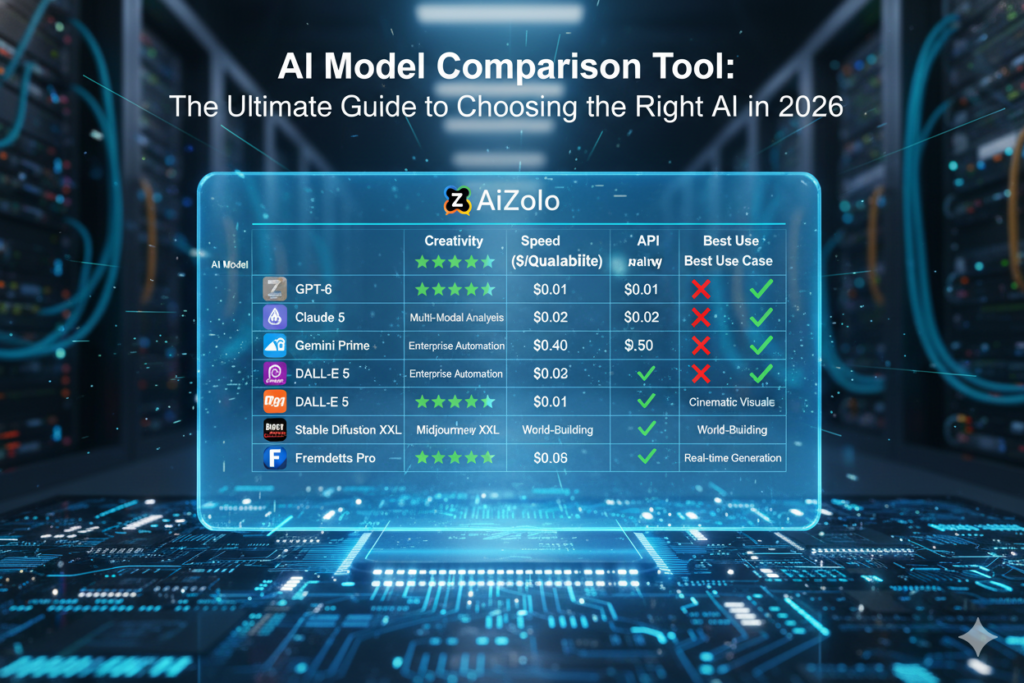

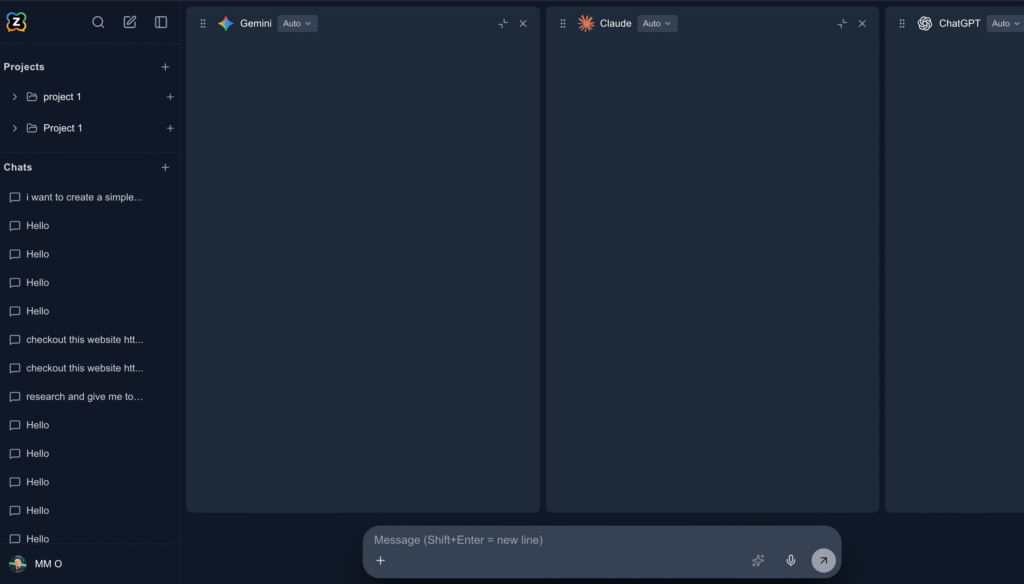


Understanding the AI Comparison Landscape

The modern AI ecosystem encompasses a vast array of technologies, platforms, and services that serve different purposes across the technological spectrum. Natural language processing models enable machines to understand and generate human language with remarkable sophistication, while computer vision systems interpret visual information with accuracy that often surpasses human capabilities. Machine learning platforms provide the infrastructure for building custom models, and pre-trained AI services offer ready-to-deploy solutions for common business challenges. Each category contains numerous options from established technology giants, innovative startups, and open-source communities, creating a rich but complex marketplace of possibilities.

The evolution of AI comparison tools reflects the growing maturity of the artificial intelligence industry. Early adopters relied primarily on academic papers, vendor documentation, and limited benchmarking data to make decisions. Today’s comparison tools offer sophisticated features including real-time performance testing, cost calculators, integration assessment frameworks, and community-driven reviews. These platforms have democratized access to critical information, enabling even small organizations to make informed decisions about AI adoption. The transformation has been particularly significant in making technical specifications accessible to non-technical stakeholders, bridging the gap between engineering teams and business decision-makers.

Understanding the fundamental differences between AI models and platforms requires examining multiple dimensions of capability and constraint. Processing speed and accuracy represent obvious metrics, but equally important considerations include training data requirements, computational resource needs, deployment flexibility, and ongoing maintenance demands. Some AI solutions excel in specific domains but struggle with general-purpose applications, while others offer broad capabilities at the expense of specialized performance. The context of implementation significantly influences which characteristics matter most, making one-size-fits-all comparisons inadequate for meaningful evaluation.

Key Features of Effective AI Comparison Tools

Comprehensive AI comparison tools must address the multifaceted nature of artificial intelligence evaluation by providing robust features that enable thorough assessment across multiple dimensions. Performance benchmarking stands as a cornerstone feature, offering standardized tests that measure speed, accuracy, and efficiency across comparable tasks. These benchmarks should span various difficulty levels and use cases, providing insights into how different AI solutions perform under diverse conditions. Advanced comparison tools go beyond simple metrics to offer contextual analysis that explains why certain models excel in specific scenarios while struggling in others.

Cost analysis represents another critical component of effective comparison platforms. The total cost of ownership for AI solutions extends far beyond initial licensing fees to encompass infrastructure requirements, training expenses, ongoing maintenance, and potential scaling costs. Sophisticated comparison tools provide detailed cost modeling that accounts for usage patterns, growth projections, and hidden expenses that might not be immediately apparent. For organizations considering Aizolo’s AI comparison platform, the ability to perform side-by-side cost analyses across multiple vendors can reveal significant savings opportunities and help justify investment decisions to stakeholders.

Integration capabilities assessment has become increasingly important as organizations seek to incorporate AI into existing technology stacks. Effective comparison tools evaluate not just technical compatibility but also the effort required for implementation, the availability of documentation and support resources, and the potential for future interoperability challenges. This includes examining API design quality, SDK availability, programming language support, and compliance with industry standards. The best comparison platforms provide practical insights into integration complexity, helping teams estimate implementation timelines and resource requirements accurately.

User experience and interface design considerations often receive insufficient attention in AI comparisons, yet they significantly impact adoption success and long-term satisfaction. Comparison tools should evaluate the quality of user interfaces, the intuitiveness of configuration options, and the accessibility of advanced features. This extends to examining developer experiences through API design, documentation quality, and debugging capabilities. Organizations benefit from understanding how different AI solutions balance power with usability, particularly when deployment involves users with varying technical expertise levels.

Types of AI Models and Platforms to Compare

The landscape of artificial intelligence encompasses numerous categories of models and platforms, each serving distinct purposes and offering unique capabilities. Large language models have captured significant attention recently, with offerings from OpenAI, Anthropic, Google, and others competing for dominance in natural language understanding and generation. These models vary significantly in their training data, parameter counts, fine-tuning capabilities, and specialized features. Comparison tools must help users understand not just raw performance differences but also factors like content filtering, bias mitigation strategies, and multilingual capabilities that influence real-world applicability.

Computer vision platforms represent another major category requiring careful comparison. Solutions range from general-purpose image recognition services to specialized systems for medical imaging, autonomous navigation, or industrial quality control. The evaluation criteria for computer vision systems include accuracy across different image types, processing speed, model size, and the ability to handle edge cases. Advanced comparison tools should address domain-specific requirements, such as compliance with medical imaging standards or performance under varying lighting conditions, that generic benchmarks might overlook.

Machine learning platforms and frameworks constitute the foundation for custom AI development, offering varying levels of abstraction and control. From comprehensive cloud-based solutions like Amazon SageMaker and Google Cloud’s Vertex AI (the successor to AI Platform) to open-source frameworks like TensorFlow and PyTorch, the options span a wide spectrum of complexity and capability. Effective comparisons must consider factors including ease of model development, training infrastructure scalability, deployment options, and ecosystem maturity. The choice between platforms often depends on organizational expertise, existing infrastructure investments, and specific project requirements.

Specialized AI services for specific business functions have proliferated as vendors recognize the value of domain-specific solutions. These include customer service chatbots, predictive analytics platforms, recommendation engines, and automated content generation tools. Comparing these specialized services requires understanding industry-specific requirements and evaluating how well each solution addresses particular business challenges. The assessment should consider not just technical capabilities but also factors like regulatory compliance, industry-specific integrations, and vendor expertise in relevant domains.

Methodology for Comparing AI Solutions

Establishing a systematic methodology for AI comparison ensures comprehensive evaluation and reduces the risk of overlooking critical factors. The process begins with clearly defining requirements and use cases, as the optimal AI solution varies dramatically depending on specific application needs. Organizations should document functional requirements, performance expectations, budget constraints, and timeline considerations before beginning comparisons. This foundational work prevents scope creep and ensures evaluation efforts remain focused on relevant criteria.

Creating a weighted scoring framework helps prioritize different evaluation criteria based on organizational priorities. While one company might prioritize cutting-edge performance above all else, another might value stability, support quality, or cost-effectiveness more highly. The scoring framework should reflect these priorities through appropriate weighting of different factors. Aizolo’s comparison tool facilitates this process by allowing users to customize evaluation criteria and adjust weightings to match their specific needs, ensuring personalized and relevant comparison results.
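A weighted scoring framework of this kind can be sketched in a few lines of Python. This is only an illustration: the criteria, the weights, the 0-10 score scale, and the vendor names are all hypothetical, and it does not describe how any particular comparison platform computes its results.

```python
def weighted_score(scores, weights):
    """Return the weighted average of per-criterion scores.

    `scores` and `weights` are dicts keyed by criterion name. Weights do not
    need to sum to 1; they are normalized by their total.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


# Hypothetical priorities for an organization that values performance most.
weights = {"performance": 0.4, "cost": 0.3, "support": 0.2, "integration": 0.1}

# Hypothetical scores on a 0-10 scale, e.g. gathered from an evaluation team.
candidates = {
    "vendor_a": {"performance": 9, "cost": 5, "support": 6, "integration": 7},
    "vendor_b": {"performance": 7, "cost": 8, "support": 8, "integration": 6},
}

# Rank candidates from highest to lowest weighted score.
ranking = sorted(
    candidates,
    key=lambda name: weighted_score(candidates[name], weights),
    reverse=True,
)

for name in ranking:
    print(name, round(weighted_score(candidates[name], weights), 2))
```

With these made-up numbers, vendor_b ranks first despite the lower performance score, because cost and support carry half of the total weight. Changing the weights changes the ranking, which is the point of making priorities explicit.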

Conducting proof-of-concept implementations provides invaluable insights that specifications and benchmarks alone cannot capture. These limited deployments reveal practical challenges, integration complexities, and performance characteristics under real-world conditions. The methodology should include clear success criteria for each proof of concept, systematic documentation of findings, and processes for gathering feedback from all stakeholders. This hands-on evaluation phase often uncovers critical factors that influence long-term success but might not appear in standard comparisons.

Incorporating multiple stakeholder perspectives ensures comprehensive evaluation that addresses diverse organizational needs. Technical teams focus on implementation details and performance characteristics, while business stakeholders prioritize ROI and strategic alignment. End users care most about usability and functionality, while compliance teams examine regulatory and security implications. Effective comparison methodologies gather and synthesize input from all relevant groups, creating a holistic view of each AI solution’s potential impact.

Performance Metrics and Benchmarks

Understanding and interpreting AI performance metrics requires familiarity with various measurement approaches and their implications for real-world applications. Classification metrics such as accuracy, precision, recall, and F1 score provide fundamental insights into model performance but must be interpreted within specific use case contexts. A model with slightly lower accuracy but significantly faster processing speed might be preferable for real-time applications, while batch processing scenarios might prioritize accuracy over speed. Comparison tools should present these trade-offs clearly, helping users understand the practical implications of different performance profiles.
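For readers less familiar with these metrics, here is a minimal sketch of how precision, recall, and F1 are computed for a binary classifier. The labels and predictions are invented for illustration, and the function name is an assumption of this example rather than part of any specific tool.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return precision, recall, f1


# Invented example: 4 positives and 4 negatives, with one miss and one false alarm.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```

Precision answers "of the items flagged positive, how many were right?", while recall answers "of the real positives, how many were found?". Which one matters more depends on whether false alarms or misses are costlier in the use case being evaluated.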

Latency and throughput measurements critically impact user experience and system design decisions. Response time requirements vary dramatically between applications, from milliseconds for autonomous vehicle decision-making to seconds or minutes for batch analytics processes. Throughput considerations become particularly important at scale, where small per-request differences multiply into significant operational impacts. Advanced comparison tools should provide latency distributions rather than just averages, revealing performance consistency and outlier behavior that affects reliability.
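The difference between an average and a distribution is easy to see with a small sketch. The latency samples below are invented, and the nearest-rank percentile is just one simple convention among several.

```python
import math
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of
    the data at or below it. `pct` is a number between 0 and 100."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Invented measurements: most requests are fast, two are very slow.
latencies_ms = [100] * 18 + [900, 1000]

mean_ms = statistics.mean(latencies_ms)
p50_ms = percentile(latencies_ms, 50)
p95_ms = percentile(latencies_ms, 95)
p99_ms = percentile(latencies_ms, 99)

print(f"mean={mean_ms} p50={p50_ms} p95={p95_ms} p99={p99_ms}")
```

In this made-up sample the mean is 185 ms, which describes no actual request: the typical request takes 100 ms, while the slowest 10% take 900 ms or more. Reporting p50, p95, and p99 side by side makes that tail visible.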

Resource utilization metrics including memory consumption, CPU usage, and storage requirements influence both deployment costs and architectural decisions. Some AI models achieve impressive performance through massive computational requirements that might be impractical for certain deployments. Others optimize for efficiency at the expense of peak performance. Understanding these trade-offs helps organizations select solutions that align with their infrastructure capabilities and budget constraints. Comparison platforms should clearly communicate resource requirements across different deployment scenarios and scaling levels.

Robustness and reliability metrics assess how AI systems perform under adverse conditions or when encountering unexpected inputs. This includes evaluating performance degradation with noisy data, handling of edge cases, and recovery from errors. These characteristics often prove more important than peak performance in production environments where stability and predictability are paramount. Comprehensive comparison tools should include stress testing results, failure mode analyses, and reliability statistics that inform risk assessment and mitigation planning.

Cost Considerations and ROI Analysis

The financial implications of AI adoption extend well beyond initial licensing or subscription fees, encompassing a complex web of direct and indirect costs that comparison tools must help organizations understand. Infrastructure costs for running AI workloads can dwarf software expenses, particularly for compute-intensive models requiring specialized hardware like GPUs or TPUs. Cloud-based deployments offer flexibility but can generate substantial ongoing expenses, while on-premise installations require significant upfront investment but may provide better long-term economics for consistent workloads. Effective comparison tools model these scenarios comprehensively, accounting for factors like data transfer costs, storage requirements, and backup needs.
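The cloud versus on-premise trade-off can be illustrated with a simple break-even sketch. All figures are hypothetical, and the model deliberately ignores factors such as hardware depreciation, discounting, and usage growth that a real cost model would include.

```python
def cumulative_cost(upfront, monthly, months):
    """Total spend after `months`, given an upfront cost and a flat monthly cost."""
    return upfront + monthly * months


def break_even_month(cloud_monthly, onprem_upfront, onprem_monthly, horizon_months=60):
    """Return the first month in which cumulative on-premise spend is no higher
    than cumulative cloud spend, or None if that never happens in the horizon."""
    for month in range(1, horizon_months + 1):
        onprem = cumulative_cost(onprem_upfront, onprem_monthly, month)
        cloud = cumulative_cost(0, cloud_monthly, month)
        if onprem <= cloud:
            return month
    return None


# Hypothetical figures: a steady workload costing 10,000 per month in the cloud,
# versus 120,000 of hardware upfront plus 4,000 per month to operate it.
month = break_even_month(
    cloud_monthly=10_000, onprem_upfront=120_000, onprem_monthly=4_000
)
print(month)
```

With these invented numbers the on-premise option catches up after 20 months. If the workload is short-lived or the cloud bill is lower than the on-premise running cost, the function returns None and the cloud option stays cheaper over the whole horizon.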

Training and customization expenses represent often-underestimated cost components that vary significantly across AI solutions. Some platforms require extensive fine-tuning to achieve acceptable performance for specific use cases, involving both computational costs and expert labor. Others offer pre-trained models that work effectively out-of-the-box but may lack the flexibility for specialized applications. The comparison process should evaluate the total cost of achieving desired performance levels, including data preparation, model training, validation, and ongoing refinement efforts.

Operational costs encompass the ongoing expenses of maintaining, monitoring, and updating AI systems in production. This includes personnel costs for teams managing AI infrastructure, licensing fees for supporting tools and platforms, and expenses related to compliance and governance requirements. Some AI solutions require specialized expertise that commands premium salaries, while others emphasize accessibility and ease of management. Understanding these operational implications helps organizations budget accurately and avoid unexpected expenses that could undermine ROI projections.

Return on investment calculations for AI initiatives must consider both quantifiable benefits and strategic value that may be harder to measure directly. Quantifiable benefits might include labor cost savings through automation, revenue increases from improved customer experiences, or cost reductions from optimized operations. Strategic benefits could encompass competitive advantages, improved decision-making capabilities, or enhanced innovation potential. Aizolo’s platform helps organizations model these various value streams, providing frameworks for comprehensive ROI analysis that supports investment decision-making.
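The quantifiable side of this analysis usually reduces to two simple figures, ROI and payback period. The sketch below uses invented amounts and covers only measurable benefits; strategic value would need to be assessed separately.

```python
import math


def roi(total_benefit, total_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def payback_months(initial_cost, monthly_net_benefit):
    """Whole months needed for monthly net benefit to cover the initial cost.
    Returns None when the monthly net benefit is not positive."""
    if monthly_net_benefit <= 0:
        return None
    return math.ceil(initial_cost / monthly_net_benefit)


# Hypothetical project: 300,000 total cost, 450,000 total quantified benefit,
# with benefits arriving at 25,000 per month net of running costs.
project_roi = roi(total_benefit=450_000, total_cost=300_000)
project_payback = payback_months(initial_cost=300_000, monthly_net_benefit=25_000)

print(f"ROI: {project_roi:.0%}, payback: {project_payback} months")
```

For these made-up inputs the ROI is 50% and the payback period is 12 months. Running the same calculation with pessimistic and optimistic benefit estimates gives stakeholders a range rather than a single number.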

Integration and Compatibility Factors

The success of AI implementation often hinges on how well new solutions integrate with existing technology ecosystems, making compatibility assessment a crucial component of comparison processes. API design and documentation quality significantly influence integration ease and long-term maintainability. Well-designed APIs with comprehensive documentation, code examples, and robust error handling reduce implementation time and ongoing support burden. Comparison tools should evaluate API completeness, consistency, versioning practices, and the availability of client libraries for popular programming languages.

Data format compatibility and transformation requirements can create significant integration challenges if not carefully evaluated. AI solutions may expect data in specific formats, require particular preprocessing steps, or generate outputs that need transformation before downstream consumption. Some platforms provide flexible data handling capabilities that accommodate various formats, while others impose rigid requirements that necessitate additional data pipeline development. Understanding these requirements early prevents costly surprises during implementation and helps accurately estimate integration effort.

Security and compliance integration represents a critical consideration for organizations operating in regulated industries or handling sensitive data. AI solutions must align with existing security architectures, support required authentication and authorization mechanisms, and comply with relevant regulations like GDPR, HIPAA, or industry-specific standards. This includes evaluating data residency options, encryption capabilities, audit logging features, and vendor security certifications. Comparison tools should highlight compliance-relevant features and help organizations assess whether specific AI solutions meet their regulatory requirements.

Scalability and performance integration concerns how well AI solutions adapt to changing demands and integrate with existing scaling strategies. Some platforms offer automatic scaling capabilities that seamlessly handle load variations, while others require manual intervention or additional infrastructure provisioning. The ability to distribute workloads across multiple instances, implement caching strategies, and optimize resource utilization affects both performance and cost at scale. Effective comparisons should examine scaling mechanisms, performance under load, and compatibility with existing infrastructure automation tools.

Popular AI Comparison Platforms and Tools

The market for AI comparison tools has evolved to include various platforms, each offering unique approaches to evaluation and selection. Comprehensive platforms like Aizolo provide side-by-side comparisons across multiple AI vendors and models, enabling users to evaluate options systematically across standardized criteria. These platforms typically offer features including performance benchmarks, cost calculators, user reviews, and detailed feature comparisons that facilitate informed decision-making. The best platforms continuously update their data to reflect the rapidly evolving AI landscape and provide tools for custom evaluation scenarios.

Specialized benchmarking platforms focus on specific aspects of AI performance, offering deep technical insights for particular use cases or model types. Academic institutions and research organizations often maintain these platforms, providing rigorous, peer-reviewed evaluation methodologies. While these specialized tools offer exceptional depth in their focus areas, they may lack the breadth needed for comprehensive business decision-making. Organizations benefit from combining insights from specialized benchmarks with broader comparison platforms to achieve thorough evaluation.

Community-driven comparison resources leverage collective knowledge and experience to provide real-world insights beyond vendor specifications and controlled benchmarks. Forums, user groups, and collaborative platforms enable practitioners to share implementation experiences, discuss challenges, and offer recommendations based on actual deployments. These resources prove particularly valuable for understanding practical considerations that formal evaluations might miss, such as vendor support quality, documentation accuracy, and long-term stability.

Vendor-provided comparison tools offer detailed information about specific platforms but require careful interpretation due to inherent biases. While these tools often provide valuable technical details and use case examples, organizations should validate vendor claims through independent sources and hands-on evaluation. The most useful vendor tools acknowledge limitations honestly, provide transparent methodologies, and facilitate fair comparisons with competitors rather than simply promoting their own solutions.

Best Practices for Using AI Comparison Tools

Maximizing the value derived from AI comparison tools requires adopting systematic approaches that ensure comprehensive evaluation while maintaining efficiency. Organizations should begin by establishing clear evaluation criteria aligned with business objectives, technical requirements, and strategic priorities. This foundation prevents scope creep and ensures comparison efforts remain focused on factors that genuinely impact success. Regular review and refinement of evaluation criteria help adapt to changing needs and emerging priorities.

Combining multiple comparison tools and methodologies provides more robust insights than relying on any single source. Different tools excel at evaluating different aspects of AI solutions, and triangulating findings across multiple platforms reduces the risk of overlooking critical factors. Organizations should blend quantitative metrics from benchmarking platforms with qualitative insights from user communities and hands-on evaluation through proofs of concept. This multi-faceted approach creates a comprehensive understanding that supports confident decision-making.

Involving diverse stakeholders throughout the comparison process ensures all perspectives receive appropriate consideration. Technical teams provide essential insights into implementation feasibility and architectural implications, while business stakeholders ensure alignment with strategic objectives and ROI expectations. End users offer valuable input about usability and functionality requirements, and compliance teams identify regulatory considerations. Creating structured processes for gathering and synthesizing stakeholder input prevents important concerns from being overlooked.

Documenting comparison findings and decision rationales creates valuable organizational knowledge that benefits future initiatives. Detailed documentation should capture not just final selections but also the evaluation process, key trade-offs considered, and factors that influenced decisions. This information proves invaluable for future AI initiatives, helping teams learn from past experiences and refine evaluation approaches. Documentation also provides transparency that builds stakeholder confidence and facilitates knowledge transfer as team members change.

Common Pitfalls in AI Model Comparison

Organizations frequently encounter several common pitfalls when comparing AI solutions, and awareness of these challenges helps avoid costly mistakes. Over-emphasis on single metrics like accuracy or speed without considering broader implications represents a frequent error. While impressive benchmark scores attract attention, they may not translate to real-world success if other factors like integration complexity, operational costs, or reliability issues undermine deployment. Effective comparison requires balancing multiple factors rather than optimizing for single dimensions.

Insufficient attention to total cost of ownership leads to budget overruns and failed initiatives when hidden costs emerge during implementation or operation. Organizations often focus on visible costs like licensing fees while underestimating expenses related to infrastructure, integration, training, and ongoing maintenance. Comprehensive cost analysis should include all direct and indirect expenses across the full lifecycle of AI deployment. Aizolo’s comparison platform helps organizations identify and quantify these various cost components through detailed TCO modeling.

Neglecting scalability considerations during initial evaluation creates problems when AI solutions must handle growing workloads or expanded use cases. Solutions that perform well in a proof of concept may struggle at production scale due to architectural limitations, licensing constraints, or resource requirements that increase non-linearly. Comparison processes should explicitly evaluate scalability characteristics including performance at various load levels, scaling mechanisms, and associated cost implications. This forward-looking perspective prevents the need for disruptive platform changes as requirements evolve.

Failing to account for organizational readiness and capability gaps results in implementation challenges that comparison tools alone cannot address. The best AI solution from a technical perspective may prove impractical if an organization lacks the expertise, infrastructure, or processes needed for successful deployment. Honest assessment of organizational capabilities should inform comparison criteria and influence solution selection. This includes evaluating available expertise, existing infrastructure, change management capabilities, and cultural readiness for AI adoption.

Future Trends in AI Comparison Tools

The evolution of AI comparison tools continues to accelerate, driven by rapid advances in artificial intelligence technology and growing sophistication in evaluation methodologies. Automated comparison capabilities powered by AI itself represent an emerging trend, with platforms beginning to use machine learning to identify relevant evaluation criteria, predict performance in specific use cases, and recommend optimal solutions based on organizational characteristics. These intelligent comparison systems promise to make evaluation more efficient and accessible while reducing the expertise required for effective assessment.

Real-time comparison capabilities that reflect the dynamic nature of AI development are becoming increasingly important as models and platforms evolve rapidly. Static benchmarks and periodic updates no longer suffice when AI capabilities change monthly or even weekly. Advanced comparison platforms are implementing continuous evaluation systems that automatically test new model versions, track performance changes over time, and alert users to significant developments that might influence selection decisions. This real-time approach ensures organizations always have access to current information for decision-making.

Integration of ethical and responsible AI considerations into comparison frameworks reflects growing awareness of AI’s societal impacts and regulatory requirements. Future comparison tools will increasingly evaluate factors like bias mitigation capabilities, explainability features, environmental impact, and alignment with ethical AI principles. These considerations are evolving from nice-to-have features to essential requirements as regulations strengthen and stakeholders demand responsible AI practices. Comparison platforms that effectively assess these dimensions will become invaluable for organizations navigating complex ethical and regulatory landscapes.

Personalization and contextualization capabilities that tailor comparisons to specific organizational needs and use cases represent another important trend. Rather than providing generic comparisons that users must interpret for their situations, advanced platforms will offer customized evaluations that account for unique requirements, constraints, and priorities. This might include industry-specific comparisons, role-based views, and recommendations based on similar organizations’ experiences. The goal is making comparison tools more actionable and directly relevant to specific decision-making contexts.

Case Studies: Successful AI Selection Using Comparison Tools

Examining real-world examples of organizations successfully using AI comparison tools provides valuable insights into effective evaluation approaches and common success factors. A major financial services firm recently used comprehensive comparison platforms including Aizolo to evaluate natural language processing solutions for customer service automation. By systematically comparing performance metrics, integration requirements, and compliance capabilities across multiple vendors, they identified a solution that reduced response times by 60% while maintaining regulatory compliance. The structured comparison process also revealed unexpected cost savings through optimal sizing and configuration recommendations.

A healthcare technology company leveraged AI comparison tools to select computer vision models for medical imaging analysis. The comparison process went beyond accuracy metrics to evaluate factors including FDA clearance or approval status, HIPAA compliance capabilities, and integration with existing picture archiving and communication systems (PACS). Through systematic evaluation using multiple comparison platforms, they identified a solution that not only met technical requirements but also accelerated regulatory approval through pre-validated components. The comparison process reduced evaluation time from months to weeks while providing confidence in the selection decision.

An e-commerce retailer used comparison tools to evaluate recommendation engine platforms for personalizing customer experiences. The evaluation process included performance benchmarks using their actual product catalog and customer data, cost modeling based on projected transaction volumes, and assessment of real-time processing capabilities. By comparing solutions across multiple dimensions, they selected a platform that increased conversion rates by 25% while keeping infrastructure costs within budget. The detailed comparison documentation also facilitated stakeholder buy-in and smooth implementation.

A manufacturing company employed AI comparison methodologies to select predictive maintenance solutions for industrial equipment. The comparison process evaluated not just prediction accuracy but also factors like edge deployment capabilities, integration with industrial protocols, and support for their specific equipment types. Through systematic comparison using specialized industrial AI evaluation tools, they identified a solution that reduced unplanned downtime by 40% and generated ROI within six months. The structured approach also identified complementary AI capabilities that expanded the initial project scope beneficially.

Building Your AI Comparison Framework

Developing an organizational framework for AI comparison ensures consistent, effective evaluation across multiple initiatives and evolving requirements. The framework should begin with clear governance structures that define roles, responsibilities, and decision-making processes for AI evaluation and selection. This includes establishing evaluation committees with appropriate technical and business representation, defining approval workflows, and creating escalation paths for addressing conflicts or challenges. Clear governance prevents evaluation paralysis while ensuring appropriate oversight and stakeholder involvement.

Standardized evaluation templates and checklists promote consistency while reducing effort for subsequent comparisons. These tools should capture common evaluation criteria while allowing flexibility for the unique requirements of specific initiatives. Templates might include standard performance benchmarks, cost modeling worksheets, integration assessment checklists, and risk evaluation frameworks. Organizations benefit from regularly updating these tools based on lessons learned and evolving best practices. AiZolo’s platform provides customizable templates that organizations can adapt to their specific needs and requirements.
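One way to make such a template concrete is a weighted scoring matrix. The sketch below is illustrative only: the criteria names, the weights, the 1-5 scoring scale, and the vendor names are all assumptions chosen for the example, not values from any particular platform.

```python
from dataclasses import dataclass

# Hypothetical criteria and weights; adjust per initiative. Weights must sum to 1.
WEIGHTS = {
    "performance": 0.4,
    "cost": 0.3,
    "integration": 0.2,
    "risk": 0.1,
}


@dataclass
class Candidate:
    name: str
    scores: dict  # criterion -> score on an assumed 1-5 scale (5 is best)


def weighted_score(candidate, weights=WEIGHTS):
    """Return the weighted sum of a candidate's criterion scores."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    missing = set(weights) - set(candidate.scores)
    if missing:
        raise ValueError(f"{candidate.name} is missing scores for: {sorted(missing)}")
    return sum(weights[c] * candidate.scores[c] for c in weights)


def rank(candidates, weights=WEIGHTS):
    """Sort candidates from highest to lowest weighted score."""
    return sorted(candidates, key=lambda c: weighted_score(c, weights), reverse=True)


# Made-up candidates for illustration.
candidates = [
    Candidate("Vendor A", {"performance": 5, "cost": 2, "integration": 4, "risk": 3}),
    Candidate("Vendor B", {"performance": 4, "cost": 4, "integration": 3, "risk": 4}),
]

for c in rank(candidates):
    print(f"{c.name}: {weighted_score(c):.2f}")
```

Keeping the weights in one shared structure means every evaluation committee scores against the same priorities, while individual initiatives can still pass their own weights when their requirements differ.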

Establishing partnerships with comparison platform providers and industry communities enhances access to information and expertise. These relationships provide early access to new evaluation capabilities, insights into emerging trends, and opportunities to influence platform development based on organizational needs. Active participation in user communities and industry forums also facilitates knowledge sharing and provides access to collective experiences that inform better decision-making. Building these relationships requires ongoing investment but generates substantial value through improved evaluation capabilities and reduced risk.

Creating feedback loops that capture lessons learned from AI implementations improves future comparison efforts. This includes documenting which evaluation criteria proved most predictive of success, which comparison tools provided the most valuable insights, and which factors were overlooked in initial assessments. Regular retrospectives after AI deployments should examine how well comparison predictions aligned with actual outcomes and identify opportunities for improving evaluation methodologies. This continuous improvement approach ensures comparison frameworks evolve alongside technological capabilities and organizational maturity.
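A retrospective of this kind can start with a simple comparison of what the evaluation predicted against what was observed after deployment. The following is a minimal sketch; the metric names and all figures are hypothetical and stand in for whatever an organization actually tracks.

```python
def prediction_gaps(predicted, actual):
    """Relative gap between actual and predicted value for each shared metric."""
    gaps = {}
    for metric, expected in predicted.items():
        # Skip metrics that were never measured or cannot be normalized.
        if metric not in actual or expected == 0:
            continue
        gaps[metric] = (actual[metric] - expected) / expected
    return gaps


# Hypothetical figures: estimates from the comparison phase vs. post-deployment data.
predicted = {"monthly_cost_usd": 10000, "p95_latency_ms": 200, "accuracy": 0.90}
actual = {"monthly_cost_usd": 12500, "p95_latency_ms": 190, "accuracy": 0.88}

gaps = prediction_gaps(predicted, actual)

# List the largest misses first so the retrospective focuses on them.
for metric, gap in sorted(gaps.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{metric}: {gap:+.1%}")
```

Metrics with consistently large gaps across several deployments are candidates for revised benchmarks or cost assumptions in the next version of the evaluation templates.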

Conclusion: Maximizing Value from AI Comparison Tools

The strategic importance of effective AI comparison continues to grow as artificial intelligence becomes increasingly central to organizational success. The complexity and rapid evolution of AI technologies make systematic comparison essential for navigating the vast landscape of available solutions. Organizations that develop robust comparison capabilities gain competitive advantages through better technology selection, faster implementation, and improved return on AI investments. The investment in comprehensive comparison processes pays dividends through reduced risk, avoided mistakes, and optimal solution selection.

Success with AI comparison tools requires combining technological evaluation with business strategy, organizational capabilities, and implementation realities. The best comparison approaches balance quantitative metrics with qualitative insights, technical requirements with business objectives, and current needs with future scalability. Organizations should view AI comparison as an ongoing capability rather than a one-time activity, continuously refining approaches based on experience and evolving requirements. This perspective ensures comparison capabilities mature alongside AI adoption maturity.

The future of AI comparison tools promises even greater sophistication and value as platforms incorporate advanced capabilities for automated evaluation, real-time updates, and personalized recommendations. Organizations should prepare for this evolution by building flexible comparison frameworks that can incorporate new tools and methodologies as they emerge. Staying current with comparison platform developments and industry best practices ensures a continued ability to make informed AI decisions in a rapidly evolving landscape.

For organizations seeking to enhance their AI comparison capabilities, AiZolo provides comprehensive side-by-side comparison tools that streamline evaluation across multiple AI platforms and models. The platform’s sophisticated features for performance benchmarking, cost analysis, and integration assessment enable systematic evaluation that supports confident decision-making. By leveraging advanced comparison tools and following the best practices outlined in this guide, organizations can navigate the complex AI landscape effectively and select solutions that deliver maximum value for their specific needs and objectives.

Additional Resources and References

To further enhance your AI comparison capabilities and stay informed about the latest developments in artificial intelligence evaluation, consider exploring these valuable external resources:

- The Stanford Institute for Human-Centered Artificial Intelligence provides research-based insights into AI capabilities and evaluation methodologies

- MIT Technology Review’s AI section offers analysis of emerging AI trends and technologies

- The Partnership on AI develops best practices for responsible AI evaluation and deployment

- Google’s AI Principles provide frameworks for evaluating AI solutions against ethical considerations

For hands-on AI comparison and evaluation, visit AiZolo to access powerful side-by-side comparison tools that streamline your AI selection process. The platform’s comprehensive features and continuous updates ensure you always have access to current information for making informed AI decisions that drive organizational success.