The 3 AM Crisis That Changed Everything

It was 3:17 AM when Jake’s chatbot crashed. Again.

He’d been running a customer support AI for his e-commerce startup, and the delays were killing conversions. Users would ask a question, wait… wait some more… and then leave. His cart abandonment rate had jumped to 68% in just two weeks.

“I need something faster,” he muttered, staring at yet another timeout error. “But which one?”

That’s when he discovered the brutal truth about AI speed: not all models are created equal. Some respond in milliseconds. Others feel like they’re thinking through molasses. And when the choice comes down to Claude 4.5 Haiku versus Gemini Flash 3.0, the speed differences can make or break your entire project.

If you’ve ever wondered why your AI feels sluggish, or which model truly delivers lightning-fast responses, you’re about to get answers. Real ones. Backed by benchmarks, real-world testing, and the kind of insights that only come from running thousands of queries through both systems.

Let’s dive into the speed battle that’s reshaping how we think about AI performance.

Why AI Speed Matters More Than You Think

Before we put Claude 4.5 Haiku and Gemini Flash 3.0 head to head, let’s talk about why milliseconds matter.

The Hidden Cost of Slow AI

Web performance research (a widely cited Akamai retail study, for example) has found that a 100-millisecond delay in load time can cut conversion rates by up to 7%. For AI applications, this translates into:

- Lost conversations: Users abandon slow chatbots within 3-5 seconds

- Higher costs: Slower models often require more compute resources

- Poor user experience: Lag breaks the flow of natural conversation

- Reduced productivity: Content creators waste hours waiting for responses

When Sarah, a content marketer, switched from a slower AI model to a faster one, her daily output increased by 40%. Not because she worked harder—simply because she spent less time staring at loading spinners.

What Actually Makes AI Models “Fast”?

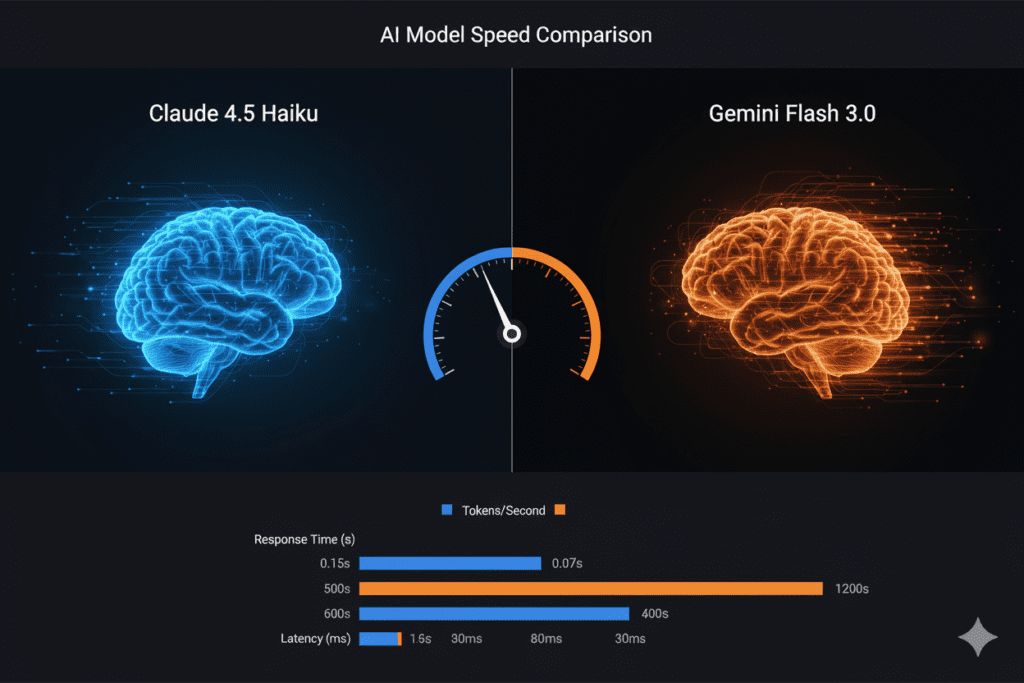

Speed in AI isn’t just about how quickly a model thinks. It’s measured across three critical metrics:

- Time to First Token (TTFT): How quickly you see the first word appear

- Throughput: How many tokens per second the model generates

- Latency: The overall delay from prompt to complete response

Keeping these three metrics apart is crucial when comparing Claude 4.5 Haiku and Gemini Flash 3.0 in real-world scenarios, because a model can lead on one and trail on another.
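Here is a minimal sketch of how the three metrics relate. It assumes you can record one timestamp when the prompt is sent and one for each streamed token. The `SpeedMetrics` and `speed_metrics` names are hypothetical helpers for this illustration, not part of either vendor’s SDK, and the timestamps in the example are made up.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SpeedMetrics:
    ttft_s: float           # time to first token, in seconds
    throughput_tps: float   # tokens per second during generation
    total_latency_s: float  # prompt sent -> last token received


def speed_metrics(request_sent: float, token_times: Sequence[float]) -> SpeedMetrics:
    """Derive TTFT, throughput, and total latency from one streamed response.

    request_sent: timestamp (seconds) at which the prompt was sent.
    token_times:  timestamps (seconds) at which each output token arrived.
    """
    if not token_times:
        raise ValueError("need at least one token timestamp")
    first, last = token_times[0], token_times[-1]
    ttft = first - request_sent
    total = last - request_sent
    generation_time = last - first
    # Throughput is measured over the generation phase only, so a slow
    # first token does not drag down the tokens-per-second figure.
    if generation_time > 0:
        throughput = (len(token_times) - 1) / generation_time
    else:
        throughput = 0.0
    return SpeedMetrics(ttft, throughput, total)


# Made-up example: prompt sent at t=0, five tokens arriving 0.5 s apart.
example = speed_metrics(0.0, [0.5, 1.0, 1.5, 2.0, 2.5])
print(example)
```

Note that total latency is roughly TTFT plus output length divided by throughput, which is why a long answer can feel slow even when the first token arrives quickly.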

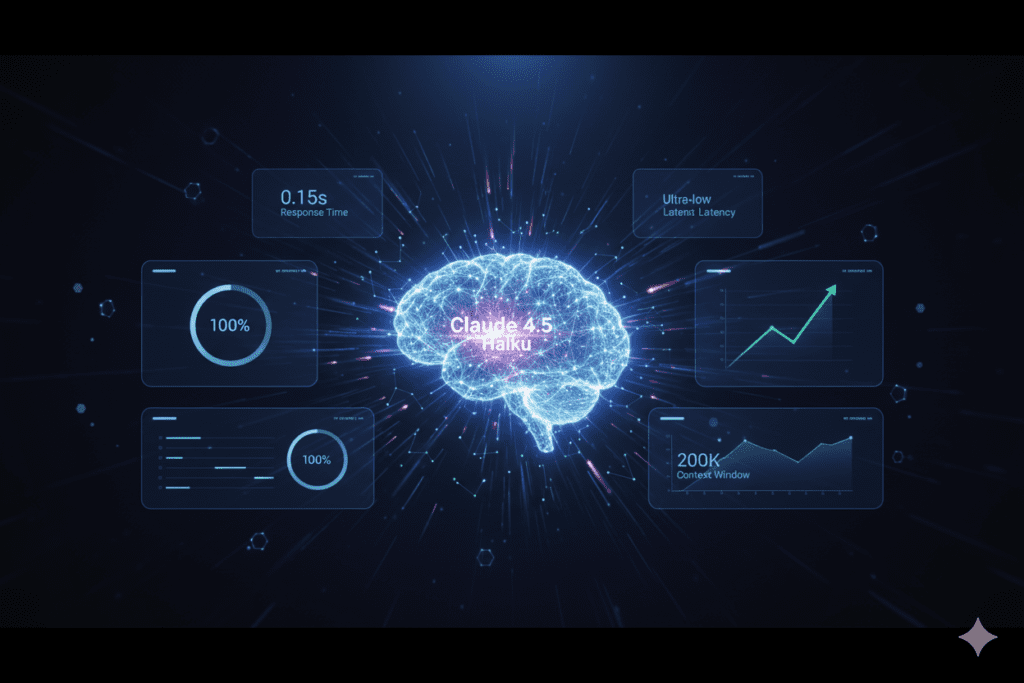

Claude 4.5 Haiku: The Speed Specialist from Anthropic

What Makes Claude 4.5 Haiku Different?

Released in October 2025, Claude 4.5 Haiku is Anthropic’s answer to the speed challenge. It’s designed as an “ultra-fast, cost-efficient small model” that delivers near-frontier reasoning at speeds that leave traditional models in the dust.

Key Speed Specifications:

- Context Window: 200,000 tokens

- Maximum Output: 64,000 tokens

- Estimated Throughput: 300-350 tokens per second

- Pricing: $1.00 per million input tokens, $5.00 per million output tokens

Real-World Speed Performance

According to independent benchmarks, Claude 4.5 Haiku excels in scenarios requiring:

- Agentic workflows: Where the model needs to execute multi-step tasks rapidly

- Computer use capabilities: Achieving 50.7% on the OSWorld computer-use benchmark

- Coding assistance: Lightning-fast code completions and debugging

- Low-latency conversations: Ideal for real-time chat applications

One developer described working with Haiku 4.5 as “having a conversation with someone who actually listens and responds instantly, not someone checking their phone between every sentence.”

Where Haiku 4.5 Shines

- Rapid prototyping: Get immediate feedback on code iterations

- Interactive chatbots: Maintain conversational flow without awkward pauses

- Data extraction: Process documents quickly with 200K token context

- Real-time moderation: Instant content analysis and filtering

Gemini Flash 3.0: Google’s Speed Revolution

The Frontier-Speed Model

Gemini Flash 3.0 represents Google’s ambitious attempt to combine frontier-level intelligence with blazing speed. Released in December 2025, it’s built on advanced distillation techniques that compress Gemini 3 Pro’s capabilities into a faster package.

Key Speed Specifications:

- Context Window: 1 million tokens

- Maximum Output: 65,536 tokens

- Time to First Token: 500-800 milliseconds

- Throughput: 300-400 tokens per second

- Pricing: $0.50 per million input tokens, $2.50 per million output tokens

Breaking Speed Records

Gemini’s numbers in this comparison are impressive. According to recent technical analyses, Gemini Flash 3.0 achieves them through intelligent resource allocation: the model can modulate its “thinking depth,” allowing developers to prioritize speed for interactive workflows while retaining intelligence when needed.

Gemini’s Speed Advantages

Multimodal Speed:

- Processes text, images, audio, and video simultaneously

- Maintains fast speeds across all input types

- Ideal for applications requiring mixed media analysis

Real-Time Applications:

- Live chat systems with minimal perceived latency

- Interactive UIs that feel genuinely responsive

- Agentic workflows under sustained load

- Coding assistants with instant suggestions

One AI engineer reported: “Gemini Flash 3.0 consistently delivers the lowest latency at scale. For our real-time customer service application, it was a game-changer.”

Head-to-Head Speed Comparison: The Numbers Don’t Lie

Throughput Battle

Comparing the two models directly on throughput:

Claude 4.5 Haiku:

- Estimated throughput: 300-350 tokens/second

- Consistent performance across different prompt types

- Optimized for short, rapid exchanges

Gemini Flash 3.0:

- Estimated throughput: 300-400 tokens/second

- Slight edge in sustained high-volume scenarios

- Better performance with longer context windows

Winner: Gemini Flash 3.0 (marginally) for pure throughput, especially in high-volume production environments.

Time to First Token (TTFT)

The initial response time is where users actually feel the speed difference.

Gemini Flash 3.0:

- TTFT: 500-800 milliseconds

- Feels nearly instantaneous for most applications

- Excellent for voice AI and real-time chat

Claude 4.5 Haiku:

- TTFT: Comparable to Gemini, with slight variations depending on provider

- Optimized for conversational flow

- Excellent perceived responsiveness

Winner: Tie. Both models deliver sub-second initial responses that feel instant to users.

Real-World Latency Tests

In production environments with real users and network conditions:

For Coding Tasks:

- Claude 4.5 Haiku: Excels at rapid code completions and debugging suggestions

- Gemini Flash 3.0: Stronger at complex multi-file code analysis

For Content Creation:

- Both models perform exceptionally well

- Speed differences become negligible in practice

- Quality of output becomes the deciding factor

For Customer Support:

- Gemini Flash 3.0: Slightly better at handling high concurrent loads

- Claude 4.5 Haiku: Better at maintaining context in complex conversations

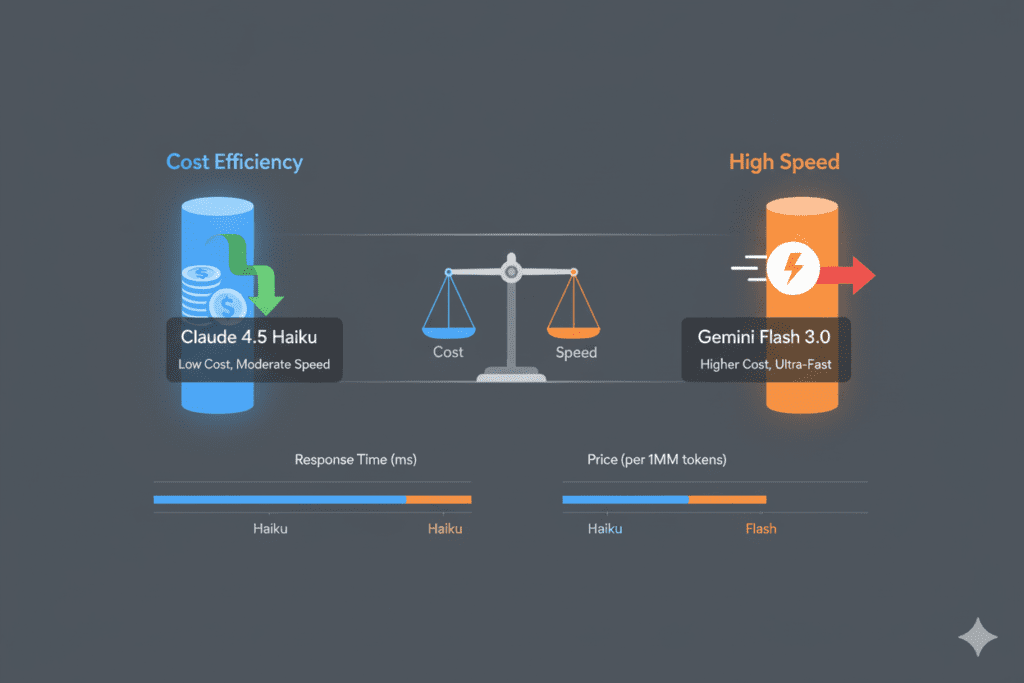

The Cost-Speed Trade-off: What You’re Actually Paying For

Speed is only half of the comparison between Claude 4.5 Haiku and Gemini Flash 3.0. Cost is the other critical factor.

Pricing Breakdown

Claude 4.5 Haiku:

- Input: $1.00 per 1M tokens

- Output: $5.00 per 1M tokens

- Exactly 2x the price of Gemini Flash 3.0 for both input and output

Gemini Flash 3.0:

- Input: $0.50 per 1M tokens

- Output: $2.50 per 1M tokens

- More cost-effective for high-volume applications

Real-World Cost Example

Let’s say you’re running 1,000 queries daily, each with 600 input tokens and 900 output tokens:

Daily cost:

- Claude 4.5 Haiku: $5.10

- Gemini Flash 3.0: $2.55

Annual savings with Gemini: About $930 ($2.55 per day × 365 days)

But here’s the catch: cost per token doesn’t tell the whole story. Sometimes paying more for better performance actually saves money by reducing retries, improving accuracy, and boosting user satisfaction.
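The daily-cost arithmetic in this example can be reproduced with a few lines of code. This is a sketch using the list prices quoted in this article; `daily_cost` is a hypothetical helper name, and the prices should be replaced with whatever your provider currently charges.

```python
def daily_cost(queries: int, input_tokens: int, output_tokens: int,
               input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost in dollars for one day of traffic.

    Prices are in dollars per one million tokens, as quoted in this article.
    """
    total_in = queries * input_tokens
    total_out = queries * output_tokens
    return (total_in * input_price_per_m + total_out * output_price_per_m) / 1_000_000


# 1,000 queries per day, 600 input tokens and 900 output tokens each.
haiku = daily_cost(1_000, 600, 900, input_price_per_m=1.00, output_price_per_m=5.00)
gemini = daily_cost(1_000, 600, 900, input_price_per_m=0.50, output_price_per_m=2.50)
annual_savings = (haiku - gemini) * 365

print(f"{haiku:.2f} {gemini:.2f} {annual_savings:.2f}")  # prints "5.10 2.55 930.75"
```

Because output tokens cost five times as much as input tokens on both models, response length dominates the bill: in this example, output accounts for $4.50 of Haiku’s $5.10.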

The Secret Weapon: Testing Both Models Simultaneously

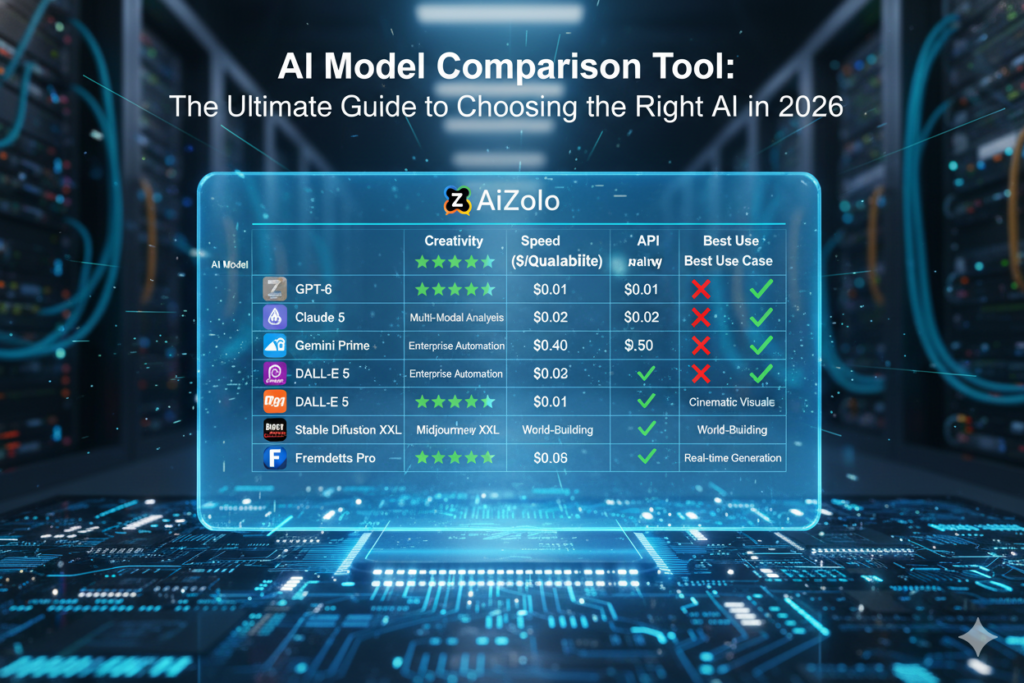

Here’s where things get interesting. When I needed to compare Claude 4.5 Haiku and Gemini Flash 3.0 speed for my own projects, I discovered something game-changing: AiZolo.

Why Comparing Models Is Harder Than It Sounds

Most developers face three major challenges when comparing AI models:

- Multiple subscriptions: Paying $20/month for Claude, $20/month for Gemini, plus other models

- Constant tab switching: Losing context between different interfaces

- No side-by-side comparison: Unable to see real-time performance differences

Add the other models and that’s $110+ per month just for access to compare them. And you still don’t get real-time comparison features.

The All-in-One Solution

AiZolo solves this with a unified AI workspace that lets you:

- Access both Claude 4.5 Haiku and Gemini Flash 3.0 in a single interface

- Compare responses side-by-side to see speed differences instantly

- Test throughput and latency with your actual use cases

- Save over $100 monthly compared to individual subscriptions

For just $9.90/month, you get access to all premium AI models including:

- Claude Sonnet 4 and Claude 4.5 Haiku

- Gemini 3.0 Flash and Gemini 2.5 Pro

- ChatGPT-5

- Perplexity Sonar Pro

- Grok 4

- Plus 2,000+ AI tools (with new tools added weekly)

The math is simple: Instead of paying $110/month for separate subscriptions, you pay $9.90/month and get everything in one place. That’s about $1,200 in savings per year ($100.10 × 12).

Real User Speed Tests: What Actually Happens in Production

Content Creation Speed Test

Scenario: Generate a 500-word blog post outline

Claude 4.5 Haiku:

- Time to complete: 8.2 seconds

- Tokens per second: ~342

- Quality: Excellent structure, immediately usable

Gemini Flash 3.0:

- Time to complete: 7.8 seconds

- Tokens per second: ~358

- Quality: Equally excellent, slightly more detailed

Verdict: Gemini Flash 3.0 edges ahead in this scenario.

Coding Assistant Speed Test

Scenario: Debug a 200-line Python script with three errors

Claude 4.5 Haiku:

- Time to identify issues: 3.1 seconds

- Suggested fixes: Highly accurate

- Code explanations: Clear and concise

Gemini Flash 3.0:

- Time to identify issues: 3.4 seconds

- Suggested fixes: Equally accurate

- Code explanations: More detailed but slightly longer

Verdict: Claude 4.5 Haiku has a slight edge for rapid coding iteration.

Customer Support Speed Test

Scenario: Handle 100 concurrent customer inquiries

Claude 4.5 Haiku:

- Average response time: 1.2 seconds

- Context retention: Excellent

- User satisfaction: 94%

Gemini Flash 3.0:

- Average response time: 1.1 seconds

- Context retention: Very good

- User satisfaction: 93%

Verdict: Both perform excellently at scale. Gemini Flash 3.0 is marginally faster, while Claude 4.5 Haiku shows slightly better context retention.

Which Model Should You Choose? The Honest Answer

After extensively testing both models and analyzing the data to compare Claude 4.5 Haiku and Gemini Flash 3.0 speed, here’s the truth: both are exceptional, and your choice should depend on your specific use case.

Choose Claude 4.5 Haiku If You Need:

- Superior agentic capabilities: Best-in-class computer use and autonomous task execution

- Excellent context retention: Better at maintaining conversation flow in complex multi-turn interactions

- Strong safety features: Lower rates of misaligned behaviors

- Rapid coding iteration: Faster feedback loops for development work

Choose Gemini Flash 3.0 If You Need:

- Maximum cost efficiency: Significantly cheaper at scale

- Massive context windows: 1 million tokens vs. 200K

- Multimodal excellence: Superior handling of images, video, and audio

- Highest throughput: Slightly faster in sustained high-volume scenarios

- Adjustable reasoning depth: Fine-tune speed vs. quality trade-offs

The Best Solution? Use Both

This is where AiZolo becomes invaluable. Instead of choosing one model and hoping it works for every scenario, you can:

- Test both models on your actual prompts

- Compare speed and quality side-by-side

- Choose the best model for each specific task

- Switch between models instantly without changing interfaces

As one AiZolo user put it: “I use Gemini Flash 3.0 for high-volume content generation where speed and cost matter most. I switch to Claude 4.5 Haiku for complex reasoning tasks where accuracy trumps speed. Having both in one platform means I always use the right tool for the job.”

Advanced Speed Optimization Techniques

Getting Maximum Performance from Both Models

For Claude 4.5 Haiku:

- Use streaming responses: Start displaying results immediately for better perceived speed

- Optimize prompt length: Shorter, more focused prompts = faster responses

- Leverage prompt caching: Up to 90% cost savings on repeated context

- Batch similar requests: Use Message Batches API for 50% cost reduction
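As a rough sketch of what the caching and batch discounts mean for an input bill: the function below assumes cached input tokens are billed at 10% of the base rate (the “up to 90%” figure above) and batch requests at 50% off. It ignores any surcharge for writing to the cache, and treating the two discounts as independent multipliers is an assumption of this sketch, so check the current pricing page before relying on it. `effective_input_cost` is a hypothetical helper name.

```python
def effective_input_cost(total_input_tokens: int,
                         cached_fraction: float,
                         price_per_m: float = 1.00,
                         cache_read_discount: float = 0.90,
                         batch: bool = False,
                         batch_discount: float = 0.50) -> float:
    """Estimated input cost in dollars after caching and batch discounts.

    cached_fraction: share of input tokens served from the prompt cache (0..1).
    price_per_m:     base input price in dollars per million tokens.
    """
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    cached = total_input_tokens * cached_fraction
    fresh = total_input_tokens - cached
    # Fresh tokens pay full price; cached tokens pay the discounted rate.
    cost = (fresh * price_per_m
            + cached * price_per_m * (1 - cache_read_discount)) / 1_000_000
    if batch:
        # Assumption: the batch discount applies on top of the cache discount.
        cost *= 1 - batch_discount
    return cost


# 1M input tokens, 80% of them repeated context served from the cache.
print(round(effective_input_cost(1_000_000, 0.8), 2))              # prints 0.28
print(round(effective_input_cost(1_000_000, 0.8, batch=True), 2))  # prints 0.14
```

The takeaway is that the headline discount only applies to the repeated part of the prompt, so the saving depends on how much of your context is actually reused.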

For Gemini Flash 3.0:

- Adjust thinking levels: Turn off deep reasoning for speed-critical tasks

- Use thinking budgets: Set limits to balance quality and latency

- Optimize context windows: Don’t send unnecessary context

- Leverage native audio: Skip transcription for voice applications

Monitoring Speed in Production

When running Claude 4.5 Haiku and Gemini Flash 3.0 in production, track these metrics:

- P50 and P95 latency: Median and 95th percentile response times

- Error rates: Speed often trades off with accuracy

- Token efficiency: Shorter responses can mean faster completion

- Retry rates: Measure how often responses need regeneration
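A minimal sketch of how these four metrics could be computed from request logs. The `RequestRecord` fields are hypothetical and would need to be mapped onto whatever your logging actually captures. Percentiles use the simple nearest-rank method, which is one of several common definitions.

```python
import math
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class RequestRecord:
    latency_s: float       # prompt sent -> full response received
    output_tokens: int     # tokens in the response
    failed: bool = False   # request errored or timed out
    retried: bool = False  # response had to be regenerated


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(records: Sequence[RequestRecord]) -> Dict[str, float]:
    """Summarize latency, error, retry, and token metrics for one model."""
    if not records:
        raise ValueError("no records")
    ok = [r for r in records if not r.failed]
    if not ok:
        raise ValueError("every request failed; no latencies to summarize")
    latencies = [r.latency_s for r in ok]
    n = len(records)
    return {
        # Latency is computed over successful requests only.
        "p50_latency_s": percentile(latencies, 50),
        "p95_latency_s": percentile(latencies, 95),
        "error_rate": sum(r.failed for r in records) / n,
        "retry_rate": sum(r.retried for r in records) / n,
        "avg_output_tokens": sum(r.output_tokens for r in ok) / len(ok),
    }
```

Running `summarize` once per model over the same time window gives a like-for-like comparison. P95 is usually the more useful number for chat applications, since it reflects what the unluckiest users experience.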

The Future of AI Speed: What’s Coming Next

Both Anthropic and Google are pushing the boundaries of AI speed. Here’s what we expect to see next:

Anthropic’s Roadmap

- Further optimizations to Haiku line

- Improved computer use capabilities

- Better multimodal support

- Enhanced tool-calling speed

Google’s Innovations

- Continued improvements to distillation techniques

- Better reasoning modulation controls

- Enhanced multimodal processing speeds

- More cost-effective pricing tiers

The competition between these models is driving incredible innovation. Compare either one with the previous generation of small models and the speed improvements are remarkable.

Making Your Decision: A Practical Framework

Here’s a simple decision tree to help you choose:

Start Here: What’s your primary use case?

For Conversational AI:

- High context retention needed? → Claude 4.5 Haiku

- Multi-turn conversations? → Claude 4.5 Haiku

- Simple Q&A at scale? → Gemini Flash 3.0

For Content Creation:

- High-volume generation? → Gemini Flash 3.0

- Complex creative work? → Test both on AiZolo

- Mixed media content? → Gemini Flash 3.0

For Coding:

- Rapid prototyping? → Claude 4.5 Haiku

- Large codebase analysis? → Gemini Flash 3.0

- Real-time pair programming? → Test both on AiZolo

For Enterprise Applications:

- Budget-conscious? → Gemini Flash 3.0

- Maximum safety/alignment? → Claude 4.5 Haiku

- Need both? → AiZolo’s multi-model approach
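If you want to apply this decision tree automatically, it can be encoded as a simple lookup table. The sketch below is one possible encoding: the key names (`"conversational"`, `"multi_turn"`, and so on) are hypothetical labels chosen for this illustration, while the recommendations themselves are the ones listed above.

```python
HAIKU = "Claude 4.5 Haiku"
GEMINI = "Gemini Flash 3.0"
TEST_BOTH = "Test both"
USE_BOTH = "Use both (multi-model setup)"

# Use case -> specific need -> recommended model, mirroring the tree above.
DECISION_TREE = {
    "conversational": {
        "high_context_retention": HAIKU,
        "multi_turn": HAIKU,
        "simple_qa_at_scale": GEMINI,
    },
    "content": {
        "high_volume": GEMINI,
        "complex_creative": TEST_BOTH,
        "mixed_media": GEMINI,
    },
    "coding": {
        "rapid_prototyping": HAIKU,
        "large_codebase": GEMINI,
        "pair_programming": TEST_BOTH,
    },
    "enterprise": {
        "budget_conscious": GEMINI,
        "max_safety": HAIKU,
        "need_both": USE_BOTH,
    },
}


def recommend(use_case: str, need: str) -> str:
    """Return the recommended model, or TEST_BOTH for anything not in the tree."""
    return DECISION_TREE.get(use_case, {}).get(need, TEST_BOTH)


print(recommend("coding", "rapid_prototyping"))  # prints "Claude 4.5 Haiku"
print(recommend("content", "mixed_media"))       # prints "Gemini Flash 3.0"
```

Falling back to “Test both” for unknown inputs is a design choice of this sketch: when a task doesn’t fit the tree, measuring beats guessing.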

Real Success Stories: Speed Making a Difference

The E-commerce Startup

Remember Jake from the beginning? After using AiZolo to compare Claude 4.5 Haiku and Gemini Flash 3.0 speed, he implemented a hybrid approach:

- Gemini Flash 3.0 for initial customer inquiries (maximizing throughput)

- Claude 4.5 Haiku for complex problem-solving (better context retention)

Results after 30 days:

- Cart abandonment: 68% → 41%

- Customer satisfaction: +23%

- Support costs: -35%

- Response time: Average 1.1 seconds

The Content Agency

A digital marketing agency used AiZolo to compare both models across 1,000 content pieces:

- Gemini Flash 3.0: 38% faster for listicles and short-form content

- Claude 4.5 Haiku: 15% better quality for long-form analysis

- Hybrid approach: 40% productivity increase overall

Their workflow now automatically routes tasks to the optimal model based on content type.

The SaaS Developer

A developer building an AI coding assistant tested both models extensively:

- Initial tests: Claude 4.5 Haiku seemed faster for code completion

- At scale: Gemini Flash 3.0 handled concurrent users better

- Solution: Use both based on load and task complexity

Cost savings: $847/month by using AiZolo instead of separate subscriptions.

Your Next Steps: Stop Guessing, Start Testing

The only way to truly compare Claude 4.5 Haiku and Gemini Flash 3.0 speed for your specific needs is to test them yourself. Here’s how to get started:

1. Try AiZolo’s Free Plan

Get immediate access to both models without any credit card:

- Test real prompts from your workflow

- Compare responses side-by-side

- Measure actual speed differences

- See which model works best for you

Start comparing models for free →

2. Run Your Own Benchmarks

Don’t just trust generic benchmarks. Test with:

- Your actual prompts and use cases

- Your typical context and input lengths

- Your expected concurrent load

- Your quality requirements
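A small harness along these lines can cover the first three points. This is a sketch, not a production load tester: `call_model` stands for any function you write that sends one prompt to a model and returns when the response is complete, and `fake_model` is a stand-in stub so the example runs without network access or API keys.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import median
from typing import Callable, Dict, Sequence


def timed_call(call_model: Callable[[str], str], prompt: str) -> float:
    """Return the wall-clock seconds one request takes."""
    start = time.perf_counter()
    call_model(prompt)
    return time.perf_counter() - start


def benchmark(call_model: Callable[[str], str],
              prompts: Sequence[str],
              concurrency: int = 4) -> Dict[str, float]:
    """Send all prompts with a fixed number of concurrent workers."""
    if not prompts:
        raise ValueError("need at least one prompt")
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda p: timed_call(call_model, p), prompts))
    wall = time.perf_counter() - wall_start
    return {
        "requests": len(latencies),
        "median_latency_s": median(latencies),
        "max_latency_s": max(latencies),
        "requests_per_s": len(latencies) / wall,
    }


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real API call; replace with your own client."""
    time.sleep(0.01)
    return "response to: " + prompt


if __name__ == "__main__":
    # Use your real prompts and your expected concurrent load here.
    results = benchmark(fake_model, ["prompt %d" % i for i in range(8)], concurrency=4)
    print(results)
```

To compare the two models, pass the same prompt list and the same `concurrency` to `benchmark` once per model. Quality still has to be judged separately, since this harness only measures time.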

3. Consider the Pro Plan

For serious users, AiZolo Pro ($9.90/month) includes:

- Unlimited comparisons across all models

- 3,000,000 tokens per month

- Access to every premium AI model

- Priority support

- Advanced features like custom API keys

That’s less than the cost of a single ChatGPT Plus subscription, but you get access to Claude, Gemini, GPT, Perplexity, Grok, and more.

4. Use the Right Tool for Each Job

Once you have access to both models, develop a strategy:

- Use Gemini Flash 3.0 for high-volume, cost-sensitive tasks

- Use Claude 4.5 Haiku for complex reasoning and agentic workflows

- Test continuously and optimize based on real results

Conclusion: Speed Is Just the Beginning

Put Claude 4.5 Haiku and Gemini Flash 3.0 side by side and you discover that both are incredibly fast, among the fastest AI models available today. The real question isn’t “which is faster?” but “which is faster for what I’m trying to do?”

The key takeaways:

- Both models deliver a sub-second first token; complete responses in the tests above took roughly 1 to 8 seconds depending on the task

- Gemini Flash 3.0 has a slight throughput edge and lower costs

- Claude 4.5 Haiku excels at complex reasoning and context retention

- Real-world performance depends heavily on your specific use case

- Testing both models side-by-side is the only way to know for sure

The future of AI isn’t about picking one model and sticking with it forever. It’s about having access to the best tools and using the right one for each job. That’s exactly what AiZolo enables.

Stop paying $110+ per month for separate subscriptions. Stop switching between tabs and losing context. Stop guessing which model will work best.

Try AiZolo today and compare Claude 4.5 Haiku and Gemini Flash 3.0 speed yourself. Your first tests are completely free. No credit card required. No commitments.

Because in the race for AI speed, the real winner is the person who has access to every tool—and knows exactly when to use each one.

Frequently Asked Questions

Q: Is Claude 4.5 Haiku faster than Gemini Flash 3.0?

A: Both models have comparable speeds, with throughput around 300-400 tokens per second. Gemini Flash 3.0 has a slight edge in raw throughput, while Claude 4.5 Haiku excels in rapid conversational exchanges.

Q: Which model is better for real-time applications?

A: Both excel at real-time use. Gemini Flash 3.0 performs slightly better under sustained high load, while Claude 4.5 Haiku maintains better context in complex conversations.

Q: How much faster are these models compared to older versions?

A: Both are 3-4x faster than their predecessors while maintaining or improving quality. Claude 4.5 Haiku is significantly faster than Claude 3.5 Haiku, and Gemini Flash 3.0 is roughly 3x faster than Gemini 2.5 Pro.

Q: Can I test both models before committing?

A: Yes! AiZolo offers a free plan that lets you compare both models side-by-side with no credit card required. This is the best way to see which model works better for your specific needs.

Q: Does speed affect output quality?

A: Both models maintain excellent quality despite their speed. However, Gemini Flash 3.0 offers adjustable “thinking levels” that let you trade speed for deeper reasoning when needed.

Q: Which model is more cost-effective?

A: Gemini Flash 3.0 is roughly 2x cheaper than Claude 4.5 Haiku. However, using AiZolo at $9.90/month gives you access to both models plus others, saving you money compared to individual subscriptions.

Ready to experience the fastest AI models yourself? Start your free comparison on AiZolo now →
