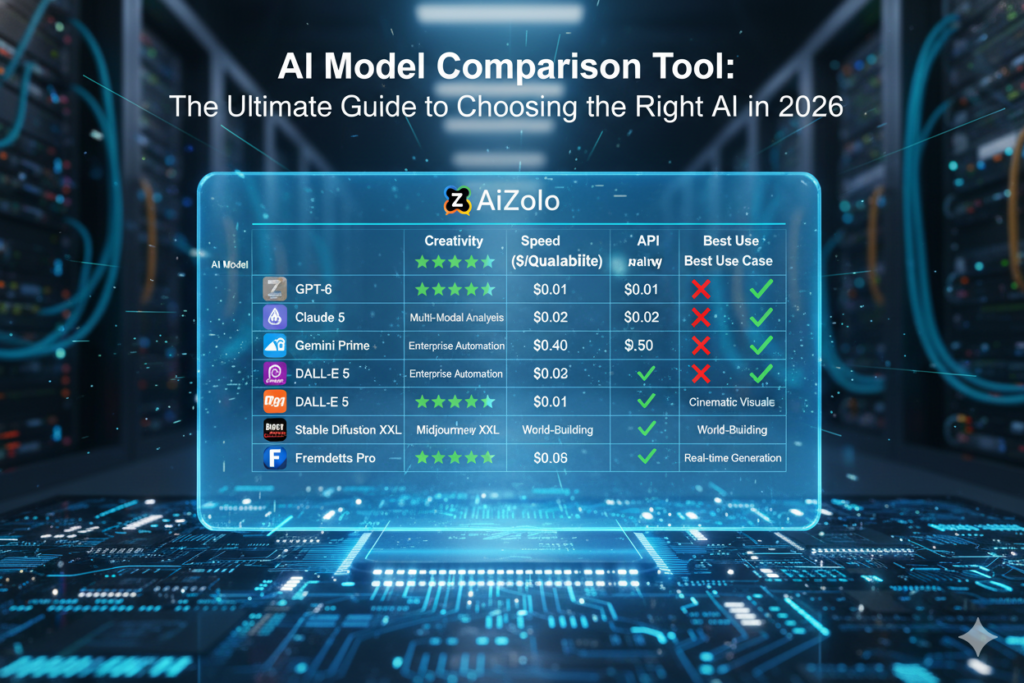

Artificial intelligence (AI) models have become ubiquitous tools for writing, research, teaching, and more. But not all AI models are the same. When you give the same prompt to ChatGPT, Claude, Gemini, or another model, you may get very different answers in style, content, or even accuracy. That’s why testing AI output across multiple models is so valuable. By comparing different AI engines side by side, you can spot differences in tone, creativity, factual correctness, and reliability. In this guide, we’ll explore why multi-model comparison matters and how to do it effectively. We’ll highlight real-world scenarios (education, content creation, research, customer support, software development, marketing), outline key dimensions to compare (tone, accuracy, creativity, hallucination, speed, cost), and recommend platforms for testing multiple models at once (Aizolo, Poe, ChatHub, Janitor AI, Ithy, and SNEOS). You’ll also find example prompts for writing, research, technical, and education tasks, plus tips on choosing the right model for each situation.

Why Compare AI Models?

AI models (like OpenAI’s GPT, Anthropic’s Claude, Google’s Gemini, Mistral’s open LLMs, and many others) each have distinct strengths and weaknesses. One might be more factual but less creative, while another is more expressive but prone to embellishment. By testing outputs across different models, you can get multiple perspectives, catch errors or hallucinations in any one model, match a model’s strengths to your task, and even identify biases. It’s a bit like using different search engines: Google, Bing, and DuckDuckGo may rank the same query differently, so consulting several gives a fuller picture. Similarly, comparing AI outputs helps you “triangulate” the best answer. An NC Bar Association review of AI chat tools puts it this way: “the main advantage of tools that aggregate and query multiple language models is that they help determine which model provides the best output, as well as identify biased results” (ncbar.org). Testing multiple AIs is also a hedge against hallucination: some models make up facts more often than others, and comparing answers helps you spot and correct those errors.

Multi-Model AI Comparison: Key Dimensions

When evaluating different AI outputs, consider several dimensions. Each model has its own voice, accuracy, creativity, reliability, speed, and cost profile. Comparing them on these dimensions helps you pick the right one for your needs:

- Tone and Style. Models differ in formality, humor, and verbosity. For example, GPT models (GPT-4 and later) tend to answer factually and can be a bit dry, whereas Claude often uses a more conversational, witty style (blog.type.ai). In one comparison, Claude even “landed a joke” that ChatGPT only clumsily hinted at (blog.type.ai). Google’s Gemini, designed for multimodal tasks, may incorporate examples or suggest visuals in its answers. Comparing outputs side by side shows you which voice fits your audience.

- Factual Accuracy and Hallucination. Some models hallucinate less than others. For instance, figures cited by Type.ai put GPT-4 Turbo’s hallucination rate at roughly 1.7%, one of the lowest measured (blog.type.ai). Newer Claude models have also improved fact-checking and guardrails (blog.type.ai), though their exact error rates aren’t always public. Earlier Gemini versions had higher error rates (one report found a 9.1% hallucination rate for Gemini 1.5; blog.type.ai), although Gemini 2.5 reportedly improves on this. When accuracy is critical (e.g. legal or medical information), weight this factor heavily. Multi-model testing lets you detect when one model’s answer conflicts with another’s.

- Creativity and Fluency. For creative writing or marketing copy, some models stand out. Claude has a reputation for expressive, “human-like” writing with humor and nuance (blog.type.ai). GPT can also be creative, but it is often more straightforward. If you ask both to write a story or poem, you may find Claude adds imaginative details or playful language. Running the same creative prompt across models lets you pick the catchiest output or combine ideas from each.

- Multimodality. Some AIs can handle images, audio, or video inputs natively. For example, Gemini 2.5 can process video clips, audio recordings, and images in one conversation (blog.type.ai). If your use case involves non-text input (like summarizing a lecture recording or analyzing a photo), compare a text-only model’s response with a multimodal model’s.

- Speed and Latency. In real-time applications (customer chatbots, live assistants), response time matters. Benchmarks show clear differences: open models such as Mistral’s Mixtral 8x7B can respond very quickly (sub-second) compared with big proprietary models (latestly.ai). Among major providers, Claude 3.5 Sonnet was found to have lower latency than GPT-4 Turbo, with Gemini 1.5 slower on long tasks (latestly.ai). If you need quick replies, favor a faster model or use streaming APIs.

- Cost and Access. Models vary in pricing and availability. ChatGPT and Claude offer tiered subscriptions (about $20/month for the standard paid plan), while Google bundles Gemini into a Google One subscription tier (around $20/month) (blog.type.ai). Mistral’s open-weight models are free to run if you have the hardware. API usage is billed per token, so filling a large context window (Gemini and Claude now support up to ~1 million tokens; blog.type.ai) makes each query cost more. Comparing outputs helps you judge value: if a cheaper model answers just as well, you save money.
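To make these dimensions concrete, here is a minimal Python sketch of the core of any side-by-side tool: send one prompt to several models, record each answer and how long it took, and flag whether the answers agree. The model functions are hypothetical stand-ins; in practice you would replace them with calls to each provider’s SDK or a multi-model platform’s API. The agreement check (exact match after trimming and lowercasing) is deliberately crude.

```python
import time
from typing import Callable, Dict, List, NamedTuple


class Result(NamedTuple):
    model: str
    answer: str
    seconds: float


def compare_models(prompt: str, models: Dict[str, Callable[[str], str]]) -> List[Result]:
    """Send the same prompt to every model; record each answer and its latency."""
    results: List[Result] = []
    for name, ask in models.items():
        start = time.perf_counter()
        answer = ask(prompt)
        results.append(Result(name, answer, time.perf_counter() - start))
    return results


def answers_agree(results: List[Result]) -> bool:
    """Crude check: identical answers after trimming whitespace and lowercasing."""
    return len({r.answer.strip().lower() for r in results}) <= 1


# Hypothetical stand-ins for real API clients; replace with your own SDK calls.
def model_a(prompt: str) -> str:
    return "Brasília"


def model_b(prompt: str) -> str:
    return "brasília "


if __name__ == "__main__":
    results = compare_models("What is the capital of Brazil?", {"model_a": model_a, "model_b": model_b})
    for r in results:
        print(f"{r.model:8} {r.seconds * 1000:8.3f} ms  {r.answer!r}")
    print("All answers agree:", answers_agree(results))
```

The loop calls models one after another to keep the sketch simple; with real network calls you would likely send requests concurrently (for example with `concurrent.futures.ThreadPoolExecutor`) and time each call inside its own worker, so one slow model doesn’t delay the rest.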

Side-by-side comparisons along these dimensions give developers and content creators a concrete way to evaluate different AI outputs.

Use Cases: Multi-Model Testing in Action

Testing AI outputs across models isn’t just theoretical – it has many practical applications. Here are some examples across fields:

- Education. Teachers and learners can compare how different models explain a concept. For instance, asking “Explain the water cycle to a 5th grader” might elicit a straightforward, textbook-style answer from GPT, versus a narrative or analogy-laden explanation from Claude. By viewing both, educators can choose which is clearer or more engaging. Multiple models can also generate diverse examples or quiz questions on a topic. For example, one might use GPT to draft a definition and Claude to write a fun mnemonic, then merge them. Multi-model tools let students see multiple viewpoints, much like a classroom discussion.

- Content Creation and Copywriting. Writers often need fresh ideas or different tones. Suppose a marketer asks, “Write a product description for an eco-friendly water bottle.” They can run this prompt on GPT, Claude, and Gemini side by side. Perhaps GPT’s output is concise and formal, while Claude’s is vivid and humorous. The writer can then blend the best parts or choose the tone that matches their brand. Tools such as Aizolo or Poe let the writer see those outputs together and copy the preferred one (ncbar.org). This speeds up brainstorming and ensures variety. It also helps spot inconsistencies or clichés: if two models repeat the same overused phrase, you’ll notice it.

- Academic and Professional Research. Researchers who rely on AI summaries can benefit from multi-model output. If you ask each model to summarize a recent scientific paper, one might include details the others miss. For example, an evaluation published by Vellum found that GPT-4o outperformed Claude 3.5 at extracting data fields from contracts, but both models reached only 60–80% accuracy without special prompting (vellum.ai). The lesson is not to trust any single output blindly. Running both models on the same contracts lets you cross-check each extracted field: where they agree, confidence rises; where they disagree, or only one model finds a value, the field needs manual review. Multi-model testing tools surface such differences, reducing the risk of overlooked errors in critical tasks.

- Customer Service and Support. Companies building chatbots can try multiple models to see which yields the most helpful answers. For example, a support prompt like “How do I reset my password?” might get a precise step-by-step answer from GPT, while Claude adds clarifications to reduce confusion. A support engineer could even use both as a fallback chain: let GPT answer most queries, but if an answer seems incomplete or off, query Claude (or vice versa). Platforms like ChatHub let you run multiple bots at once to see which one handles the query best (chathub.gg), and users report that seeing multiple bot responses “elevates the work” by letting them pick the best reply (chathub.gg).

- Software Development and Coding. AI-assisted coding is now widespread, and some models are better at code than others. OpenAI’s GPT-4o and Anthropic’s Claude 4 have strong coding skills, while smaller models may struggle with complex algorithms. When you prompt “Write a Python function for quicksort,” comparing the outputs side by side can reveal which model handled edge cases or comments better. In the Vellum evaluation mentioned earlier, GPT-4o beat Claude 3.5 on several of the extracted fields (vellum.ai), though that test measured data extraction rather than code generation, so your own coding comparisons are a better guide. A developer can use a multi-model interface to run the same bug-description prompt through each model and see which suggestion is most accurate. This is like code review: instead of one peer, you get several “AI peers” checking your work, and tools that show responses next to each other make this fast.

- Marketing and SEO. Marketers often A/B test content. By generating multiple headlines or ad copy from different models, you can gauge tone and originality. For example, GPT might create a factual headline, while Claude might spice it up with emotion. Testing which one resonates more with your audience (through clicks or engagement) can inform style. Also, when fact-checking stats or claims in marketing copy, comparing models helps avoid mistakes. If one model hallucinates a statistic, another model’s answer or a web search can catch it. Overall, using multiple AIs is like having a small creative team – each “member” contributes unique ideas.
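The contract-extraction scenario under Academic and Professional Research can be partly automated. This Python sketch assumes each model has already returned its extracted fields as a dictionary (the field names and values here are made up) and labels every field as agreeing, conflicting, or found by only one model. Agreement is not proof of correctness, so treat the output as a list of fields to review rather than a verdict.

```python
from typing import Dict, List, Optional


def cross_check(a: Dict[str, Optional[str]], b: Dict[str, Optional[str]]) -> Dict[str, str]:
    """Compare two models' extracted fields and label each one."""
    report: Dict[str, str] = {}
    for field in sorted(set(a) | set(b)):
        va, vb = a.get(field), b.get(field)
        if va is None and vb is None:
            report[field] = "both_missing"
        elif va is None or vb is None:
            report[field] = "only_one"  # one model found a value the other missed
        elif va.strip().lower() == vb.strip().lower():
            report[field] = "agree"
        else:
            report[field] = "conflict"
    return report


def needs_review(report: Dict[str, str]) -> List[str]:
    """Fields where the two models did not clearly agree."""
    return [field for field, status in report.items() if status != "agree"]


# Hypothetical extractions from the same contract by two different models.
model_a_fields = {"party": "Acme Corp", "term_months": "24", "governing_law": "Delaware"}
model_b_fields = {"party": "ACME Corp", "term_months": "36", "renewal": "automatic"}

report = cross_check(model_a_fields, model_b_fields)
print(report)
print("Review:", needs_review(report))
```

With the sample data, `party` agrees (the comparison ignores case), `term_months` conflicts, and `governing_law` and `renewal` were each found by only one model, so those three fields go to a human reviewer.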

The key idea is that different tasks benefit from different model strengths. Multi-model tools let you sample those strengths quickly. You might find that:

- For writing/editing, Claude’s fluent style is a boon (blog.type.ai).

- For raw factual queries, GPT-4-series models may be the more reliable choice, with a reported hallucination rate of about 1.7% for GPT-4 Turbo (blog.type.ai).

- For multimodal content (images/video), Gemini or other Google models shine.

- For code, GPT, Claude, and specialized coding models typically lead.
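Once your own tests show which model tends to win for each kind of task, you can encode that as a simple routing table. This is a minimal Python sketch; the task labels and model names are placeholders loosely mirroring the list above, not a recommendation, and you would fill the table in from your own results.

```python
from typing import Dict

# Placeholder routing table: fill it in from your own multi-model test results.
ROUTES: Dict[str, str] = {
    "writing": "claude",
    "factual": "gpt",
    "multimodal": "gemini",
    "code": "gpt",
}
DEFAULT_MODEL = "gpt"  # used when a task type has no recorded winner yet


def pick_model(task_type: str, routes: Dict[str, str] = ROUTES, default: str = DEFAULT_MODEL) -> str:
    """Return the model your tests favored for this task type, or the default."""
    return routes.get(task_type.strip().lower(), default)


print(pick_model("Writing"))      # claude
print(pick_model("translation"))  # gpt (no entry, so the default is used)
```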

To illustrate, imagine Maria, a science teacher. She asks ChatGPT and Claude to explain “photosynthesis” in simple terms. ChatGPT gives a straightforward definition, while Claude adds a fun analogy (“just like making lemonade, plants make food from sunlight”). Maria uses both: she teaches her class the solid science from ChatGPT, then shares Claude’s analogy to reinforce the concept. This blend of AI outputs, made possible by multi-model testing, makes learning more engaging.

Tools for Side-by-Side AI Output Comparison

To test AI output across models, you need the right tools. Several platforms let you chat with or query multiple models at once. We especially recommend:

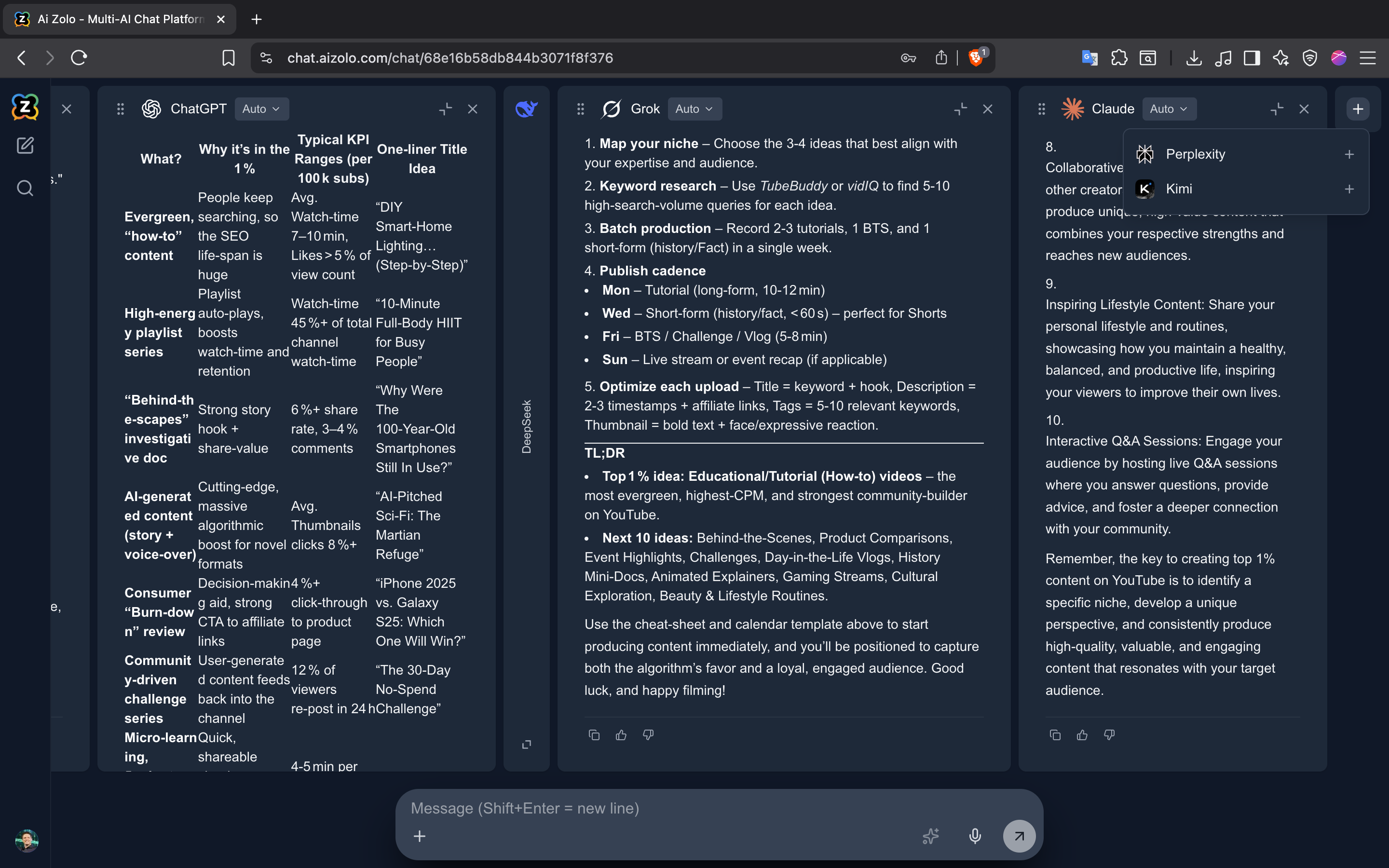

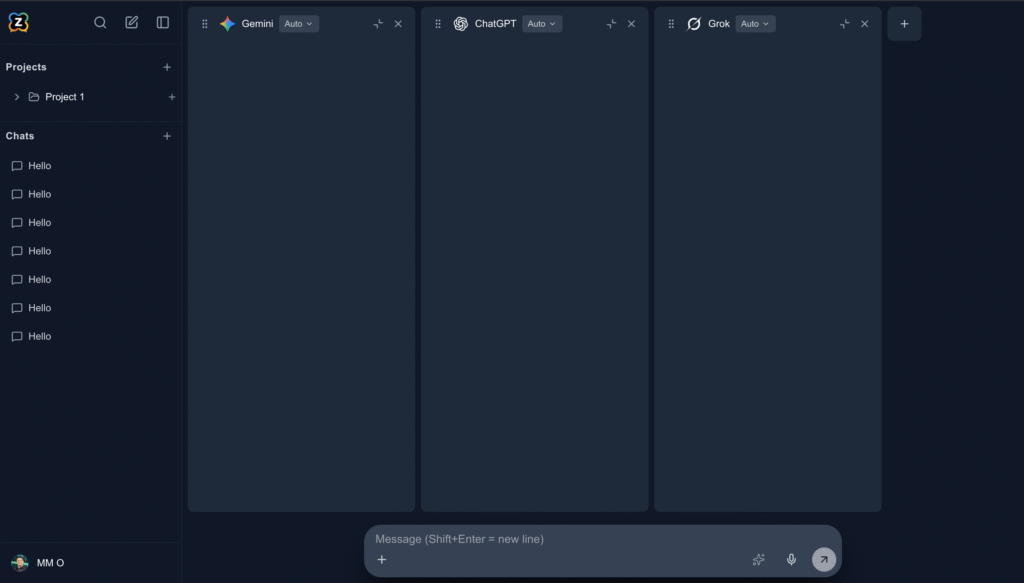

- Aizolo – All-in-One AI Workspace. Aizolo is designed for exactly this purpose. It lets you compare 50+ AI models side by side in a customizable workspace, using your own API keys if needed. (Think of it as Google Sheets for AI outputs.) Aizolo’s own blog describes it as the “All-in-One AI Workspace” for model comparison (aizolo.com). With Aizolo, you can open multiple chat windows simultaneously, prompt them with the same input, and see every output in a grid. You can resize panels, reorder columns, and export results for analysis. This is ideal for any researcher or creator who needs to evaluate several models in parallel. For example, you could put GPT-4o in one column, Claude Sonnet in another, and a local Llama model in a third, then run a prompt like “Summarize this research paper.” The workspace makes differences easy to spot.

- Poe (Platform for Open Exploration). Poe is Quora’s AI chat aggregator (ncbar.org). It provides a unified interface to chat with ChatGPT, Claude, Gemini, DeepSeek, Grok, Llama, and more (ncbar.org). Poe supports side-by-side conversations: after getting one answer, you can quickly rerun the prompt on another model without retyping. The NCBA article notes that Poe even lets you upload files and then “compare it with other models without needing to retype the prompt” (ncbar.org). Poe has free and paid tiers; even the free tier lets you test a variety of bots. It’s user-friendly and mobile-compatible. (One caution: because Poe relays your queries to third-party AI APIs, it warns you not to send sensitive data; ncbar.org.) Still, for open-ended experiments it’s great.

- ChatHub. ChatHub is a web app (and browser extension) that lets you chat with multiple AI bots at once (chathub.gg). It supports GPT-5 (and older), Claude 4, Gemini 2.5, Llama 3.3, and others (chathub.gg). You can split the screen into multiple chat windows and give each the same prompt. ChatHub even has built-in extra tools like web search, code preview, and image generation that work on any model (chathub.gg). ChatHub users praise it: one review says it’s “Simple but effective” and “great to have all the chat bots in one place” (chathub.gg). For example, a marketer might open ChatHub with GPT and Claude tabs, paste the same brand slogan prompt into both, and instantly see which one sounds catchier. ChatHub also has a mobile app and desktop version, making it very accessible.

- Janitor AI. Janitor AI is a bit different: it’s a character-driven chatbot platform, but behind the scenes you can hook it up to multiple LLMs. By default Janitor uses its own experimental LLM, but you can connect external models (OpenAI, KoboldAI, Claude, etc.) via APIs (decodo.com). In practice, Janitor lets you create AI “characters” (e.g. a teacher, a customer support agent, a historical figure) and have them chat. You choose which model powers the character. For multi-model testing, you might clone a Janitor character and link one copy to GPT and another to Claude. Then, interacting with both characters reveals how each model handles the same personality or scenario. Decodo explains that Janitor’s advantage is customization and voice, but notes it’s essentially a front-end for other LLMs (decodo.com). It can be fun for creative tasks. (Note: if you connect it to GPT or Claude APIs, you pay the usual usage fees; decodo.com.)

- Ithy (formerly Arxiv GPT). Ithy is built for research: it aggregates responses from ChatGPT, Google’s Gemini, and Perplexity AI, and then synthesizes them into a unified “article” (ncbar.org). When you ask a research question, Ithy shows you each model’s answer side by side and also writes a combined summary. It’s like a mini-paper that cites all three. For example, you could ask a medical question and see which facts each model includes. Ithy even generates a table of contents and references in its combined output (ncbar.org). It’s free for up to 10 questions per day (or $120/year for unlimited use). Researchers like that you can easily compare the factual content of each model’s answer, spotting contradictions or missing citations.

- SNEOS (Write Once, Get Insights from Multiple AI Models). SNEOS is a simple web tool by developer Victor Antofica. You type in a prompt (or upload a document) and it returns responses from ChatGPT, Claude, and Gemini side by side (ncbar.org). It also highlights differences: one panel called “AI Response Comparison” marks where answers diverge and even gives a “best answer” score (usually favoring Gemini in SNEOS’s tests; ncbar.org). For example, in SNEOS you might ask “What’s the capital of Brazil?” and see all three answers; the tool highlights that they all say “Brasília”, reinforcing the fact. It’s great for quick checks. There’s a free version (no login needed) and a $29/month premium with more models and features. (Again, be careful not to paste sensitive data in free tools: these send your prompt to multiple AI APIs.)
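SNEOS’s “AI Response Comparison” panel is a hosted feature, and its internals aren’t described here. If you want a rough local equivalent for two answers you have already collected, Python’s standard-library `difflib` can score how similar they are and list the word-level spots where they diverge. This is a sketch of one possible approach, not how SNEOS works.

```python
import difflib
from typing import List


def similarity(a: str, b: str) -> float:
    """Rough 0-to-1 similarity between two answers, compared character by character."""
    return difflib.SequenceMatcher(None, a, b).ratio()


def divergent_words(a: str, b: str) -> List[str]:
    """Describe each word-level edit that turns answer a into answer b."""
    words_a, words_b = a.split(), b.split()
    matcher = difflib.SequenceMatcher(None, words_a, words_b)
    changes = []
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        if op != "equal":  # 'replace', 'delete', or 'insert'
            changes.append(f"{op}: {' '.join(words_a[i1:i2])!r} -> {' '.join(words_b[j1:j2])!r}")
    return changes


answer_a = "The capital of Brazil is Brasília."
answer_b = "The capital of Brazil is Rio de Janeiro."
print(f"similarity: {similarity(answer_a, answer_b):.2f}")
for change in divergent_words(answer_a, answer_b):
    print(change)  # replace: 'Brasília.' -> 'Rio de Janeiro.'
```

Word-level diffs work well for short factual answers; for long, freely worded answers, two correct responses can differ almost everywhere, so a low similarity score is a prompt to read closely rather than evidence of an error.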

These platforms (especially Aizolo and Poe) exemplify AI model testing tools. They let you make “side-by-side AI output” comparisons in real time (ncbar.org). Just as the NC Bar Association article compares them to meta-search engines, their benefit is that “each displays strengths and weaknesses” and you can “compare or combine results” easily (ncbar.org). In practice, legal and technology professionals use these tools to help them pick the best answer. One reviewer noted that using ChatHub daily helped them identify which LLM to use for specific use cases (chathub.gg), and ChatHub users have called it “excellent to have all the chatbots in one place” (chathub.gg).

Example Prompts for Multi-Model Testing

To see how multi-model comparison works, it helps to try prompts in different domains. Below are sample prompts you might run in a tool like Aizolo, Poe, or ChatHub, and how you could interpret their outputs:

- Writing/Content Prompt: “Write a friendly blog introduction about the importance of sleep for college students.”

  - GPT might produce a clear, concise introduction: it will mention studies and keep a straightforward helpful tone.

  - Claude might give a more conversational answer, perhaps starting with a relatable scene (“Imagine you’re cramming for finals at 2 AM…”) and using humor.

  - Gemini might even offer to pull in an image suggestion or lay out subheadings, depending on its features.

By comparing, you could pick the tone you prefer (formal vs casual), or combine lines from each. For instance, you might take GPT’s factual hook (“Research shows that…”) and Claude’s friendly anecdote.

- Research Prompt: “Summarize the latest findings on AI in healthcare from a 2024 scientific article.”

  - GPT may give a precise summary if it’s been trained on or has access to up-to-date data, focusing on methodology and results.

  - Claude might emphasize the human impact or ethical considerations of those findings, perhaps offering a narrative flair.

  - Another model (like Gemini) might integrate bullet points or a quick chart if available.

By evaluating the accuracy and coverage of each summary, you can spot gaps. If GPT misses an important statistic but Claude catches it (or vice versa), you’ll know to double-check. This cross-evaluation helps ensure critical details aren’t overlooked.

- Technical Prompt: “Write a Python function that implements quicksort and includes comments explaining each step.”

  - GPT typically produces working code with explanatory comments, as it’s been heavily used for coding tasks.

  - Claude might produce an alternative implementation or style, maybe using Python’s idioms differently.

  - A code-specialized model (such as one of OpenAI’s Codex models or a Mistral code model) might also be worth testing if your tool offers it.

Comparing these outputs can be very practical: you might notice that GPT’s version is more verbose, while Claude’s is more concise, or vice versa. You could even combine – use GPT’s logic but adapt Claude’s variable names. Importantly, if one model’s code has a mistake (like an off-by-one error), the other might correct it. Side-by-side viewing reveals these technical differences instantly.
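One practical way to act on this is to keep a reference implementation and a small test harness, then paste each model’s function in as a candidate. The Python sketch below is one common quicksort variant (middle-element pivot, three-way partition, returning a new list rather than sorting in place). `check_sort` compares any candidate against Python’s built-in `sorted()` on edge cases such as empty lists and duplicates, plus seeded random inputs. `buggy_quicksort` is a made-up example of the kind of subtle error a model might produce.

```python
import random
from typing import Callable, List


def quicksort(items: List[int]) -> List[int]:
    """Return a new sorted list using quicksort (not in place)."""
    # Base case: lists of length 0 or 1 are already sorted.
    if len(items) <= 1:
        return list(items)
    # Use the middle element as the pivot.
    pivot = items[len(items) // 2]
    # Three-way partition keeps all copies of the pivot, so duplicates survive.
    less = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    greater = [x for x in items if x > pivot]
    # Sort the outer partitions recursively and stitch the pieces together.
    return quicksort(less) + equal + quicksort(greater)


def buggy_quicksort(items: List[int]) -> List[int]:
    """Made-up flawed variant: extra copies of the pivot are silently dropped."""
    if len(items) <= 1:
        return list(items)
    pivot, rest = items[0], items[1:]
    less = [x for x in rest if x < pivot]
    greater = [x for x in rest if x > pivot]  # bug: values equal to the pivot vanish
    return buggy_quicksort(less) + [pivot] + buggy_quicksort(greater)


def check_sort(candidate: Callable[[List[int]], List[int]], trials: int = 200) -> bool:
    """Compare a candidate sort against sorted() on edge cases and random inputs."""
    cases = [[], [1], [2, 1], [3, 3, 3], [5, -1, 0, 5, -1], list(range(10, 0, -1))]
    rng = random.Random(0)  # fixed seed so results are reproducible
    cases += [[rng.randint(-50, 50) for _ in range(rng.randint(0, 30))] for _ in range(trials)]
    return all(candidate(list(case)) == sorted(case) for case in cases)


print("reference:", check_sort(quicksort))       # True
print("buggy:", check_sort(buggy_quicksort))     # False (fails on duplicates)
```

Running `check_sort` on each model’s function turns “looks right” into a quick pass/fail, which is easier to compare across models than reading the code alone.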

- Education Prompt: “Explain Einstein’s theory of relativity to a 10-year-old.”

  - GPT might try to simplify the concept using analogies about speed or space, possibly sounding a bit formal.

  - Claude might create a short story or visual analogy (e.g. trampoline representing spacetime) with a friendly tone.

  - Gemini or others could include an illustrative example of an experiment.

Comparing helps ensure clarity: a teacher can pick the explanation a student understands best. If Claude’s explanation misses a key detail, GPT’s may cover it, and vice versa; one may also simply be more engaging for young learners.

These examples show how you might copy the same prompt into multiple models and compare the text results. Tools like Aizolo let you run all these prompts in columns, so you truly see them side by side. This is particularly useful when you need to evaluate different AI outputs for consistency or creativity. After running such examples, review the results: which answer is most correct? Which has the best tone? Which one needs fact-checking? Doing this systematically trains you to “think critically” about AI-generated content.
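To make that review systematic, you can rate each answer on a few criteria and combine the ratings with weights. In this Python sketch the criteria, weights, and 1-to-5 ratings are all hypothetical; the ratings would come from a human reviewer, and the weights should reflect what matters for your task (here, correctness counts most).

```python
from typing import Dict

# Hypothetical weights; they sum to 1.0 here, but weighted_score normalizes anyway.
WEIGHTS: Dict[str, float] = {"correctness": 0.5, "completeness": 0.3, "tone": 0.2}


def weighted_score(scores: Dict[str, int], weights: Dict[str, float] = WEIGHTS) -> float:
    """Weighted average of 1-5 ratings; raises KeyError if a criterion is unrated."""
    total_weight = sum(weights.values())
    return sum(weight * scores[criterion] for criterion, weight in weights.items()) / total_weight


def best_answer(ratings: Dict[str, Dict[str, int]]) -> str:
    """Return the model whose answer earned the highest weighted score."""
    return max(ratings, key=lambda model: weighted_score(ratings[model]))


# Hypothetical reviewer ratings for one prompt.
ratings = {
    "gpt": {"correctness": 5, "completeness": 4, "tone": 3},
    "claude": {"correctness": 4, "completeness": 4, "tone": 5},
    "gemini": {"correctness": 4, "completeness": 3, "tone": 4},
}
for model, scores in ratings.items():
    print(f"{model:7} {weighted_score(scores):.2f}")  # gpt 4.30, claude 4.20, gemini 3.70
print("best:", best_answer(ratings))  # gpt
```

Shifting weight toward tone would favor the Claude answer in this made-up example, which is the point: the “best” model depends on what you decide to value for the task.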

Comparing Models: Tone, Accuracy, Creativity, and More

Having looked at examples and use cases, let’s summarize how different models typically perform on key criteria:

- Tone & Style: ChatGPT/GPT-4o is known to use clear, well-organized language, but can sound formal or dry at times (blog.type.ai). In contrast, Claude (especially the newer Sonnet 4) tends to be more expressive and human-like. As one analysis put it, “What makes Claude stand out… is how much more expressive it is than ChatGPT” (blog.type.ai). Claude can easily write in various styles (conversational, humorous, etc.) and even “land a joke” when instructed (blog.type.ai). Gemini, being Google’s large multimodal model, often produces text in a balanced style and may suggest images or tables where relevant (blog.type.ai). When comparing tone, an easy test is to ask all models for a creative task (like writing a farewell email) and note which one feels friendliest or most natural. The differences in tone are one reason educators and writers test multiple outputs: the best-sounding answer might come from a model you didn’t originally plan to use.

- Factual Accuracy and Hallucination: ChatGPT (GPT-4 series) often scores very well on factual tasks. In fact, GPT-4 Turbo has “one of the lowest hallucination rates” (~1.7%) (blog.type.ai), meaning it rarely makes up facts. Claude, built with strong safety in mind, also emphasizes factual consistency and ethics. The latest Claude 4 models have hybrid reasoning designed to improve fact-checking (blog.type.ai), and Anthropic claims they have “better safety guardrails and more rigorous fact-checking” (blog.type.ai). However, every model can err; for example, earlier Gemini versions struggled with factual accuracy (Gemini 1.5 had ~9.1% hallucinations in some tests; blog.type.ai), although Gemini 2.5 shows major improvements (blog.type.ai). When you compare outputs, factual discrepancies pop out immediately. If GPT says “1986” and Claude says “1979” for a historical date, you know to verify with a reliable source. Always double-check critical facts by cross-referencing outputs or asking the model to cite sources. This is one of the biggest benefits of multi-model testing: it encourages you not to take any single answer at face value.

- Creativity and Expressiveness: Claude generally excels in creative writing. In the Type.ai comparison, Claude’s answers were noted as concise yet “much more expressive” and human-like (blog.type.ai). It could write jokes and switch styles smoothly. GPT can also be creative, but in that comparison it came across as “not terribly warm or clever” (blog.type.ai), whereas Claude could deliver a pun. Gemini has also shown strong creative abilities and reportedly ranked #1 on a creative-writing benchmark (blog.type.ai). For brainstorming or content that needs “spark,” trying all three is wise: Claude or Gemini may surprise you with a novel idea that GPT missed. Creative tasks are subjective, so side-by-side outputs help you judge which model’s style resonates. Content creators often do this: one model’s draft might be a great outline, another’s a better conclusion.

- Hallucination/Safety: Hallucinations (made-up facts) are related to accuracy but worth mentioning separately. As noted, GPT-4-series models and Claude 4 are reported to have some of the lowest rates thanks to training improvements (blog.type.ai). They also implement guardrails: for example, Claude’s design explicitly prioritizes ethical, “human-aligned” responses (blog.type.ai). This means Claude might refuse or carefully reframe a request that seems unethical. GPT also has safety filters but can sometimes be more permissive. Gemini’s safety improvements are ongoing; Google vets its outputs especially when they are integrated into search products. When testing outputs, notice whether any model refuses a prompt or gives a warning while another simply answers. A safe model might say “Sorry, I cannot help with that,” which is useful information. In critical settings (legal, medical), you might prefer the model that errs on the side of caution (likely Claude, or GPT with strict settings). Testing also helps reveal bias: if different models respond very differently to a sensitive question, that’s a cue to analyze further.

- Speed and Throughput: The latency benchmarks mentioned earlier (latestly.ai) show that no single “fastest” model wins in every case. If you need lightning-fast answers (like for a live chat), you might choose a smaller open model (Mistral) or Claude 3.5 over GPT-4o (latestly.ai). On the other hand, if you can tolerate more delay, GPT-4o’s extra processing time may be worth it for better reliability. Gemini 1.5 Pro was noted to be slower than the others, especially for long answers (latestly.ai). This matters if you’re testing bots in a live scenario, where you can measure response times too. Because Aizolo shows every model’s output for the same prompt, you can time them side by side; for APIs, you might use speed tests like those by Latestly. In any case, weighing speed against accuracy is key: sometimes the fastest model is “good enough”, and sometimes a slower model that double-checks facts is worth the wait.

- Cost Considerations: All else being equal, you might lean toward the cheaper option. Model speed and size often correlate with cost: GPT-4o is slower and costs more per token than Mistral’s smaller models. ChatGPT and Claude paid plans are ~$18–$20/month (blog.type.ai), and Google One with Gemini is ~$19.99/month (blog.type.ai). If your application has a lot of traffic, per-request costs add up. Multi-model testing can inform your budget: if a less expensive model consistently meets your needs, stick with it. Some multi-model tools also let you use your own API keys, so you pay only for usage (e.g. Aizolo can be set to use your keys). In any analysis, it helps to track “cost per good answer”, which you discover by comparing how often a cheap model answers well versus how often you have to fall back to a pricier one.
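The “cost per good answer” idea reduces to simple arithmetic. The Python sketch below computes it, plus the expected cost of a cheap-first strategy in which only the cheap model’s failures are re-run on a premium model: expected cost per query = cheap cost + (1 − cheap success rate) × premium cost. The prices and success rate in the example are invented for illustration, and the fallback formula assumes the premium model always succeeds and ignores the cost of checking answers.

```python
def cost_per_good_answer(total_cost: float, good_answers: int) -> float:
    """Total spend divided by the number of answers that passed review."""
    if good_answers == 0:
        return float("inf")  # nothing usable was produced for the money
    return total_cost / good_answers


def expected_cost_with_fallback(cheap_cost: float, premium_cost: float, cheap_success_rate: float) -> float:
    """Expected cost per query when every query tries the cheap model first and
    only its failures are re-run on the premium model (assumed to succeed)."""
    if not 0.0 <= cheap_success_rate <= 1.0:
        raise ValueError("cheap_success_rate must be between 0 and 1")
    return cheap_cost + (1.0 - cheap_success_rate) * premium_cost


# Invented example numbers (dollars per query), not real prices.
cheap, premium, success = 0.002, 0.02, 0.80
print(f"premium only:         ${premium:.4f} per query")
print(f"cheap + fallback:     ${expected_cost_with_fallback(cheap, premium, success):.4f} per query")
print(f"cost per good answer: ${cost_per_good_answer(12.0, 400):.4f}")
```

With these numbers, the cheap-first strategy averages about $0.006 per query versus $0.02 for always using the premium model; as the cheap model’s success rate drops, the gap narrows.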

Platforms and Tools for Multi-Model Testing

We’ve already mentioned several tools above, but here is a quick summary:

- Aizolo: Central for our purposes. It’s built around side-by-side model comparison. Use it for any intensive multi-model testing. (See Aizolo’s own blog for more details.)

- Poe: Great for comparing outputs across many popular models (GPT, Claude, Gemini, etc.) on chat-style prompts (ncbar.org).

- ChatHub: Best for a streamlined web interface that includes 20+ models. It’s praised for visual ease and affordability (chathub.gg).

- Janitor AI: Useful if you want to craft character-based chats powered by different models (decodo.com).

- Ithy: Ideal for research synthesis tasks; it aggregates ChatGPT, Gemini, and Perplexity into a unified answer (ncbar.org).

- SNEOS: A quick, no-login way to get ChatGPT, Claude, and Gemini responses in a side-by-side comparison (ncbar.org).

These tools make it easy to “test” your prompt on multiple engines. They handle the API connections and interfaces so you can focus on the content. Be mindful of privacy and data policies: as the NC Bar guide warns, any query you enter may be sent to third-party models (ncbar.org), so avoid confidential inputs.

The takeaway: pick the right tool for your workflow. For quick comparisons, Poe or SNEOS are easy to try. For deep research projects, Ithy or Aizolo’s workspace offer advanced features (like saving outputs, exporting data). Many creators even use multiple tools: Poe to prototype, Aizolo to scale up or to manage large queries.

Tips: Choosing the Right Model for Each Task

After testing, how do you choose? Here are some tips distilled from all the above:

- Match the Model to the Task: If you need creative flair, pick the model that produced the best style (e.g. Claude for storytelling). If you need factual precision, pick the one with the most correct details (often GPT-4o or Claude 4). For multimedia tasks (images, audio), use Gemini or specialized models.

- Check Hallucinations: If any answer seems suspicious, compare it with another model or a trusted source. Never assume one AI is infallible. If two different models agree on a fact, it’s likelier to be true, though models can share the same mistakes, so verify anything critical.

- Balance Speed vs. Quality: For time-sensitive tasks (live chat, rapid data), favor faster models or use streaming. If you can wait, use the more accurate model. Multi-model tests can quantify the trade-off (e.g. measure response times and error rates from each model on your key prompts).

- Consider Cost: If a cheaper model meets your needs, default to it to save budget. If not, allocate usage to the premium model for edge cases. Multi-model outputs can reveal how often the cheaper model is sufficient.

- Leverage Ensemble Answers: Sometimes the best answer is a combination. For instance, you might merge the strongest points from several model outputs into a single answer. Especially in writing or research, this can yield a richer result than any single model alone.

- Use Model-Switching Tools: When in doubt, use platforms like Aizolo or ChatHub to re-run failed or low-quality answers on another model. These tools are made for iterating on prompts across LLMs.

- Stay Updated: AI models evolve quickly. Today’s winner might be tomorrow’s warm-up act, and new Claude, GPT, Gemini, and Mistral releases often change the landscape. Regularly test new versions (many tools list new models as they come out) so your insights remain current.

- Document Your Findings: Keep notes on which model did best on which type of query. This internal knowledge base (or “benchmark”) helps future decisions. For example, you might note “for legal text analysis, GPT-4o gave more accurate extractions in our tests,” in line with Vellum’s evaluation (vellum.ai).
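The “Consider Cost” and “Use Model-Switching Tools” tips combine into a common pattern: try models in order (often cheapest first) and move to the next one only when an answer fails a check. This Python sketch assumes each model is a plain function from prompt to answer (the stubs are hypothetical stand-ins for real API calls) and that you supply your own acceptance check; the length-and-refusal test used here is only an illustration.

```python
from typing import Callable, List, Optional, Tuple

Model = Tuple[str, Callable[[str], str]]


def ask_with_fallback(
    prompt: str,
    models: List[Model],
    is_acceptable: Callable[[str], bool],
) -> Tuple[Optional[str], Optional[str], List[str]]:
    """Try models in order; return (model_name, answer, models_tried).
    model_name and answer are None if no model produced an acceptable answer."""
    tried: List[str] = []
    for name, ask in models:
        tried.append(name)
        try:
            answer = ask(prompt)
        except Exception:
            continue  # treat errors such as timeouts or rate limits as a failed attempt
        if is_acceptable(answer):
            return name, answer, tried
    return None, None, tried


def looks_complete(answer: str) -> bool:
    """Illustrative check: non-trivial length and not an obvious refusal."""
    return len(answer.strip()) >= 20 and not answer.lower().startswith("sorry")


# Hypothetical stand-ins for a cheaper and a pricier model.
def cheap_model(prompt: str) -> str:
    return "Sorry, I cannot help with that."


def premium_model(prompt: str) -> str:
    return "Open Settings > Account > Reset password, then follow the link we email you."


name, answer, tried = ask_with_fallback(
    "How do I reset my password?",
    [("cheap", cheap_model), ("premium", premium_model)],
    looks_complete,
)
print(tried, "->", name, ":", answer)
```

Logging `tried` for each query also gives you the data for the “cost per good answer” tally: the share of queries that stop at the first model is the cheap model’s success rate.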

By following these tips and continuously evaluating, you’ll make the most of testing AI output across multiple models. Over time, this practice will improve both the quality of your AI-driven work and your understanding of the AI landscape.

FAQs

Q: Why is it important to compare AI outputs from multiple models?

A: Because each AI model has unique strengths and weaknesses. Comparing outputs side by side lets you catch mistakes (like factual errors) and choose the best answer style. It’s similar to how scholars consult multiple sources. Multi-model comparison ensures you don’t rely on a single perspective and helps identify which model is most suitable for your task (ncbar.org).

Q: What are some top tools for comparing AI models?

A: Popular tools include Aizolo (an all-in-one comparison workspace), Poe (Quora’s AI chat app supporting GPT, Claude, Gemini, etc.; ncbar.org), ChatHub (browser app with many chatbot models; chathub.gg), Janitor AI (for character-driven chats with different backends; decodo.com), Ithy (aggregates ChatGPT/Gemini/Perplexity into one report; ncbar.org), and SNEOS (quick side-by-side comparison of GPT, Claude, Gemini; ncbar.org). Each has its strengths for different use cases.

Q: How do AI models typically differ in tone and factual accuracy?

A: In tests, GPT (OpenAI’s models) often provides very accurate, formal answers (GPT-4 Turbo’s hallucination rate is reported at ~1.7%; blog.type.ai), while Claude (Anthropic’s models) tends to be more conversational and creative (blog.type.ai). Claude has strong ethical guardrails and can be more expressive, whereas GPT might write more dryly. Gemini (Google’s model) is especially good with multimodal inputs and creative tasks. Benchmarks suggest that for complex reasoning, GPT still holds a slight edge, but Claude has caught up and excels in extended contexts (blog.type.ai). The best way to know is to test: give all models the same prompt and compare the outputs for tone and accuracy.

Q: Can I use these multi-model testing platforms for free?

A: Many have free tiers. For example, Poe allows a limited number of free messages (ncbar.org). ChatHub has a free plan to get started. Ithy and SNEOS have free versions with some limits (Ithy, for instance, caps free use at 10 questions per day). Aizolo may offer a free trial. For heavy use, paid plans or API keys are needed. Always read the terms: free tools may share your prompts with model providers, so avoid confidential queries (ncbar.org).

Q: How do I choose which model to use for my project?

A: It depends on your priorities. Use multi-model testing to gather data: see which model’s outputs you prefer in your domain. Generally, if you need factual reliability and you’re okay with a formal tone, a GPT-4 model is a safe bet. If you want engaging, human-like prose or have very long contexts, try Claude Sonnet 4 with its 1M-token window (blog.type.ai). For multimodal tasks (images/audio), try Gemini. Consider speed and cost too: for a fast, lightweight model, Mistral or Claude 3.5 might be better than GPT-4. The “right” model is often the one that minimizes issues in the multi-model tests you’ve run.

Summary: Testing AI output across multiple models is a crucial step for any researcher, educator, or creator who wants reliable, high-quality results. By using modern tools and following the comparisons above, you can harness the strengths of GPT, Claude, Gemini, Mistral, and others together. This ensures you choose the best model (or combination of models) for each task, reducing errors and unlocking creative possibilities.
